Legendary Gamer


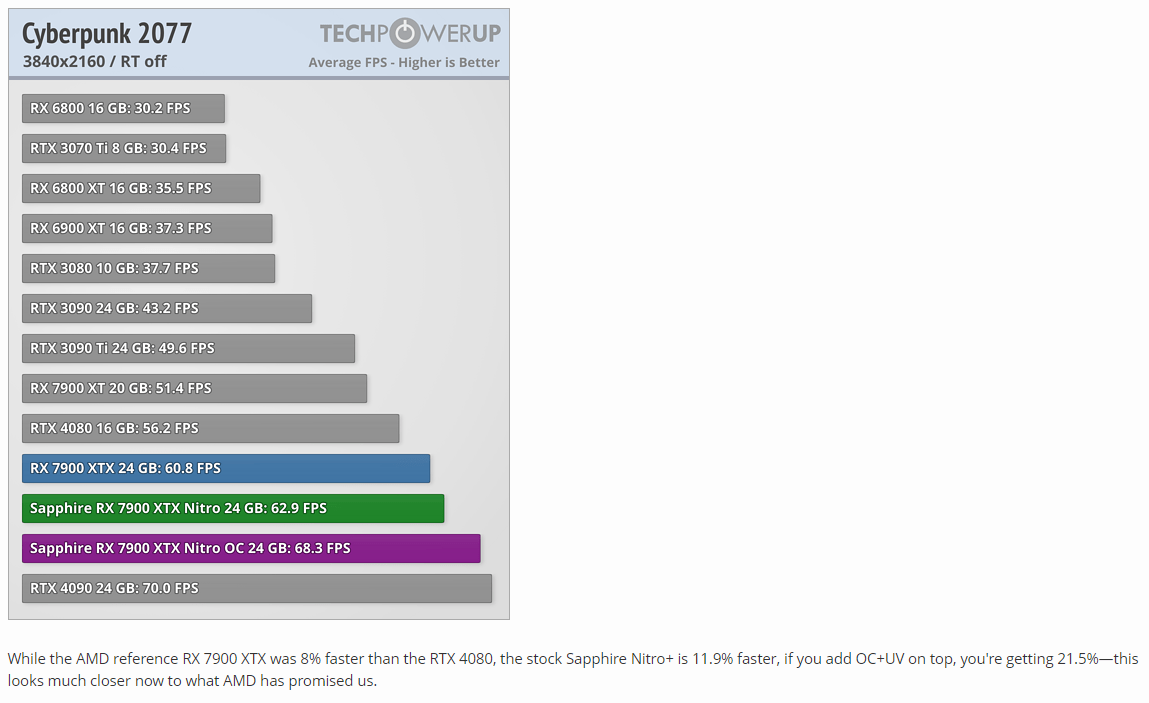

Yeah, I'm curious to see what those cards actually draw. Hell, the reference model is showing intermittent spikes above its supported power draw, up to 455 W or something like that. Overclocking a 4090 might get you 4-5% more performance while hitting 500 W+.

So far the good AIB 7900 XTXs are showing around a 21% performance gain when overclocked, while drawing around 420 W+.

It probably all levels off when you have ample power though.

I will let you guys know if I hate the reference card, but I suspect I am going to love just being over 60 FPS at 4K with max settings in Cyberpunk, and it's probably gonna make my modded-to-the-gills MW5 playthroughs amazing too.
