What is the 5800x? I saw that the 5700x is the 8 core sku.

I've seen rumors calling it the 5700X and rumors calling it the 5800X. Either way whatever they end up calling the 8-core SKU; I think the price will be $350-400.


AMD 5900X rumors

- Thread starter vjhawk

- Start date

I've seen rumors calling it the 5700X and rumors calling it the 5800X. Either way whatever they end up calling the 8-core SKU; I think the price will be $350-400.

If the 12 core comes in at 499 I will probably swing for that, otherwise, 8 or 10 core for me.

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

That's almost certainly not going to happen. Expect AMD to charge performance-leadership prices.

That's almost certainly not going to happen. Expect AMD to charge performance-leadership prices.

3900x/xt were $499 at launch, weren't they? And $749 for the 3950x I believe. I guess we will see in a week.

pippenainteasy

[H]ard|Gawd

- Joined

- May 20, 2016

- Messages

- 1,158

Still going Intel.

I have no idea why.

Mindshare. Same reason people buy Bose speakers.

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

3900x/xt were $499 at launch, weren't they? And $749 for the 3950x I believe. I guess we will see in a week.

They were $499 at launch and did not have comprehensive performance leadership. Zen 3 likely will.

Southpaw_Joe

n00b

- Joined

- Sep 28, 2020

- Messages

- 7

They were $499 at launch and did not have comprehensive performance leadership. Zen 3 likely will.

Yea coming from a 3900XT no point in upgrading atm. Only way i'm going to really go for a new cpu is if im getting 5ghz on all cores while gaming. Fell for that "4.7ghz" boost marketing trick, when in reality for a gamer its like 4.2ghz, so not gonna fall for that scam again.

Yea coming from a 3900XT no point in upgrading atm. Only way i'm going to really go for a new cpu is if im getting 5ghz on all cores while gaming. Fell for that "4.7ghz" boost marketing trick, when in reality for a gamer its like 4.2ghz, so not gonna fall for that scam again.

what cooler are you running?

legcramp

[H]F Junkie

- Joined

- Aug 16, 2004

- Messages

- 12,401

Mindshare. Same reason people buy Blose speakers.

ftfy

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,578

Yea coming from a 3900XT no point in upgrading atm. Only way i'm going to really go for a new cpu is if im getting 5ghz on all cores while gaming. Fell for that "4.7ghz" boost marketing trick, when in reality for a gamer its like 4.2ghz, so not gonna fall for that scam again.

Yeah, the max boost for CPUs has always been a bit of a marketing trick. The advertised "max" boost is usually for 1 or 2 threads. Intel has been doing that forever, and I don't think they even let you see any sort of official boost table anymore, since the 7 or 8 series.

However, with Zen, you can turn off SMT to limit the threads and thus get better boosts for gaming. Here is TechPowerUp's clock table for the 3900x at launch (which, in reality, is slightly better now after BIOS updates; at launch the 3900x couldn't actually hit max advertised boost, but that was fixed):

https://www.techpowerup.com/review/amd-ryzen-9-3900x/20.html

Anyway, if you turn off SMT and/or closely manage which cores are active, you can get 100-200 more MHz for gaming.

GN did a video about the 3900XT, where they simply turned off SMT and some nice gains were achieved in some games:

Southpaw_Joe

n00b

- Joined

- Sep 28, 2020

- Messages

- 7

what cooler are you running?

Noctua NH-U12A with upgraded fans. Under load it sits at about 72ish, and idle anywhere from 32-45 with its normal "spikes". While gaming it sits 57-64ish.

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

Yea coming from a 3900XT no point in upgrading atm. Only way i'm going to really go for a new cpu is if im getting 5ghz on all cores while gaming. Fell for that "4.7ghz" boost marketing trick, when in reality for a gamer its like 4.2ghz, so not gonna fall for that scam again.

Clockspeeds don't matter, performance does. If these things came out topping out at 3 GHz but demolished a 10900K in gaming, would you really care about the number?

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,578

Clockspeeds don't matter, performance does. If these things came out topping out at 3 GHz but demolished a 10900K in gaming, would you really care about the number?

yeah but clockspeed currently correlates to more game performance. The two architectures are more or less equal in performance. So, clockspeed marks the winner.

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

yeah but clockspeed currently correlates to more game performance. The two architectures are more or less equal in performance. So, clockspeed marks the winner.

That's not how that works at all. They are roughly equal in terms of IPC, so higher clocks can make a difference, but that's actually not the reason Intel leads in low-resolution gaming. That actually has to do with the increased latency of AMD's design due to having to shuttle data all over the place (over the substrate!) via the Infinity Fabric. Hopefully this is one area where Zen 3 will offer solid improvements.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,578

That's not how that works at all. They are roughly equal in terms of IPC, so higher clocks can make a difference, but that's actually not the reason Intel leads in low-resolution gaming. That actually has to do with the increased latency of AMD's design due to having to shuttle data all over the place (over the substrate!) via the Infinity Fabric. Hopefully this is one area where Zen 3 will offer solid improvements.

It seems to be title specific and not a general rule for games. TechPowerUp tested 10 games for the 3600xt and 3900x and only two showed indications of such an issue. And even then, there are only a couple of standout Intel chips which seem to have something going on, which belies their lower clock speed (i5-10400). But overall, I'm having a tough time finding numbers which support the general idea that AMD's supposed latency issues are the underlying problem. 10 games isn't a huge sample size, but it seems like latency inside the CPU isn't that big a deal for some games. Maybe most games. I'm not saying it technically isn't an issue. But most games seem to be mostly clockspeed sensitive.

If you look closely, some games do better on Ryzen with an all-core overclock which is much lower than their boost clock, which further suggests clock sensitivity for gaming. We would need to examine the frequency graphs for CPUs on a game-by-game basis to really see which ones are actually latency sensitive, and not actually still clock sensitive and really suffering because Ryzen boost tables don't scale well with some games.

It seems to be title specific and not a general rule for games. TechPowerUp tested 10 games for the 3600xt and 3900x and only two showed indications of such an issue. And even then, there are only a couple of standout Intel chips which seem to have something going on, which belies their lower clock speed (i5-10400). But overall, I'm having a tough time finding numbers which support the general idea that AMD's supposed latency issues are the underlying problem. 10 games isn't a huge sample size, but it seems like latency inside the CPU isn't that big a deal for some games. Maybe most games. I'm not saying it technically isn't an issue. But most games seem to be mostly clockspeed sensitive.

If you look closely, some games do better on Ryzen with an all-core overclock which is much lower than their boost clock, which further suggests clock sensitivity for gaming. We would need to examine the frequency graphs for CPUs on a game-by-game basis to really see which ones are actually latency sensitive, and not actually still clock sensitive and really suffering because Ryzen boost tables don't scale well with some games.

The 3600XT has the same latency penalty. What you have to do is look at the 3300X, as it uses a single CCX and doesn't suffer the same latency penalties. With Zen 3 moving to an 8-core CCX, the latency penalties on anything using a single CCX will be eliminated or at least mostly reduced, and that's without taking into account any possible advances to the architecture.

In most cases Zen 2 is the same or higher IPC. Gaming just happens to be one of the few cases where the IPC takes a hit because of latencies. Clock speed alone means nothing. High clock speed with lousy IPC, also known as the Intel Netburst architecture, was destroyed by much higher IPC and much lower clock speed A64. IPC means Instructions Per Clock. At the current time Intel's lower IPC is offset by the higher clock speeds.
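To put rough numbers on the IPC point: throughput scales with IPC times clock, so a lower-clocked chip can still come out ahead. Here is a minimal Python sketch of that. The IPC and clock values are made up purely for illustration and are not measurements of Netburst, A64, or any other real CPU, and `relative_throughput` is a hypothetical helper name.

```python
def relative_throughput(ipc: float, clock_ghz: float) -> float:
    """Billions of instructions per second = instructions per clock * clocks per second (GHz)."""
    return ipc * clock_ghz


# Hypothetical values for illustration only, not benchmark data.
high_clock_low_ipc = relative_throughput(ipc=1.0, clock_ghz=3.8)
low_clock_high_ipc = relative_throughput(ipc=1.8, clock_ghz=2.4)

print(f"high clock, low IPC: {high_clock_low_ipc:.2f}")
print(f"low clock, high IPC: {low_clock_high_ipc:.2f}")
print("lower-clocked chip wins:", low_clock_high_ipc > high_clock_low_ipc)
```

This ignores memory latency, boost behavior, and everything else that makes real games messy; it only shows why the clock number alone says nothing.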

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

It seems to be title specific and not a general rule for games. TechPowerUp tested 10 games for the 3600xt and 3900x and only two showed indications of such an issue. And even then, there are only a couple of standout Intel chips which seem to have something going on, which belies their lower clock speed (i5-10400). But overall, I'm having a tough time finding numbers which support the general idea that AMD's supposed latency issues are the underlying problem. 10 games isn't a huge sample size, but it seems like latency inside the CPU isn't that big a deal for some games. Maybe most games. I'm not saying it technically isn't an issue. But most games seem to be mostly clockspeed sensitive.

If you look closely, some games do better on Ryzen with an all-core overclock which is much lower than their boost clock, which further suggests clock sensitivity for gaming. We would need to examine the frequency graphs for CPUs on a game-by-game basis to really see which ones are actually latency sensitive, and not actually still clock sensitive and really suffering because Ryzen boost tables don't scale well with some games.

Have a look at the following article Techspot did around the launch of Zen 2: https://www.techspot.com/article/1876-4ghz-ryzen-3rd-gen-vs-core-i9/

Zen 2 just barely edges out Coffee Lake in terms of IPC and wins every rendering test, but still loses to the 9900K in gaming tests. The synthetic tests tell the story of why: despite higher cache and memory bandwidth, better cache latency, and better latency between the best-matched cores (i.e. cores within the same CCX), the latency to main memory and the worst-matched cores (those in another CCX) are significantly worse for Zen 2 than for Coffee Lake. This shortcoming is one of the reasons why AMD endowed Zen 2 with such a large amount of L3 cache: they need to keep as much data as possible close to the cores to avoid making costly trips off-die. This explains why Intel's advantage is most apparent at high framerates; those cycles that AMD loses when fetching data are less impactful when it's taking longer to do the work overall. This analysis is also supported by the fact that increasing the IF clock (thereby reducing the latency penalty) is one of the best avenues for increasing performance on Zen 2 CPUs.
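The high-framerate argument can be sketched as a toy model: if every frame pays some fixed stall waiting on memory or cross-CCX traffic, that stall eats a bigger share of a short frame than a long one. This is a rough Python sketch under that assumption only. The 0.5 ms stall and the per-frame work times are hypothetical, and real stalls are not a fixed cost per frame.

```python
def fps(work_ms: float, stall_ms: float = 0.0) -> float:
    """Frames per second when each frame costs work_ms of useful work
    plus stall_ms spent waiting on data."""
    return 1000.0 / (work_ms + stall_ms)


def fps_loss_pct(work_ms: float, stall_ms: float) -> float:
    """Percentage of frame rate lost to the stall, relative to no stall."""
    return 100.0 * (1.0 - fps(work_ms, stall_ms) / fps(work_ms))


# Hypothetical numbers: the same 0.5 ms stall at two different frame costs.
STALL_MS = 0.5
for work_ms in (4.0, 16.0):
    print(f"{work_ms:>4} ms of work per frame: "
          f"{fps(work_ms):.0f} fps ideal, "
          f"{fps_loss_pct(work_ms, STALL_MS):.1f}% lost to the stall")
```

In this model the loss works out to stall / (work + stall), so the same stall costs proportionally less as the frame takes longer, which matches the idea that the gap shrinks once the GPU becomes the limit.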


Southpaw_Joe

n00b

- Joined

- Sep 28, 2020

- Messages

- 7

Clockspeeds don't matter, performance does. If these things came out topping out at 3 GHz but demolished a 10900K in gaming, would you really care about the number?

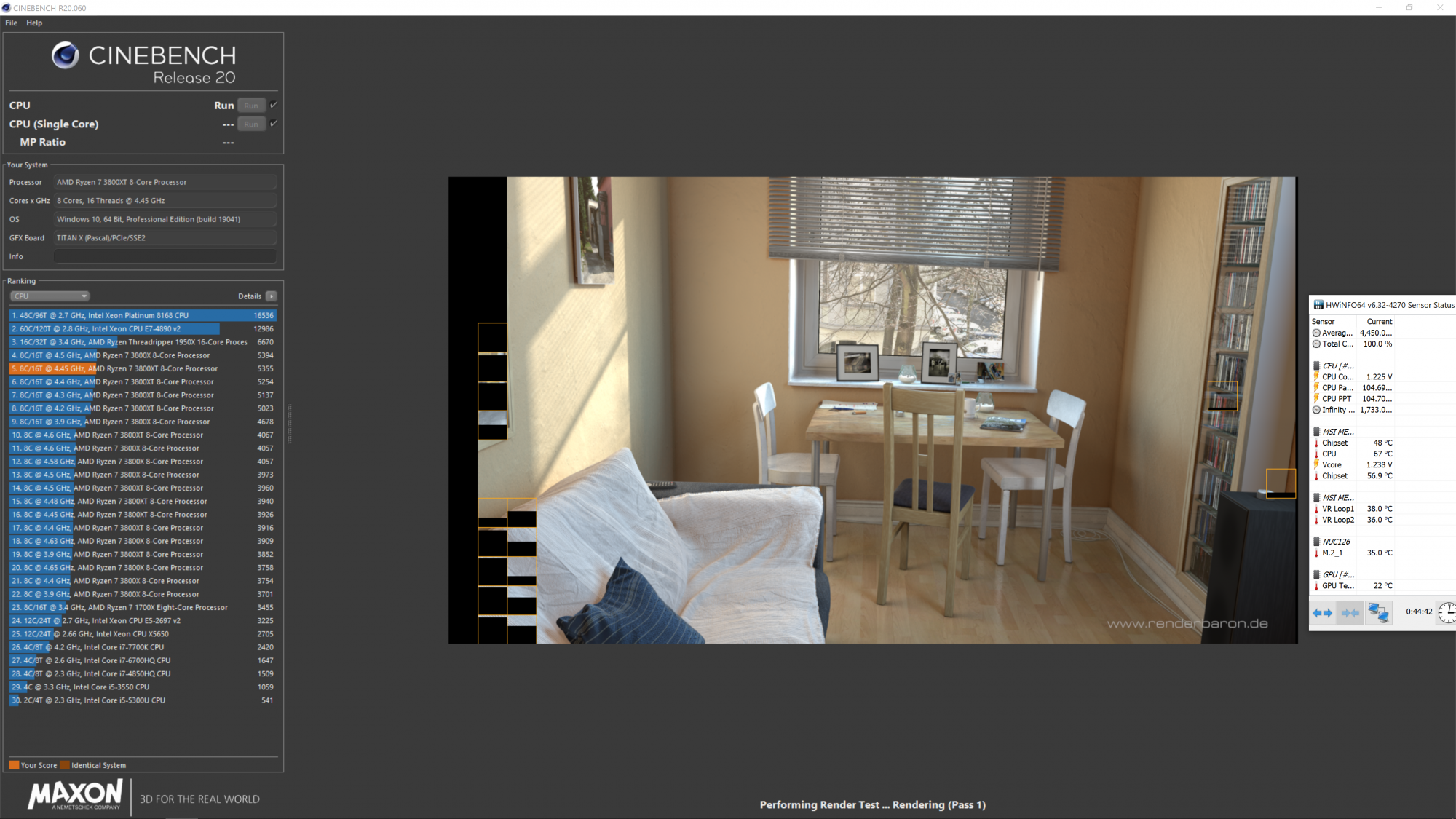

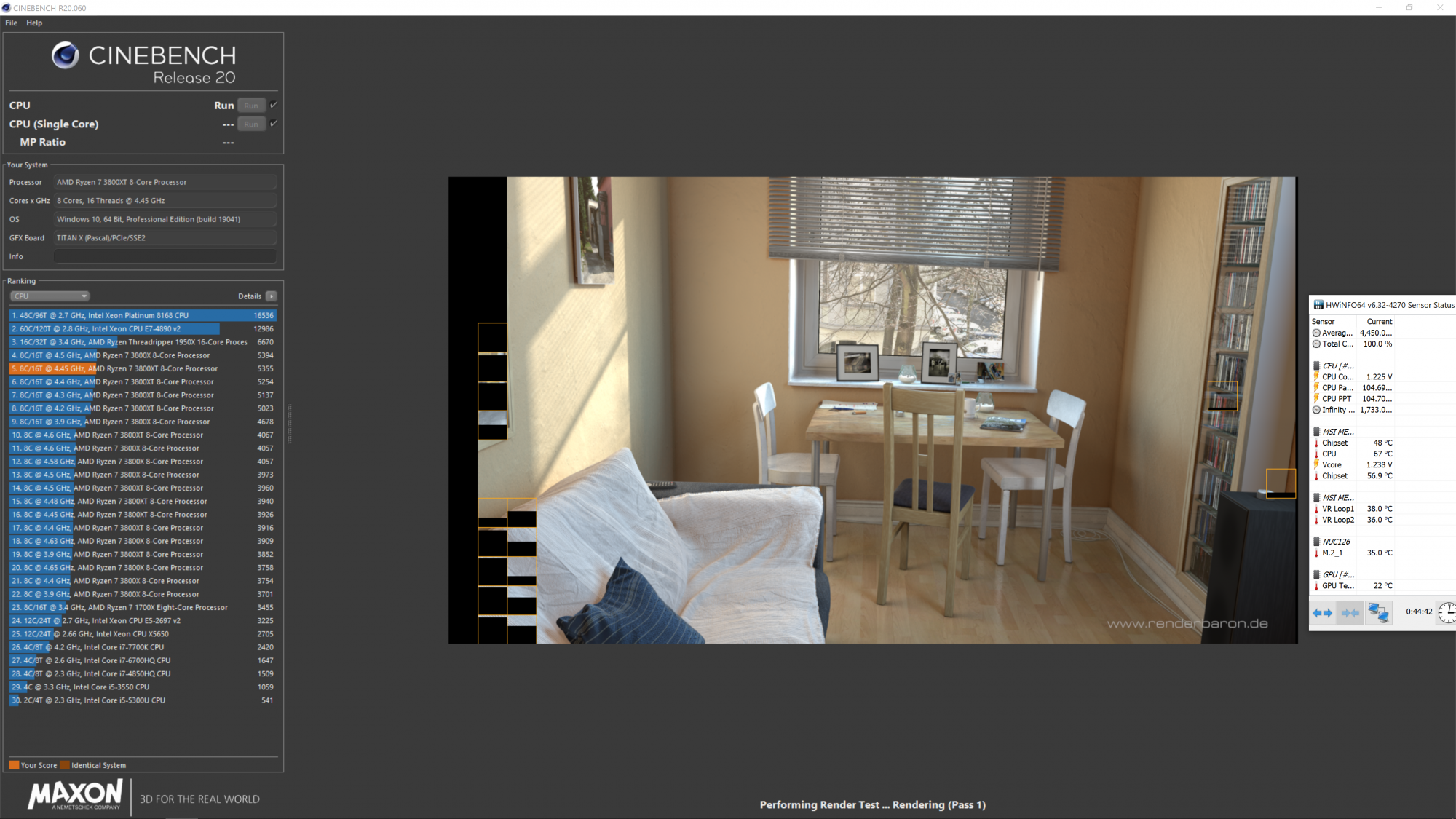

Weird, when I do a manual overclock on this 3900XT to 4.65ghz all core my fps goes up nearly 30 on the low end and top end. My score in cinebench skyrockets as well (so does temps lol). So to say that clockspeed doesn't matter is so weird lol, when its a night and day difference when I increase it. In this case, the clockspeed does matter, but for whats coming out I guess we will have to see whats in store. But im not falling for their marketing scam of "max boost clock" lol, ill wait for GN, or Jay2Cents, and few others to do some testing.

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

Weird, when I do a manual overclock on this 3900XT to 4.65ghz all core my fps goes up nearly 30 on the low end and top end. My score in cinebench skyrockets as well (so does temps lol). So to say that clockspeed doesn't matter is so weird lol, when its a night and day difference when I increase it. In this case, the clockspeed does matter, but for whats coming out I guess we will have to see whats in store. But im not falling for their marketing scam of "max boost clock" lol, ill wait for GN, or Jay2Cents, and few others to do some testing.

Quite obviously I mean "clockspeed alone doesn't matter." Clock any chip higher and performance will go up, but it doesn't matter one bit if a 10900K hits 5 GHz and a 5900X doesn't if the 5900X performs better.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,578

Quite obviously I mean "clockspeed alone doesn't matter." Clock any chip higher and performance will go up, but it doesn't matter one bit if a 10900K hits 5 GHz and a 5900X doesn't if the 5900X performs better.

Sure, but I think the underlying point is that no consumer CPU architecture is good enough right now to dramatically offset clockspeed. At least, that was the point I wasn't really communicating well. And I seriously doubt we will see a situation from AMD where they release a massively efficient architecture which still doesn't clock well. All indications point to them having figured out their clockspeed issues with their fab processes. So, even if the latency issue is only partially addressed, I still expect to see nice gains, simply from more megahertz. And if we do get an amazing new architecture, I think it will still clock well.

learners permit

[H]ard|Gawd

- Joined

- Jun 15, 2005

- Messages

- 1,796

I'm mostly interested to see if the boost behaviors with smt off have been revised proficiently. That will surely help with power scaling clockspeeds and result in even higher sustained all core clocks. Most games at this point in time would likely see a performance increase in fps. Hopefully some of the x box data would help with a solution.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

105w vs 150w for overclocking? Why would something pushed to 150w overclock better than something that's sipping power at 105w? If anything there is more room at 105w to overclock because you can turn up the voltage. If it's 150w just to hit stock clocks, that doesn't leave much room for OC. I think you've got it backwards.

My mini ITX has a 1000w PSU, it's not the PSU I'd be concerned about, it's the amount of heat I have to remove from my case. I mean, really I'm not that concerned personally, but in general that's the concern.

because AMD's TDP ratings are 100% garbage and everyones known that for years.. they come up with their TDP based on an algorithm, not on actual power draw.. the 3900x was a 150w TDP chip with stock boost, 115w base clock. only hope would be that AMD finally listened to all the criticism they've been receiving for the last 10 friggin years and finally switched to a true power draw rating but not going to keep my hopes up on that one. intel's are just as garbage since their TDP is power draw at base clock.

learners permit

[H]ard|Gawd

- Joined

- Jun 15, 2005

- Messages

- 1,796

I dunno they look pretty spot on to me if correctly tuned even near advertised maximum boost clock!!

because AMD's TDP ratings are 100% garbage and everyones known that for years.. they come up with their TDP based on an algorithm, not on actual power draw.. the 3900x was a 150w TDP chip with stock boost, 115w base clock. only hope would be that AMD finally listened to all the criticism they've been receiving for the last 10 friggin years and finally switched to a true power draw rating but not going to keep my hopes up on that one. intel's are just as garbage since their TDP is power draw at base clock.

At the very least it depends on what CPU you're talking about. My Ryzen 2600x running all threads maxed out will occasionally go above 95w but has never that I've seen even hit 100w. That's with the boost on all cores sitting around 4100 to 4150. I imagine if I could keep the CPU cooler it would go a bit higher yet and I'd still be surprised if it went over 100w.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

At the very least it depends on what CPU you're talking about. My Ryzen 2600x running all threads maxed out will occasionally go above 95w but has never that I've seen even hit 100w. That's with the boost on all cores sitting around 4100 to 4150. I imagine if I could keep the CPU cooler it would go a bit higher yet and I'd still be surprised if it went over 100w.

yes it does, lol. at the actual EPS cables the 2600x draws upwards of 115w and over 130w at 4.2Ghz all core, don't ever trust what cpu-z says. as a comparison the 2700x pulls ~140w stock, ~190w at 4.2Ghz.

because AMD's TDP ratings are 100% garbage and everyones known that for years.. they come up with their TDP based on an algorithm, not on actual power draw.. the 3900x was a 150w TDP chip with stock boost, 115w base clock. only hope would be that AMD finally listened to all the criticism they've been receiving for the last 10 friggin years and finally switched to a true power draw rating but not going to keep my hopes up on that one. intel's are just as garbage since their TDP is power draw at base clock.

AMD's are very simple to figure out, while still annoying, it's not some magical thing that nobody knows about and they give you a TDP for your cooling solution, not as a # for how much watts the CPU may draw at some point.

65w * 1.354 = ~88w during boost

95w * 1.354 = ~128w during boost

105w * 1.354 = ~142w during boost

There you have it, AMD TDP demystified!!
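The same rule of thumb as a few lines of Python, in case anyone wants to plug in other TDPs. The 1.354 factor is just the multiplier quoted in this post, treated here as an assumption rather than an official spec, and `estimated_boost_watts` is a made-up helper name.

```python
BOOST_FACTOR = 1.354  # multiplier quoted in the post; a rule of thumb, not an official figure


def estimated_boost_watts(tdp_watts: float, factor: float = BOOST_FACTOR) -> float:
    """Estimate power draw during boost from the rated TDP."""
    return tdp_watts * factor


for tdp in (65, 95, 105):
    print(f"{tdp}w TDP -> roughly {estimated_boost_watts(tdp):.1f}w during boost")
```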

The way they come up with the TDP though is based on how much cooling power you need, not the actual # of watts used, whether you agree or not (and I think it's kind of annoying, but it is what it is). Also, as you mentioned Intels being "just as garbage", but they are/have been much worse. A 10900k is rated at 125w, and can hit 250w+, this is 100% more power than TDP. AMD's is ~35% across the board. A 10700k is 125w and can hit 230w, around 84%... so not only is it much higher % wise, but it's inconsistent as well so you can't just do a quick multiplication and know actual wattage.
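Those overshoot percentages are just (peak - TDP) / TDP. A quick Python sketch using only the wattage figures quoted in this post (they are forum numbers, not measurements of mine), with `overshoot_pct` as a hypothetical helper:

```python
def overshoot_pct(tdp_watts: float, peak_watts: float) -> float:
    """How far peak power draw exceeds the rated TDP, as a percentage of TDP."""
    return 100.0 * (peak_watts - tdp_watts) / tdp_watts


# (rated TDP, peak draw) pairs as quoted in the post above.
chips = {
    "10900k": (125, 250),
    "10700k": (125, 230),
    "105w AMD part": (105, 142),
}

for name, (tdp, peak) in chips.items():
    print(f"{name}: {overshoot_pct(tdp, peak):.0f}% over TDP")
```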

Either way, I still hope they meant to say 105w and that it actually draws 150w, as that would be the same as what the 3900x/3950x are doing now. If indeed it's 150w TDP, then at least you know it'll be about 150w * 1.354 = ~203 watts actual during boost, which is still less than a 10900k, and less than a 10700k. I'm just excited for it to finally come out and see some real world benchmarks. Hoping AMD finally closes the last gap. They just hit 25% on steam survey (and with as many people still rocking 3rd/4th gen Intel CPUs from before AMD was competitive, that's pretty good). If they can be within low single digits in 1080p gaming of Intel's best (and continue to win in other areas) then it's going to make it really hard to recommend Intel until they can pull their collective heads out of their rears.

I've seen rumors calling it the 5700X and rumors calling it the 5800X. Either way whatever they end up calling the 8-core SKU; I think the price will be $350-400.

Well, seeing as both the 3700x/3800x are 8-core, there is a good possibility both the 5700x/5800x are both going to be 8-core, so it may not be the rumors calling it one or the other, but both may exist.

3700x was $329, and 3800x $399, so I'm hopeful that we'll end up with an 8-core under $400. I'm mostly curious if both the 5700x /5800x are going to be a single CCD or if the 5700x might be dual CCD that couldn't make the full core count each. This would be a good product segmentation/differentiator one with dual CCD's with 4 cores each vs a single 8-core CCD, but no clue. I hope it's a single CCD, but could see it either way.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

Well, seeing as both the 3700x/3800x are 8-core, there is a good possibility both the 5700x/5800x are both going to be 8-core, so it may not be the rumors calling it one or the other, but both may exist.

3700x was $329, and 3800x $399, so I'm hopeful that we'll end up with an 8-core under $400. I'm mostly curious if both the 5700x /5800x are going to be a single CCD or if the 5700x might be dual CCD that couldn't make the full core count each. This would be a good product segmentation/differentiator one with dual CCD's with 4 cores each vs a single 8-core CCD, but no clue. I hope it's a single CCD, but could see it either way.

still have my hopes set that maybe the 5800x will be a 10 core chip given the changes to the chiplets(using 2x5 core chiplets) just as an ultimate middle finger to intel and the 5700x being the single 8 core chiplet.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

because AMD's TDP ratings are 100% garbage

Dude, they're the only ones who stick to their advertised TDP. I know this was corrected above but wtf man lol.

CyberJunk

Supreme [H]ardness

- Joined

- Nov 13, 2005

- Messages

- 4,242

That's almost certainly not going to happen. Expect AMD to charge performance-leadership prices.

mmmm nope , Intel has way too much market share.

still have my hopes set that maybe the 5800x will be a 10 core chip given the changes to the chiplets(using 2x5 core chiplets) just as an ultimate middle finger to intel and the 5700x being the single 8 core chiplet.

I'm for anything that pushes 8 cores down the price chain.

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

mmmm nope , Intel has way too much market share.

You've been around long enough to remember the Athlon 64, right? The situation then was quite a bit worse than it is now for AMD and they still released a processor that was 20% more expensive than Intel's halo part because it was comprehensively faster while also being more efficient.

CyberJunk

Supreme [H]ardness

- Joined

- Nov 13, 2005

- Messages

- 4,242

You've been around long enough to remember the Athlon 64, right? The situation then was quite a bit worse than it is now for AMD and they still released a processor that was 20% more expensive than Intel's halo part because it was comprehensively faster while also being more efficient.

These are different economic times than in 2005. They'll most likely ask $499. Maybe even $529.99 at the most.

Are you guys just pulling numbers from dark holes? 1700x was $399, 2700x and 3700x both sold for $329... you really think they're going to shoot for $529?!??! I don't see the trend you guys are seeing. I wouldn't be surprised to see the 5700x @ $349 and 5800x @ $399 (or maybe stretching it to $429), but no way it's $500+. I know AMD will and should price to maximize profits but they also want to maximize sales (aka, don't price it so high they don't sell them all). It's a fine line and I just don't see them pricing up that high this quickly. I think next generation when DDR5 hits is the time to increase prices as DDR5 and PCIE 5.0 will cause increases in R&D.

Clockspeeds don't matter, performance does. If these things came out topping out at 3 GHz but demolished a 10900K in gaming, would you really care about the number?

LMAO I have been literally made fun of on this forum for making that same suggestion.

People that do not understand how to look at big pictures are the ones that get hung up on one single stat as a deciding factor in their information-less decisions.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

LMAO I have been literally made fun of on this forum for making that same suggestion.

People that do not understand how to look at big pictures are the ones that get hung up on one single stat as a deciding factor in their information-less decisions.

I mean I could get a FX8350 to run at 5ghz. Didn't mean much however, lol.

Repo79

Weaksauce

- Joined

- Nov 30, 2019

- Messages

- 111

I'm looking forward to seeing the announcement of the 5900x, as I need to get myself a new build so my son can have the build I'm using now. Looks real promising.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

LMAO I have been literally made fun of on this forum for making that same suggestion.

People that do not understand how to look at big pictures are the ones that get hung up on one single stat as a deciding factor in their information-less decisions.

the sad reality is the numbers on the box sell before the benchmarks.. OEM's have proven that time after time and that's why they convinced AMD to release the 550M which was just a rebranded b450 because consumers thought they were buying something out dated and slower because b450 didn't have pcie 4.0. yet even in an enthusiast forum that shit still happens which is depressing.

the sad reality is the numbers on the box sell before the benchmarks.. OEM's have proven that time after time and that's why they convinced AMD to release the 550M which was just a rebranded b450 because consumers thought they were buying something out dated and slower because b450 didn't have pcie 4.0. yet even in an enthusiast forum that shit still happens which is depressing.

I mean, I kind of agree, but who was buying 550m's because they were rebranded b450's? They are new chipsets that have more features (2.5gbe, more/faster usb, pcie 4.0 for both GPU and 1st nvme). Or are you talking about the OEM 550A? The 550M is the B550M that we all know is not a rebranded b450 and does have additional features. The 550A was the B450 rebrand for the OEM market, which is probably what you're thinking/talking about, but these were not sold directly to consumers, so not sure who on an "enthusiast" forum was buying prebuilts just for a motherboard.
