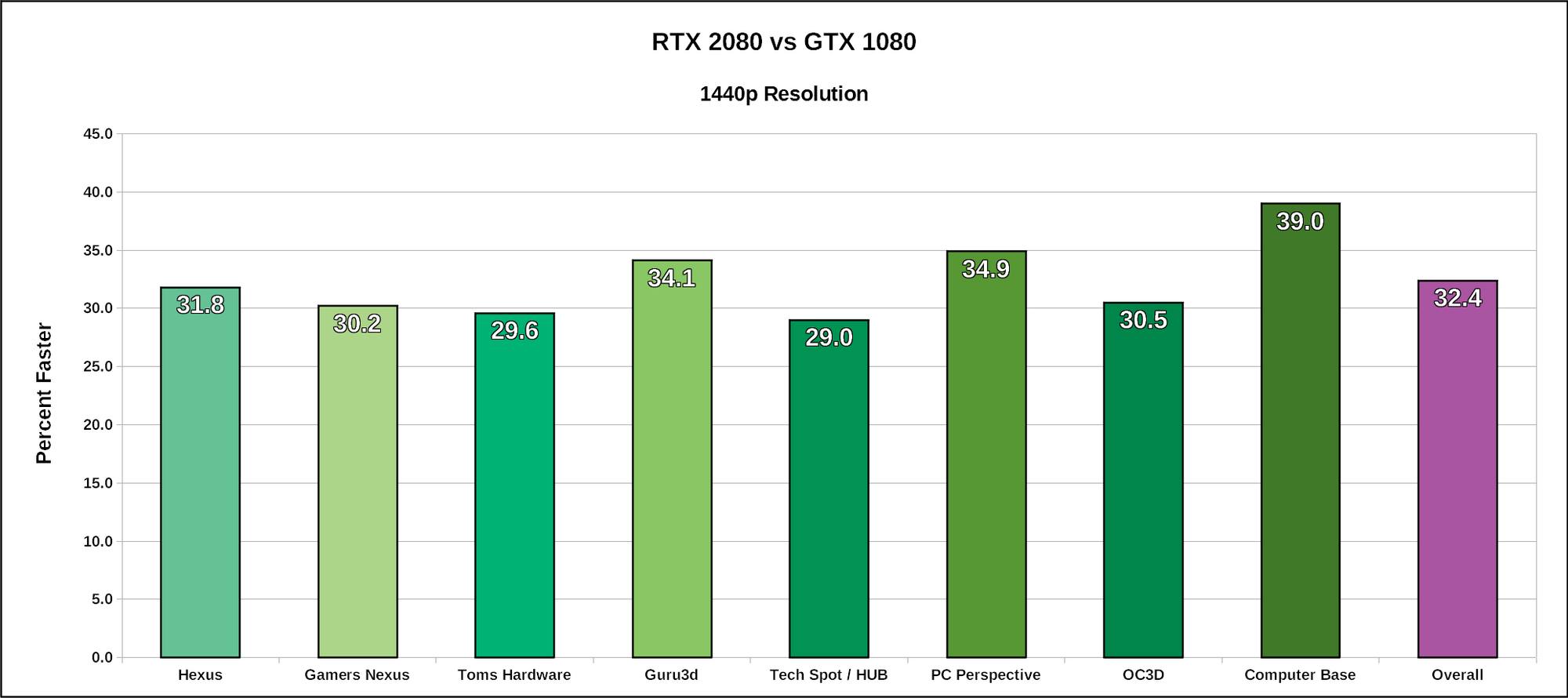

Wonder how the Vega 64 and 2080 compare at 1440p with HDR? Maybe they're not so far apart in that case. The reviews coming up will be very interesting. As for effects, from what I've seen of ray tracing so far, HDR done right would, to me, win out over ray tracing in SDR for image quality (IQ). Plus, if ray tracing performance limits it to 1080p, that's a non-starter right off the bat. Ray tracing would only be useful if performance is acceptable at 1440p or above, with HDR. I'm very much looking forward to real data, especially on which card delivers the best overall IQ in each game.

LOL, now I know why there are many FreeSync HDR monitors and virtually no GSync HDR monitors - because Nvidia performance tanks with HDR!