MavericK

Zero Cool

- Joined

- Sep 2, 2004

- Messages

- 31,892

Got it downloaded, gonna try it tonight hopefully.

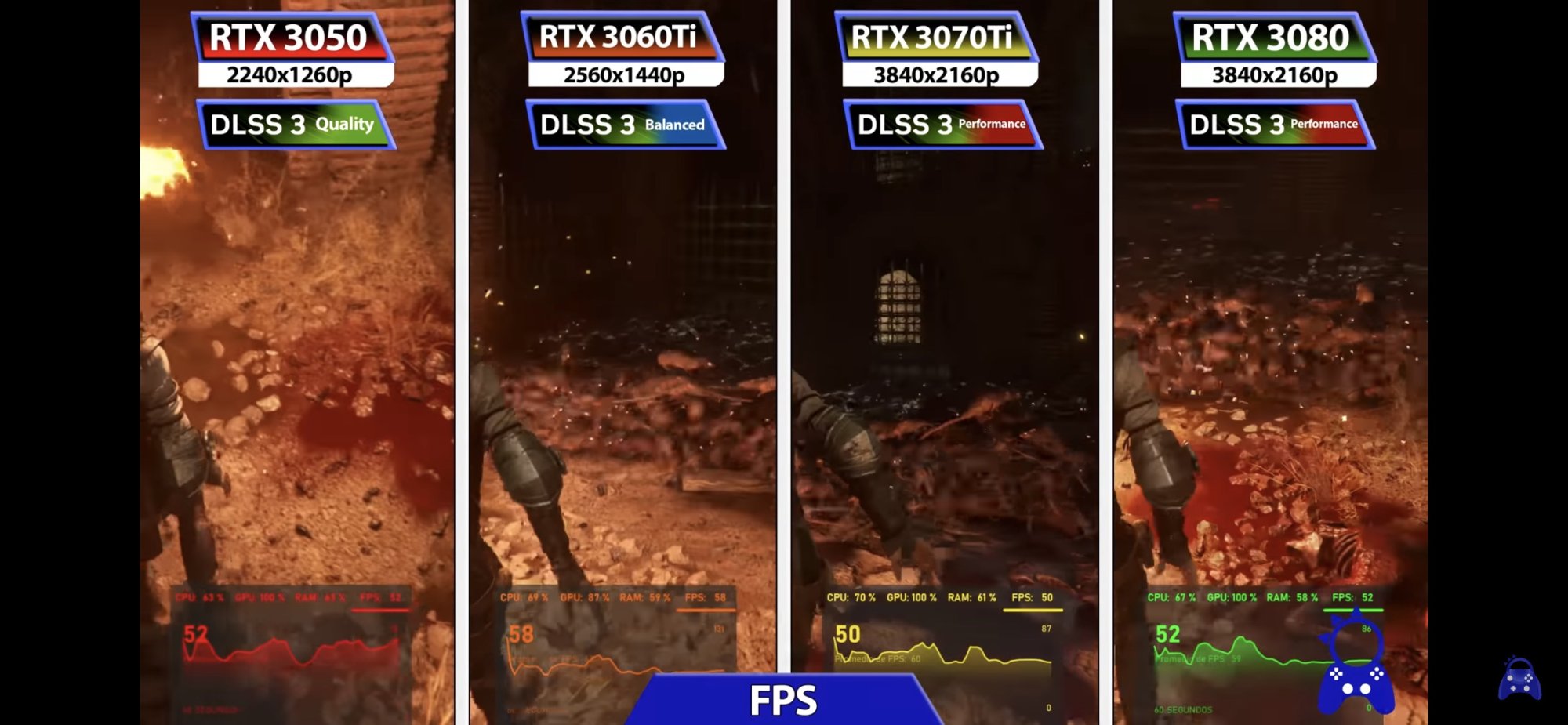

It was buggy with poor performance right after it launched at midnight, but later in the day they must have updated it, as the bugs were gone and I could see the NVIDIA Reflex option in settings too.

I hear this is a very demanding game on PC and pushes consoles to their limit...a true next-gen title...the visuals look really impressive as well...the ray-tracing patch will bring PCs to their knees

Yeah, I'm hearing good things about it in Spider-Man also. Sounds like it might be better than the shock youtubers claimed.

On a serious note. I just tried the game out 4k max settings no dlss. Low 60s - low 70s. With dlss off and no frame generation the frame rate is pretty close to double. Add dlss quality and it’s 160-170.

First time trying frame generation. Didn’t feel any lag. Input felt quicker couldn’t really tell there were fake frames at play.

Damn impressed.

Nah man, the only plebs are console players.

Yeah, I'm hearing good things about it in Spider-Man also. Sounds like it might be better than the shock youtubers claimed. You should be all set once the raytracing support hits this game! - Signed, an RTX 3080 10gb (Dec 2020) pleb.

Game is locked to 30 FPS on consoles, 40 FPS if you run your display at 120 Hz. Console plebs are reporting the game can't even maintain those numbers, that 40 FPS feels worse than 30.

View attachment 519542

I wonder what the console peasants are doing?
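For what it's worth, the 40 FPS mode being tied to a 120 Hz display presumably comes down to frame pacing: 40 divides evenly into 120 but not into 60. Here's a rough sketch of that arithmetic, assuming a fixed-refresh display with no VRR (the function names are made up for illustration and have nothing to do with the game or console APIs):

```python
from fractions import Fraction


def refreshes_per_frame(refresh_hz: int, fps: int) -> Fraction:
    """How many display refreshes each game frame stays on screen."""
    return Fraction(refresh_hz, fps)


def paces_evenly(refresh_hz: int, fps: int) -> bool:
    """True if every frame is held for the same whole number of refreshes."""
    return refreshes_per_frame(refresh_hz, fps).denominator == 1


def frame_time_ms(fps: int) -> float:
    """Time budget per frame in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Assumption: fixed-refresh display, no VRR.
    for refresh_hz, fps in [(60, 30), (60, 40), (120, 40)]:
        ratio = refreshes_per_frame(refresh_hz, fps)
        verdict = "even" if paces_evenly(refresh_hz, fps) else "uneven"
        print(
            f"{fps} fps on {refresh_hz} Hz: {float(ratio):.1f} refreshes per frame "
            f"({frame_time_ms(fps):.1f} ms per frame), {verdict} pacing"
        )
```

On a 60 Hz panel, 40 fps would need each frame held for 1.5 refreshes, which can't be done evenly, so a 40 fps cap only makes sense at 120 Hz (3 refreshes per frame). That only holds if the game actually hits its 25 ms frame budget, which is apparently the problem people are reporting.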

Also hearing that there's some problem with vsync/gsync/etc. where people are getting screen tearing, even console players.

Yeah that’s the joke. “<British orphan voice> Please sir may I have another frame.”

If you don't have VRR you can enable Adaptive V-Sync in the NVIDIA control panel. It will override the game setting.

Not sure if anyone else with a 4090 is noticing that this game with frame generation kicks out over 200fps but vsync is disabled. I'm seeing screen tearing all over the place. It looks terrible. I can't use DLSS 3. DLSS 2 in quality mode is still pushing out over 120fps which is my monitor refresh rate and the game runs silky smooth.

I tried this game on my Xbox Series X and it runs at 30fps with no performance mode option.

I am not seeing any tearing and I have frame gen enabled without vsync but then I am running on a 240 Hz monitor.

But best part is I am playing this on the Xbox Series X and it runs dope even on that.

Night time Xbox

Day time 4090

This game is so depressing it is stunning.

Anything bigger than a 2% window brings peak HDR brightness down to 400 nits from 1,000, just so you're aware (Rtings notes a "real scene" is typically around 480 nits). The DisplayHDR certification on the AW3423DW is only for "True Black 400" since it can't (or won't) sustain 1,000 nits. Seems like Samsung is scared to death of burn-in.

Completed it just now, piled in the last couple of hours this evening and didn't realise what the time was.

Well that was an experience for sure, definitely felt like being part of a long story saga, and one of the few games I actually completed without giving it up halfway through due to boredom or bugs etc. (Horizon, Prey, Dying Light 2, Res Evil 2 Remake, God of War, Res Evil 3 Remake and Res Evil Village were the last games I completed in August for reference, Cyberpunk earlier in the year).

There's one scene where the sun is undisturbed as it sets over the water. The bright glow from it looks especially superb on QD-OLED at HDR 1000, with a sense of squinty glare and realism, especially when you move the mouse camera around: the optical effects play with the view as if you're viewing the scene through a lens, which gives the impression of a drone camera behind Amicia.

View attachment 521383

Also the final scene reaching the summit was pretty stunning, but no screenshots of that as it might spoil a little surprise well worth seeing for yourself.

The particle effects and level of detail are pretty incredible on this engine, and on the whole, even though it's not as optimised as desired, it ran well and convinced me that for gaming, the upgrades I've done to my PC this year have been totally worth it by delivering a 99% smooth experience with high framerates and low frametimes without having to sacrifice any GFX quality setting.

View attachment 521384

^^ 12700F, 64GB 3600 @ 3200, 3080 Ti FE @ 1785 (850mV), Dell AW3423DW QD-OLED

I'd like to see more games with this sort of sustained performance. If the level of detail and SFX in the engine is this high, then I don't care if it sometimes dips to the 70s, for example. G-Sync handles the transition from triple-figure to double-figure fps seamlessly.

I won't be replaying though, as it's not the type of game that has any replay value to me, but there will be another one in the series judging by the Easter egg scene at the end, so don't quit out to main menu when the first credits roll.

Not saying it doesn't look amazing. My LG and AORUS typically only go up to 600 nits in full scenes. The per-pixel lighting and infinite contrast go a long way on an OLED. My PG27UQ can peak at 1,100 nits at 30%, but it doesn't look nearly as good as the OLEDs.

I know this, I have yet to notice the drop from 1060 to 480~ as typically nothing in the games I have played has had max brightness areas dominating the screen area. The sun example in the above post is to highlight where nits like peak 1000 really do come into their own, as the sun in that scene is so bright you squint looking directly at it until the eye adjusts.

It's a non-issue that people seem to reference often online citing reviews - In actual use and practice it's perfectly fine and rather remarkable vs any other monitor on the market.

I'd prefer more companies try and develop and/or keep their own bespoke engines. I don't think they could have been able to reach such a level of detail and care if they used UE5.

What blows me away a bit is that this is on their own engine. If they can transfer that level of skill to UE5 (which seems to be relatively easy to develop on), I'm expecting great things!

I am quite agnostic on the second part. Maybe it would be the complete opposite, with more time, money and effort going into the details and care than the mechanics, but I also reflexively prefer that some competition between engines stays alive.