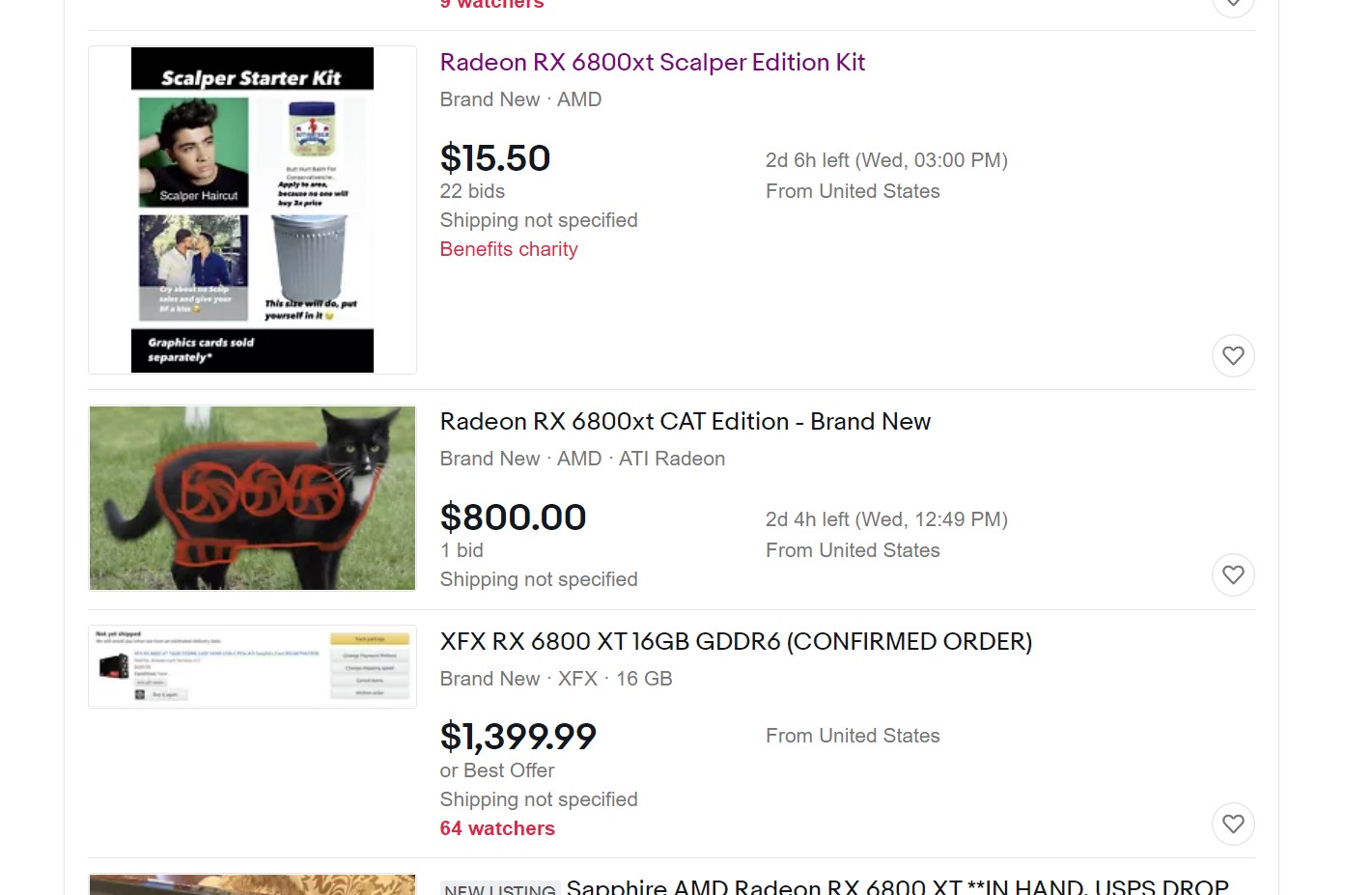

That's not the feeling I get when I try to buy them on Amazon. There is literally a line of people in front of the store who want to pay 3x more for products that do ridiculous things, even though $400 consoles that game really well and the 5700 XT exist. No one wants to pay 3x more for the modern version of the same thing, after correcting for inflation.

There is a giant group of people who want to pay that price, and that's why they make those products. Otherwise we would be gaming with console graphics and console performance at console prices.

That said, about this part:

The option is available for those who can and are willing to buy it.

If you don't want the most expensive option, there are cheaper options.

There aren't enough of them, at least right now. There are not many interesting cheap options on Newegg. If there were a $350 RTX 3060 or a cheap 2060/2070/5700 XT, it would be true, but older cards are incredibly expensive right now.
