gerardfraser ([H]ard|Gawd; joined Feb 23, 2009; 1,366 messages)

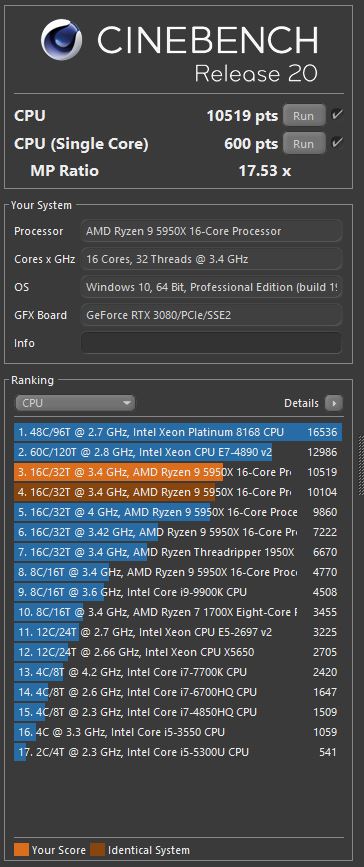

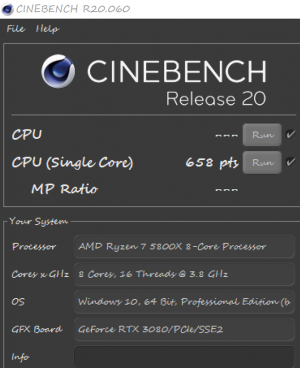

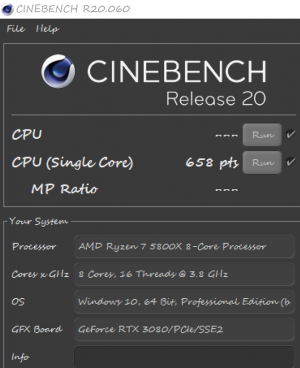

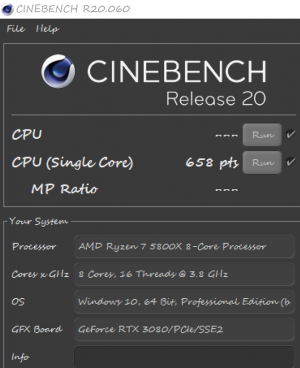

5800X all-core overclock, Cinebench R20: 4800 MHz

Multi-core score: 6330 @ 72.5 ℃ with 1.29 V

Too bad some people are having cooling problems; looks like I got lucky.

Added the single-thread (ST) score with the AMD optimizer.

Video of the run, if you like boring stuff.

♦ CPU - AMD 5800X with MasterLiquid Lite ML240L RGB AIO

♦ GPU - Nvidia RTX 3080

♦ RAM - G.Skill 32 GB DDR4

♦ Mobo - MSI MAG X570 TOMAHAWK (E7C84AMS v140)

♦ SSD - NVMe SSD 1 TB

♦ DSP - LG B9 65" 4K UHD HDR OLED, G-Sync over HDMI

♦ PSU - Antec High Current Pro 1200 W
