How much of an issue chroma compression will be at 4K 240Hz over DP 1.4a for gaming (as opposed to working with text), I guess we will see over the next few weeks from reviews that test it. It may end up being a non-issue for a long time, particularly if the monitor industry has to make it work given how much of the field will not have DP 2.0.


4090 Reviews are up.

- Thread starter vegeta535

- Start date

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

> There's also running 8K downsampled on a 4K monitor, which you can easily do with Nvidia DSR factors. And it does look better/sharper from the few times I've tried it on my own 4K monitor. It's not a huge difference, but it's definitely there. But my 3090 really chokes when doing it.
>
> And then for the purposes of VR, I'd want to see how 4K x2 (4K per eye) or any similar pixel total resolution (so about ~6K res) performs as well.

From what I can tell, DSR does not downsample from 8K resolution, which is 7680 x 4320 pixels. When I enabled it, I did not get the option for games to play at 7680x4320.

Now, sure, playing at 8K is totally niche. But with the 4090, it looks like it's possible to get 8K 60Hz. If you aren't playing at 4:4:4, it looks terrible.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,515

> Anyone figure which Manufacturer has a warranty that can compete with EVGA's Advanced RMA? Can't seem to find a good alternative. Love any Rec's

There really isn't any. That's why lots of us bought from EVGA.

> If you aren't playing at 4:4:4.....its looks terrible.

That's interesting and surprising. I thought it would matter more when you work or read text than when you game. For example, Sony released a PS5 unable to do 4:4:4 at 4K, and movies do not use 4:4:4 and look better than a video game for the most part.

https://www.rtings.com/tv/learn/chroma-subsampling

IN VIDEO GAMES

While some PC games that have a strong focus on text might suffer from using chroma subsampling, most of them are either designed with it in mind or implement it within the game engine. Most gamers should therefore not worry about it.

Color subsampling is a method of compression that greatly reduces file size and bandwidth requirements with practically no quality loss. Unless you are going to use your TV as a primary PC monitor where lots of text is going to be read, there shouldn't be a need to worry about it. It has no noticeable visual imperfections otherwise and allows you to trade for much better advantages such as 10-bit color depth and HDR. 4:2:0 is essential to modern distribution platforms,

And when going to 4:4:4 instead of 4:2:2 by dropping to 60fps on the PS5, many cannot see the difference in game at a regular sitting distance (it could be that PS5 video games are made to be played in 4:2:2 too).

I would have to take people's word for it, not being able to test, but the fact that Sony considered it enough of a non-issue to use only a 30 Gbps port makes me feel that, for most people sitting on a couch playing on a giant TV, it should not be an issue. On a 32-inch 4K 240Hz monitor, maybe it would be.

Or guru3d on it:

https://www.guru3d.com/articles_pages/asus_rog_swift_pg35vq_monitor_review,3.html

Is 4:2:2 chroma subsampling a bad thing?

That I can only answer with both yes and no. This form of color compression has been used for a long time already, in fact, if you ever watched a Bluray movie, it was color compressed. Your eyes will be hard-pressed to see the effect of color compression in movies and games. However, with very fine thin lines and fonts, you will notice it. For gaming, you'll probably never ever notice a difference (unless you know exactly what to look for in very specific scenes).
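For a rough sense of why subsampling (or DSC) comes up at 4K 240Hz at all, here is a back-of-the-envelope sketch in Python. It counts active pixel data only and ignores blanking, so real link requirements are somewhat higher. The 25.92 Gbit/s figure is the commonly cited DP 1.4a HBR3 payload rate (32.4 Gbit/s raw with 8b/10b encoding); that number and the function names are not from the posts above, so treat them as assumptions.

```python
# Rough, uncompressed video data rates for different chroma formats.
# Assumption: active pixels only; blanking and DSC are ignored.

# Average samples per pixel: one luma sample plus the two chroma
# samples, which are shared between pixels when subsampled.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}

# Assumed usable payload of DP 1.4a (HBR3, 4 lanes), in Gbit/s.
DP14_HBR3_PAYLOAD_GBPS = 25.92


def bits_per_pixel(chroma, bits_per_component):
    """Average bits needed per pixel for a given chroma format."""
    return SAMPLES_PER_PIXEL[chroma] * bits_per_component


def data_rate_gbps(width, height, refresh_hz, chroma, bits_per_component):
    """Uncompressed active-pixel data rate in Gbit/s."""
    bpp = bits_per_pixel(chroma, bits_per_component)
    return width * height * refresh_hz * bpp / 1e9


if __name__ == "__main__":
    for chroma in SAMPLES_PER_PIXEL:
        rate = data_rate_gbps(3840, 2160, 240, chroma, 10)
        fits = "fits" if rate <= DP14_HBR3_PAYLOAD_GBPS else "does not fit"
        print(f"4K 240Hz 10-bit {chroma}: {rate:.1f} Gbit/s ({fits} uncompressed)")
```

Under these assumptions, 4:2:2 needs two thirds of the 4:4:4 data rate and 4:2:0 needs half, which is the trade-off the quotes above are describing.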


Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

> That interesting and surprising, I thought it would be more when you work or read text than game, for example enough that Sony released a PS5 unabled to do 4:4:4 at 4k, movies does not use 4:4:4 and look better than a video game for the most part
>
> https://www.rtings.com/tv/learn/chroma-subsampling
>
> IN VIDEO GAMES
>
> While some PC games that have a strong focus on text might suffer from using chroma subsampling, most of them are either designed with it in mind or implement it within the game engine. Most gamers should therefore not worry about it.
>
> Color subsampling is a method of compression that greatly reduces file size and bandwidth requirements with practically no quality loss. Unless you are going to use your TV as a primary PC monitor where lots of text is going to be read, there shouldn't be a need to worry about it. It has no noticeable visual imperfections otherwise and allows you to trade for much better advantages such as 10-bit color depth and HDR. 4:2:0 is essential to modern distribution platforms,
>
> And when going to 4:4:4 instead of 4:2:2 by going down to 60fps on the PS5, many cannot see the difference in game at a regular sitting distance(it could be that PS5 video game are made to be played in 4:2:2 too).

The problem is some people can see a difference, including me. Also, when you pay $1600 for a video card, don't you expect to have no drawbacks in gaming? Like in the Linus video: there are 4K 240Hz PC monitors, and the 4090 has to lower its image quality to be able to play at 240Hz.

If I were paying $1600 and found that out, I would be pissed personally. Now, this is a very, very small market considering not many people play at 4K 120Hz+. But that's not the point, right? You pay for the best, you expect the best, not suboptimal specs.

> The problem is some people can see a difference, including me.

I am not surprised that someone can see it; what surprises me is that someone finds it terrible. That could be just internet hyperbole, but I do not remember anyone commenting that the PS5 looks terrible at 120 fps versus an Xbox Series X because one was in 4:4:4 and the other was not (or even any talk of seeing a difference at all).

I fully understand why someone who pays $1600 for a video card with a potential 6-7 years of lifetime (with AV1 encoding and decoding on board, etc.) would want DP 2.0 in there, even just on a "why not" basis. But I feel we will have to wait and see whether people in general are even able to tell the difference.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> The problem is some people can see a difference, including me. Also, when you pay $1600 for a video card dont you expect to have no drawbacks in gaming?

This is pretty much it. I have zero interest in this card or the displays it can power, but shouldn't a $1600+ GPU be built for the ultra high end?

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,515

> The problem is some people can see a difference, including me. Also, when you pay $1600 for a video card dont you expect to have no drawbacks in gaming? Like in the linus video.....There are 4k 240hz PC monitors....and the 4090 has to lower its image quality to be able to play at 240hz.
>
> If I am paying $1600 and finding that out I would be pissed personally. Now this is a very very small market considering not many people play at 4k 120hz+....But thats not the point right? You pay for the best you expect the best....not sub optimal specs.

That's kinda always the case though. That's why G-Sync/FreeSync are so nice: you can crank it up and not have to worry about hitting 240 fps, or just lower a few settings and easily get there.

> from what I can tell DSR does not down sample 8k resolution which is 7680 x 4320 pixels. When I enabled it I do not get the option for games to play at 7680x4320.
>
> Now sure playing at 8k it totally niche. But with the 4090, it looks like its possible to get 8k60hz. If you aren't playing at 4:4:4.....its looks terrible.

The only two games I've hopped on at all over the past few months: The Outer Worlds and Planetside 2 both will run 7680 x 4320 (with DSR enabled) if I select that resolution. I also remember running it in Alien Isolation some time ago as well. It definitely works: I get a slightly sharper image on my PG279UQ with a heavily reduced frame rate. I don't consider it necessary by any means as 4K without an AA already looks good enough on a 27'' size, but for the sake of benchmarks it is doable without 8K native.

I'm not sure why it doesn't work on your end.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

> The only two games I've hopped on at all over the past few months: The Outer Worlds and Planetside 2 both will run 7680 x 4320 (with DSR enabled) if I select that resolution. I also remember running it in Alien Isolation some time ago as well. It definitely works: I get a slightly sharper image on my PG279UQ with a heavily reduced frame rate. I don't consider it necessary by any means as 4K without an AA already looks good enough on a 27'' size, but for the sake of benchmarks it is doable without 8K native.
>
> I'm not sure why it doesn't work on your end.

I just checked and you are right. I was only using the DLDSR option and not the legacy option.
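The DSR vs. DLDSR difference above comes down to the scaling factor. Here is a small Python sketch, assuming DSR factors multiply the total pixel count (so each axis scales by the square root of the factor), and that DLDSR only offers 1.78x and 2.25x while legacy DSR goes up to 4.00x. The factor lists, the function names, and the rounding are assumptions for illustration; the driver may round intermediate resolutions differently.

```python
import math

# Assumed factor lists (pixel-count multipliers), not taken from the thread.
LEGACY_DSR_FACTORS = [1.20, 1.50, 1.78, 2.00, 2.25, 3.00, 4.00]
DLDSR_FACTORS = [1.78, 2.25]


def dsr_resolution(native_w, native_h, factor):
    """Render resolution when the factor is applied to the total pixel count."""
    axis_scale = math.sqrt(factor)
    return round(native_w * axis_scale), round(native_h * axis_scale)


def can_reach(native, target, factors):
    """True if any factor in the list yields the target render resolution."""
    return any(dsr_resolution(native[0], native[1], f) == target for f in factors)


if __name__ == "__main__":
    native_4k, target_8k = (3840, 2160), (7680, 4320)
    print("legacy DSR reaches 8K:", can_reach(native_4k, target_8k, LEGACY_DSR_FACTORS))
    print("DLDSR reaches 8K:", can_reach(native_4k, target_8k, DLDSR_FACTORS))
```

With these assumed lists, only the legacy 4.00x factor gets a 4K panel to 7680 x 4320, which would explain why the resolution did not show up with DLDSR alone.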

KickAssCop

[H]F Junkie

- Joined

- Mar 19, 2003

- Messages

- 8,322

Any reviews for ASUS TUF?

CAD4466HK

2[H]4U

- Joined

- Jul 24, 2008

- Messages

- 2,684

> Any reviews for ASUS TUF?

Never have watched these guys, but here ya go...

> Never have watched these guys, but here ya go...

Gear Seekers are the best, possibly one of THE best in the computer tech world.

Thatguybil

Limp Gawd

- Joined

- Jan 21, 2017

- Messages

- 173

Can anyone point me to the VESA DisplayPort 2.1 specs?

As far as I can tell, VESA has not published any proposed specifications for DP 2.1.

I am wondering if wires were crossed and HDMI 2.1 is being confused with DP 2.1.

Hopefully Navi31 will have DP2.0.


> Going to need to be putting your computer on a 20A circuit pretty soon at this rate.

Or 240V. Efficiency is slightly better there too.

RJ1892

[H]ard|Gawd

- Joined

- Apr 3, 2014

- Messages

- 1,347

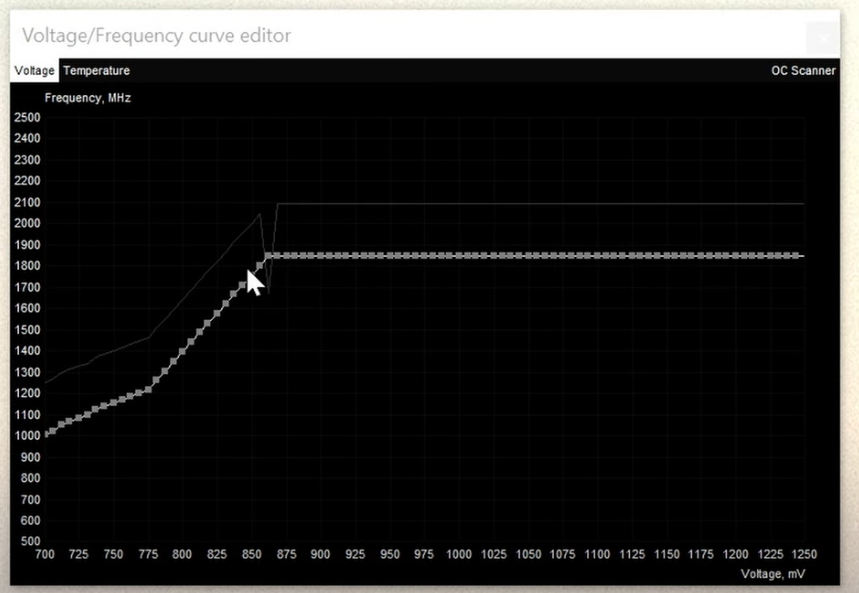

V/F curve undervolting might be dead. This confirms what der8auer was saying in his video.

CAD4466HK

2[H]4U

- Joined

- Jul 24, 2008

- Messages

- 2,684

Interesting that having only 3 plugs connected with the 12VHPWR adapter gives you 100% power target, vs. having all 4 which gives you 133% with the 4090FE.
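One way to read those two numbers, sketched in Python: if each 8-pin plug on the adapter is budgeted at 150 W and the card's default power limit is 450 W, then three plugs cover exactly 100% and four cover about 133%. Both wattages and the function name are assumptions added here, slot power is ignored, and this is only arithmetic that matches the observation, not a description of how the card actually detects the plugs.

```python
# Assumptions (not from the post): 450 W default power limit for the
# 4090 FE and a 150 W budget per 8-pin plug feeding the 12VHPWR adapter.
DEFAULT_POWER_LIMIT_W = 450
WATTS_PER_8PIN = 150


def max_power_target_percent(plugs_connected):
    """Highest power target (in %) the connected plugs could cover."""
    available_w = plugs_connected * WATTS_PER_8PIN
    return int(100 * available_w / DEFAULT_POWER_LIMIT_W)


if __name__ == "__main__":
    for plugs in (3, 4):
        print(f"{plugs} plugs -> up to {max_power_target_percent(plugs)}% power target")
```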


> V/F curve undervolting might be dead. This confirms what der8auer was saying in his video.

Well that is good to know.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

> Interesting that having only 3 plugs connected with the 12VHPWR adapter gives you 100% power target, vs. having all 4 which gives you 133% with the 4090FE.

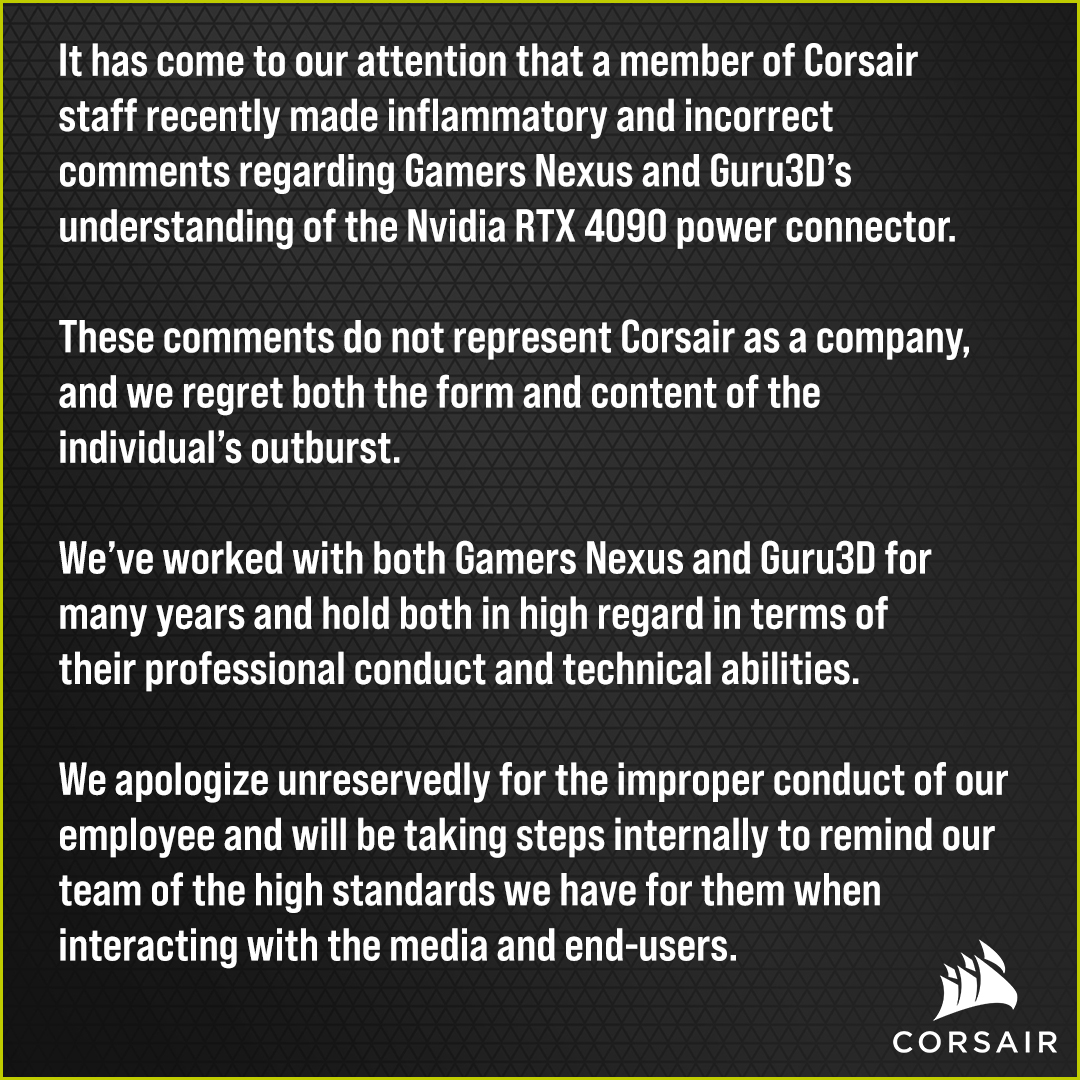

I think it's funny Corsair tried to call out Gamers Nexus for spreading information that was correct. Even Nvidia made Corsair look bad, AND Gamers Nexus' own evidence in the video proved Corsair wrong.

This is why we should all praise independent reporting.

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,565

This card makes me feel like...


TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

> Someone got fired.

It was jonnyguru and he's not fired.

> It was jonnyguru and he's not fired.

Just jonny, he doesn’t get the guru title any longer.

> It was jonnyguru and he's not fired.
>
> Just jonny, he doesn’t get the guru title any longer.

jonny said that? Wow ... (I still remember, as many others do here, when his PSU site was THE site to go, for PSU reviews -- he seemed [generally] a lot more objective then).

Given what I've heard about Corsair's cashflow issues/overexpansion recently, it makes things really interesting, in context.

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

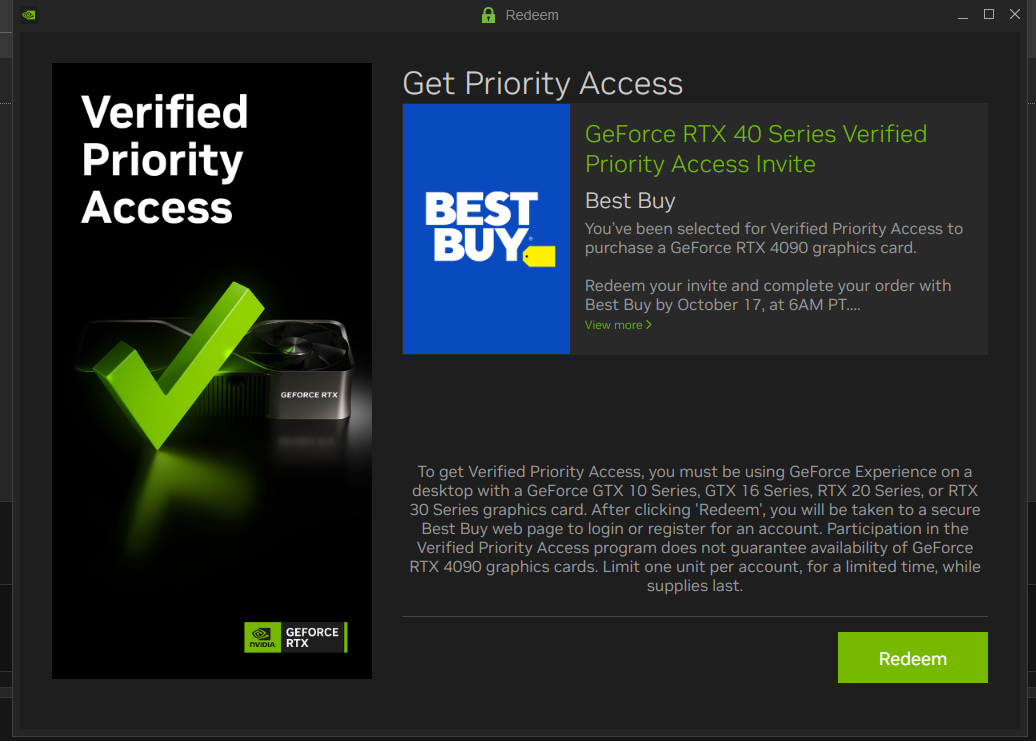

Late posting this, Nvidia is sending out FE purchase emails to random (?) GFE members.

RJ1892

[H]ard|Gawd

- Joined

- Apr 3, 2014

- Messages

- 1,347

> Late posting this, Nvidia is sending out FE purchase emails to random (?) GFE members.

Did you sign up to be notified when it's in stock?

> DSR does not down sample 8k resolution which is 7680 x 4320 pixels. When I enabled it I do not get the option for games to play at 7680x4320.

It works in most games but not all. It gives you the option to select 8K in the resolution menu. You can scale it on the GPU or your monitor, record video at 8K 60fps or downsampled to 4K, and take 8K snapshots. When I had an 8K screen I could go to 16K.

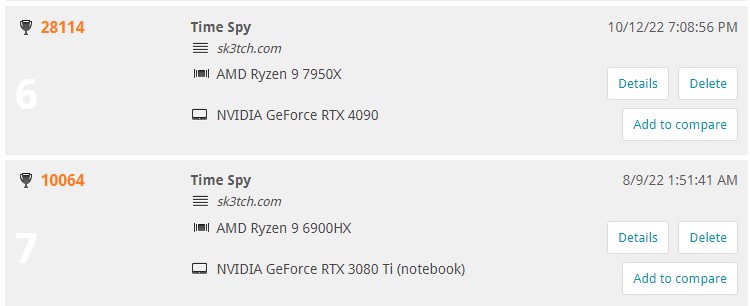


> Crazy looks like RTX 3090 Ti, for example, has gone up in price on Amazon. Response to demand for RTX 4090?

I think this was the plan from the get go.

> V/F curve undervolting might be dead. This confirms what der8auer was saying in his video.

But he's using the technique that tanks effective clocks even on the 30 series. It would be interesting to see how it behaves if you just flatten the curve at some point but keep the original curve to the left, like I showed a long time ago.

From video,

Alternate method,

https://hardforum.com/threads/rtx-3000-series-undervolt-discussion.2003050/page-3#post-1044857255

WorldExclusive

[H]F Junkie

- Joined

- Apr 26, 2009

- Messages

- 11,548

> Crazy looks like RTX 3090 Ti, for example, has gone up in price on Amazon. Response to demand for RTX 4090?

No, Amazon is sold out of 3090 Tis until November. So third parties are looking for suckers.

The ones that are in stock by Amazon fluctuate in price throughout the day.

I choose not to buy one because AMD will surely surpass it, by 40% or even more, and the 4080s will probably land around $1000-1200, making 3090 Ti prices dip even more.

I'm game for a $650 3090 or $800 3090Ti, hold onto it until the 4090Ti is released.

> Crazy looks like RTX 3090 Ti, for example, has gone up in price on Amazon. Response to demand for RTX 4090?

It could be in part the announced pricing of the 4080 and its early benchmark numbers at work.

> Corsair issued an apology to GN and Guru3D.
>
> https://twitter.com/CORSAIR/status/1580649952082268160

Woah what did I miss? What was said?

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

> Woah what did I miss? What was said?

tl;dr Jonnyguru said some wrong things about the 4090 adapter cable, and was pretty mean about it.

> For those who've gotten a 4090 what's been your experience with coil whine? It's not horrible but it's more noticeable than any card I've had in a number of generations. Just something unavoidable with the power its pulling?

Which one did you get?

> Which one did you get?

I have the Asus TUF OC edition. Just curious what others' experience has been.
