Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017

- Messages: 430

> I won't argue the motion clarity, though I'd say that OLED is there if not nearly there.

Definitely not -- you still have refreshtime (frametime) persistence so you can't get less than (1/240sec) display motion blur on OLED.

During fast scrolls of 4000 pixels/sec on 4K OLED, 4ms of persistence translates to 16 pixels of display motion blur. That's enough to fuzz-up tiny details like fine text on walls or ultrafine-detail graphics.

Blur Busters Law says 1ms of pixel visibility time translates to 1 pixel of motion blur per 1000 pixels/sec, which is exactly true when GtG=0 (aka squarewave strobing, or squarewave refresh cycle transitions), and that's why OLEDs follow Blur Busters Law much more closely than LCDs do. However, the formula is still an excellent approximation of the motion blur you see when GtG is somewhat under half a refresh cycle, so Blur Busters Law works reasonably well (though more as an estimate) on LCDs, while the motion blur math becomes virtually perfect on sample and hold OLEDs.
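To sanity-check the arithmetic, here's a minimal Python sketch of Blur Busters Law, assuming GtG=0 and framerate=Hz. The function name `motion_blur_px` is just made up for illustration.

```python
def motion_blur_px(persistence_ms: float, speed_px_per_sec: float) -> float:
    """Estimate display motion blur in pixels (Blur Busters Law).

    1 ms of pixel visibility time = 1 pixel of blur per 1000 pixels/sec.
    Exact only at GtG=0; treat it as an estimate on LCDs.
    """
    return persistence_ms * speed_px_per_sec / 1000.0


# 4 ms of persistence during a 4000 pixels/sec scroll
print(motion_blur_px(4.0, 4000.0))  # 16.0

# 240 Hz sample and hold: persistence is one full refresh, 1000/240 ms
print(round(motion_blur_px(1000.0 / 240.0, 4000.0), 1))  # 16.7
```

The second call shows why the 16-pixel figure is a rounded one: a full 1/240sec refresh is about 4.17ms, not exactly 4ms.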

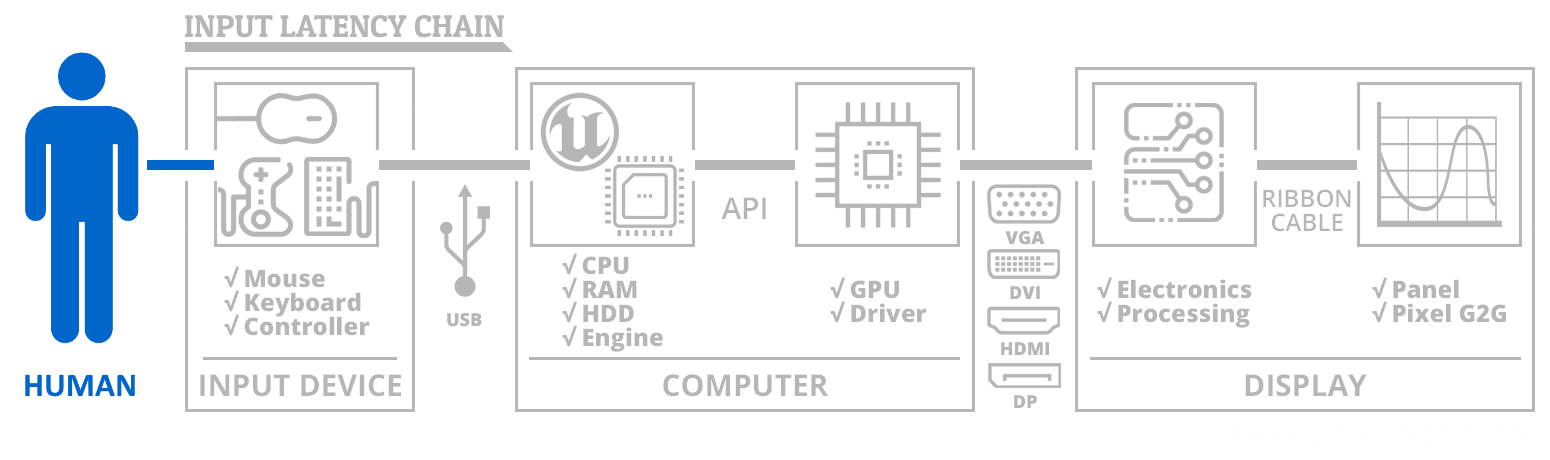

At framerate=Hz:

Motion blur = pixel visibility time.

Motion blur = pulsetime on strobed.

Motion blur = refreshtime on nonstrobed.
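The three rules above can be sketched as one small Python helper. This is only an illustration assuming framerate=Hz and GtG=0; `persistence_ms` and its `strobe_pulse_ms` parameter are hypothetical names, not from any real tool.

```python
from typing import Optional


def persistence_ms(refresh_hz: float,
                   strobe_pulse_ms: Optional[float] = None) -> float:
    """Pixel visibility time per frame, assuming framerate=Hz and GtG=0."""
    if strobe_pulse_ms is not None:
        # Strobed (BFI / backlight strobing): blur follows the flash length.
        return strobe_pulse_ms
    # Nonstrobed sample and hold: blur follows the full refresh time.
    return 1000.0 / refresh_hz


print(round(persistence_ms(240.0), 2))             # 4.17 (nonstrobed 240 Hz)
print(persistence_ms(240.0, strobe_pulse_ms=1.0))  # 1.0  (strobed, 1 ms flash)
```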

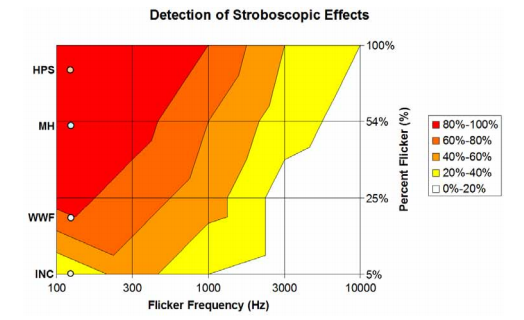

For VRR, refreshtime=frametime, which is why you see increasing/decreasing amounts of motion blur in VRR framerate-ramping animations (e.g. www.testufo.com/vrr). That is also a good demo of the stutter-to-blur continuum, where the stutter blends to blur at the moment the stutter-vibration goes beyond your flicker fusion threshold. (Regular low frame rate stutter is a sample and hold effect, just like motion blur.)

Also, 1ms MPRT on a CRT is easy, but to achieve 1ms MPRT on sample and hold, you need (1 second / MPRT) = 1000fps at 1000Hz at GtG=0 in order to match the display motion blur of a CRT tube.
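The (1 second / MPRT) relationship is easy to tabulate. A rough sketch, assuming an ideal GtG=0 sample and hold display running at framerate=Hz; `required_hz` is a hypothetical helper name.

```python
def required_hz(target_mprt_ms: float) -> float:
    """Refresh rate (and matching frame rate) needed to reach a target MPRT
    on ideal sample and hold, i.e. no strobing and GtG=0."""
    return 1000.0 / target_mprt_ms


for mprt in (4.0, 2.0, 1.0):
    hz = required_hz(mprt)
    print(f"{mprt} ms MPRT -> {hz:.0f} fps at {hz:.0f} Hz")
```

For a 1ms target this gives the 1000fps at 1000Hz figure quoted above.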

Remember MPRT is not the same thing as LCD GtG.

Even GtG=0 can still have display motion blur, since MPRT(0%->100%) is throttled by MAX(frametime,refreshtime) on sample and hold.
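Here's the MAX(frametime,refreshtime) limit as a small Python sketch, assuming GtG=0 and no strobing. The function name `sample_and_hold_mprt_ms` is my own for illustration.

```python
def sample_and_hold_mprt_ms(frame_rate_fps: float, refresh_hz: float) -> float:
    """Lower bound on MPRT(0%->100%) for sample and hold at GtG=0.

    Each frame stays visible for whichever is longer: the frame time
    or the refresh time.
    """
    frametime_ms = 1000.0 / frame_rate_fps
    refreshtime_ms = 1000.0 / refresh_hz
    return max(frametime_ms, refreshtime_ms)


# 60 fps content on a 240 Hz panel: limited by the frame time
print(round(sample_and_hold_mprt_ms(60.0, 240.0), 2))   # 16.67

# 240 fps on the same panel: limited by the refresh time
print(round(sample_and_hold_mprt_ms(240.0, 240.0), 2))  # 4.17
```

Running the frame rate above the refresh rate doesn't help in this model, since the refresh time then becomes the limit.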

You can view www.testufo.com/eyetracking on any non-BFI OLED at any refresh rate, to confirm this -- see for yourself -- because the optical illusion is ONLY possible due to display motion blur being throttled by frametime (which is then limited by the minimum refreshtime).

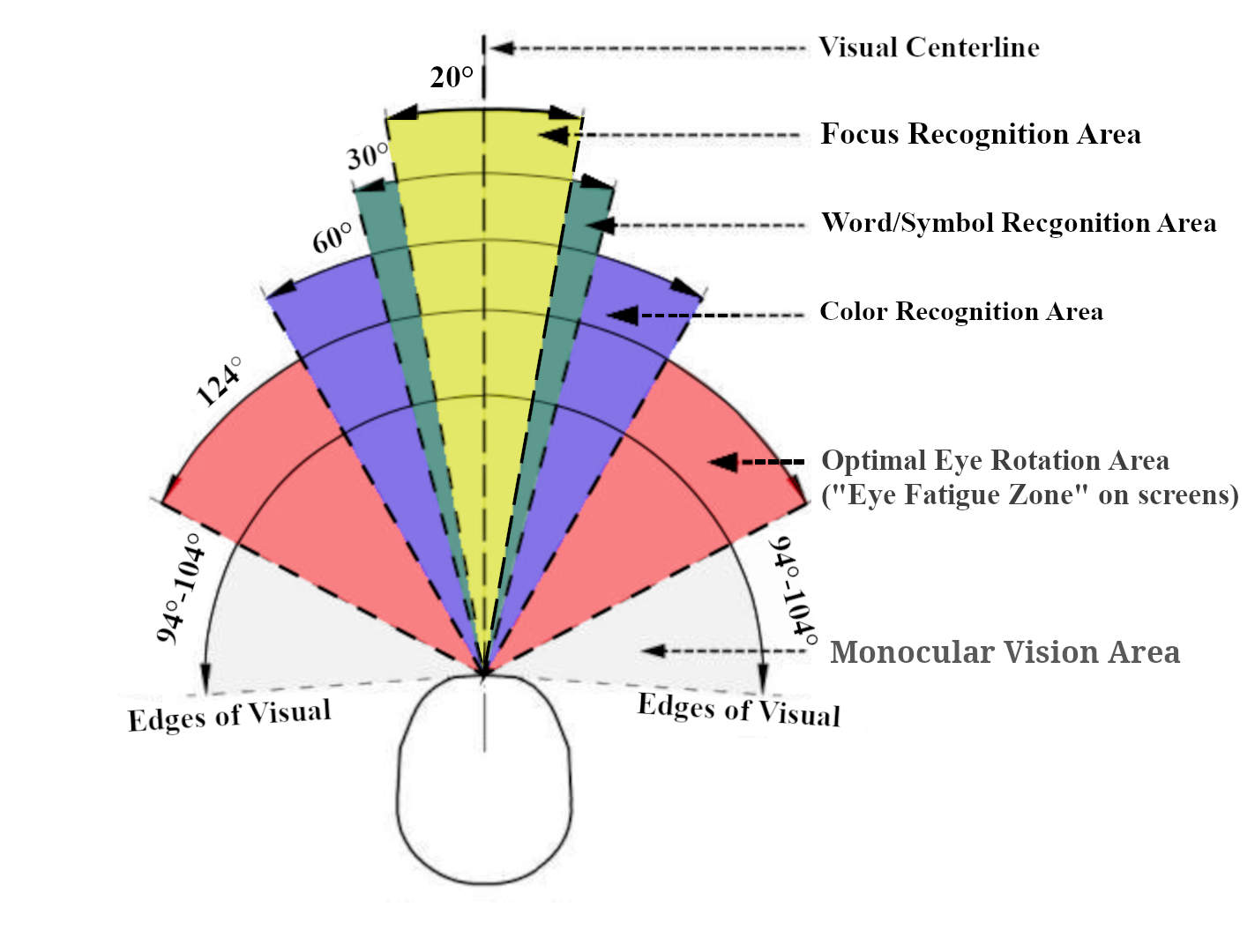

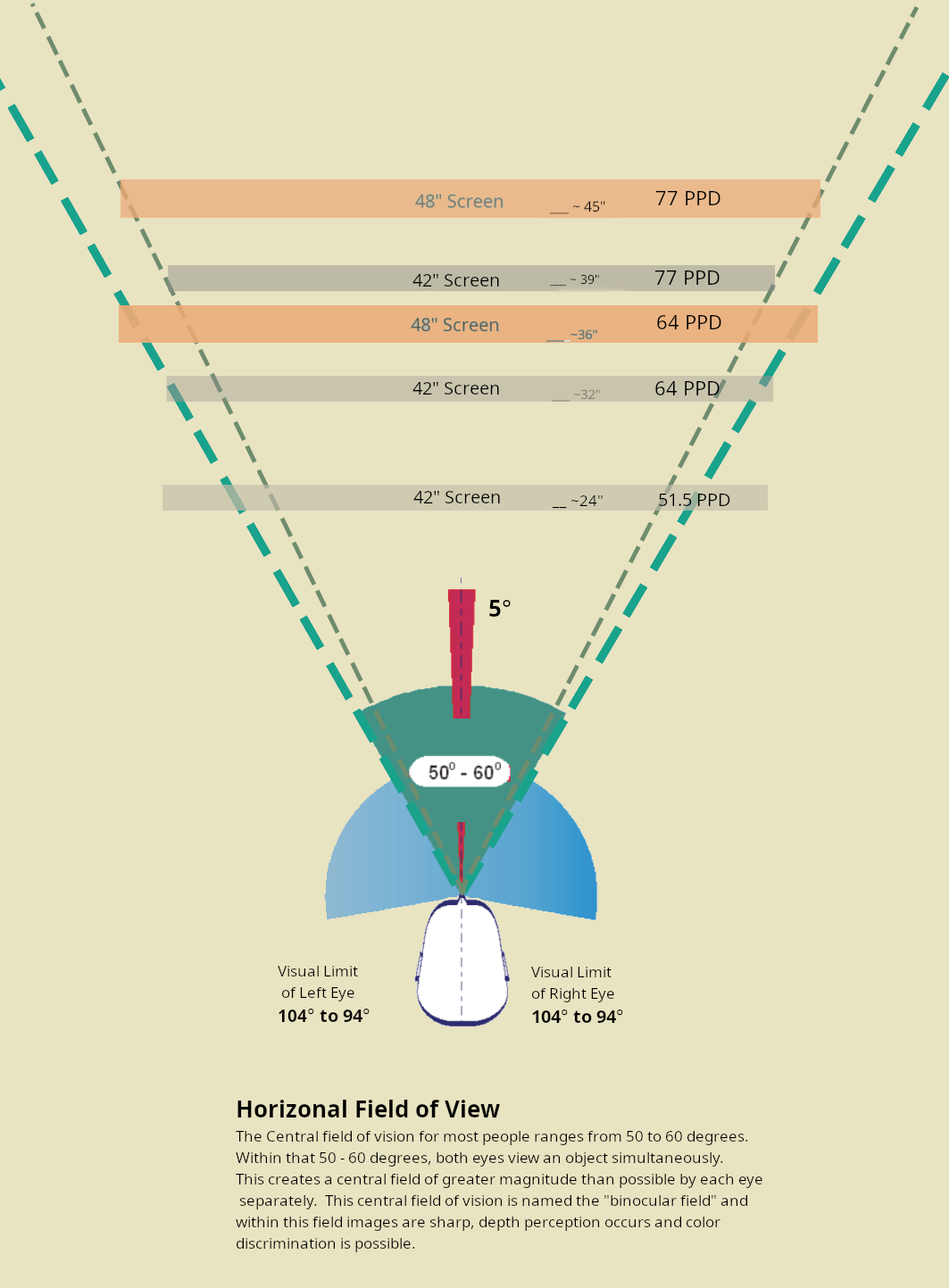

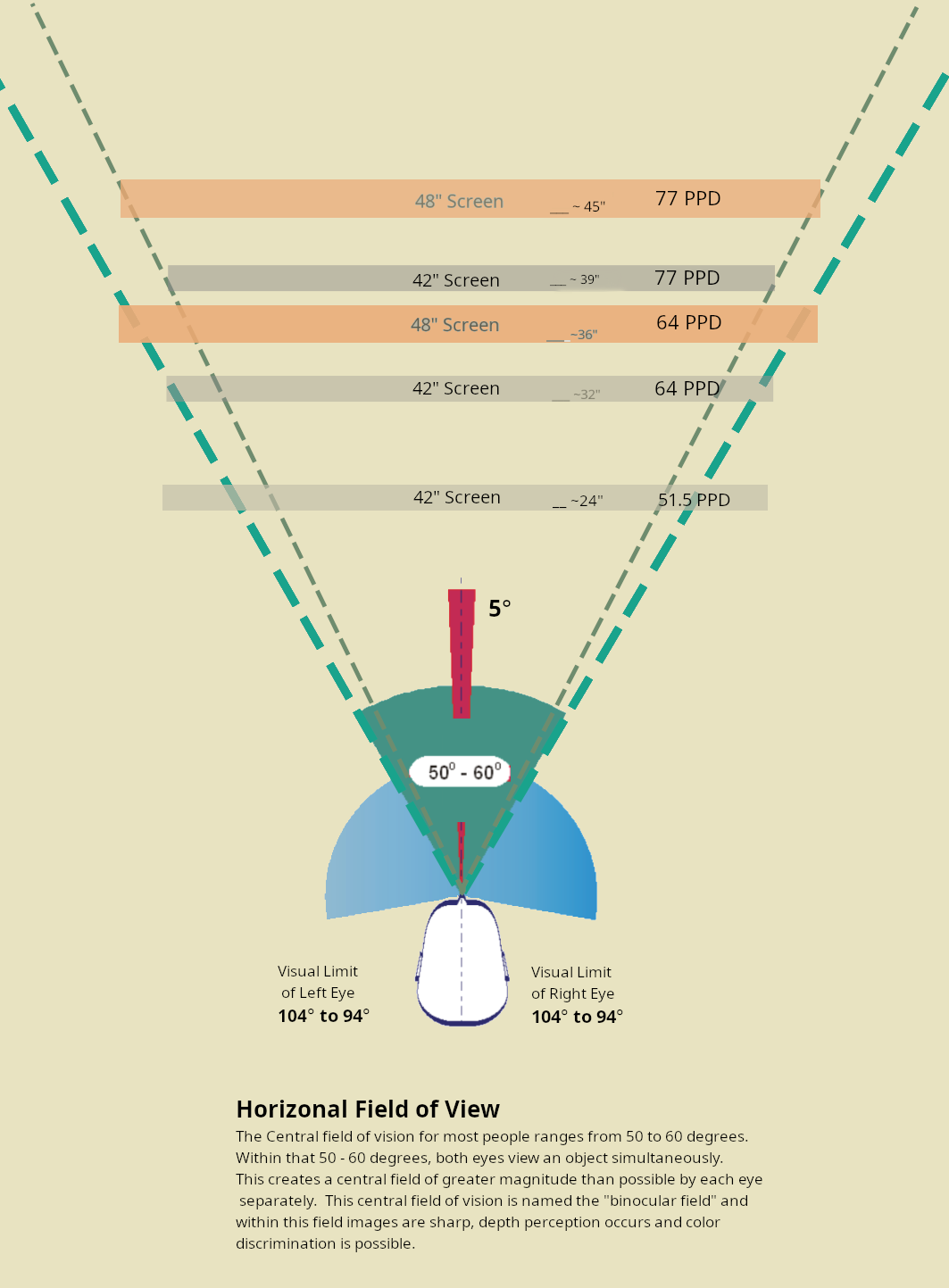

At high resolutions and high angular resolution / high ppi, motion blur is far easier to see than it was on an SXGA CRT tube, because it shows up as a difference between static resolution and motion resolution. A sharper static resolution (more pixels) competing against an unchanged motion resolution means motion blur is more easily seen when resolutions and game details are higher, for the same physical motion speeds (e.g. in screenwidths/sec on a similar-size display).

Lots of variables are involved: screen resolution, human eye resolution, human eye maximum tracking speed, and how long a moving object stays on the screen (enough time to identify motion artifacts such as motion blur or stroboscopics). In an extreme case (e.g. 16K 180-degree FOV, like for a theoretical sample and hold VR headset, since real life does not flicker/strobe) -- retina refresh rate is not reached until >20,000fps at >20,000Hz, due to the huge number of pixels and plenty of time for the eyes to compare static resolution against motion resolution.

For desktop displays, the ballpark of 1000-4000Hz at framerate=Hz can easily be the territory of retina refresh rate at currently-used computer resolutions, since the FOV is much smaller, which means ultrafast-moving objects disappear off the edge of the screen too quickly for you to identify whether the object has motion blur. So retina Hz is tied to the human-resolvable resolution over the width of the display, linked to your maximum eye-tracking speed and to how much time the moving object stays on screen, which is what lets you identify whether the moving object differs in resolution from a stationary object.

So, today we're not even matching a CRT with a sample and hold OLED.... yet.

So:

(A) Ultrashort-flash BFI blur reduction needs to be added, OR

(B) Enough brute framerate-based blur reduction needs to be added.

For more information, click the Research tab of the Blur Busters Website. I'm already cited in 25+ peer reviewed papers, so I've been the authoritative source on display motion blur.
