Nice! FD Trinitron too. Should be pretty good. Its horizontal and vertical refresh rates are identical to the GDM-FW900's.

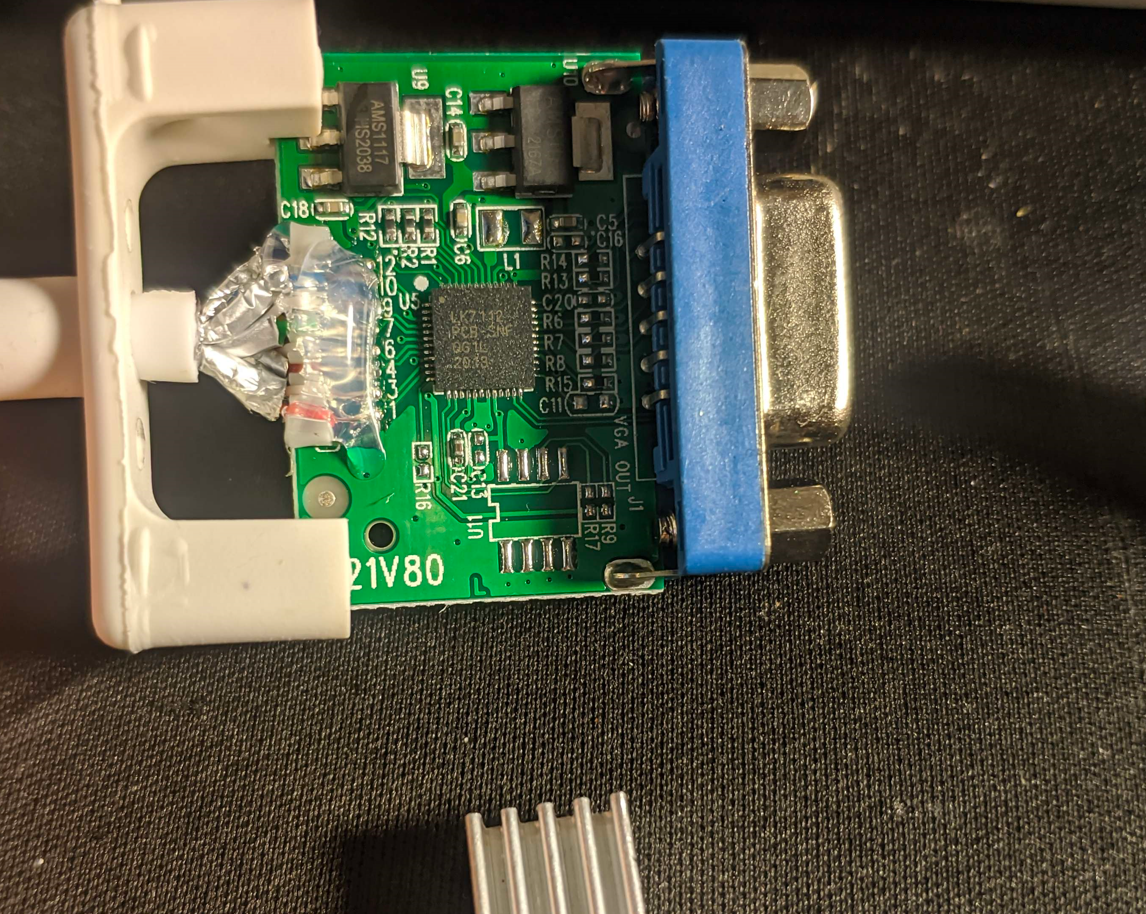

One thing is that while a lot of the 20" monitors were spec'd with 1600x1200 as the max, that was only because they were never tested at anything higher. That's how I found out my LaCie 22" could do 2560x1600 over VGA. From what I remember, most 20"+ monitors could do 2048x1536.
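If you want to sanity-check whether a CRT can sync to a resolution above its spec sheet max, a rough back-of-the-envelope is to divide the max horizontal scan rate by the total number of scanlines. This is only a sketch: the function name is made up, the 121 kHz figure is my assumption for an FW900-class tube (check your own monitor's manual), and the 5% vertical blanking overhead is a ballpark, not an exact timing. It also ignores pixel clock and video bandwidth limits, which can still make the picture soft even when the monitor syncs.

```python
def max_refresh_hz(h_scan_max_khz, active_lines, v_blank_overhead=0.05):
    """Estimate the highest vertical refresh a CRT can reach at a given
    number of visible lines, limited only by its horizontal scan rate.

    h_scan_max_khz   -- max horizontal scan frequency from the spec sheet (kHz)
    active_lines     -- visible vertical resolution, e.g. 1536 for 2048x1536
    v_blank_overhead -- assumed fraction of extra lines spent in vertical
                        blanking (rough guess; real timings vary)
    """
    total_lines = active_lines * (1 + v_blank_overhead)
    return h_scan_max_khz * 1000 / total_lines


if __name__ == "__main__":
    # 121 kHz is an assumed max horizontal scan rate, not a measured value.
    for lines in (1200, 1536, 1600):
        print(lines, round(max_refresh_hz(121, lines), 1))
```

With those assumptions, 1536 lines comes out at roughly 75 Hz and 1600 lines at roughly 72 Hz, so higher-than-spec modes are plausible on paper as long as you accept a lower refresh rate.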
