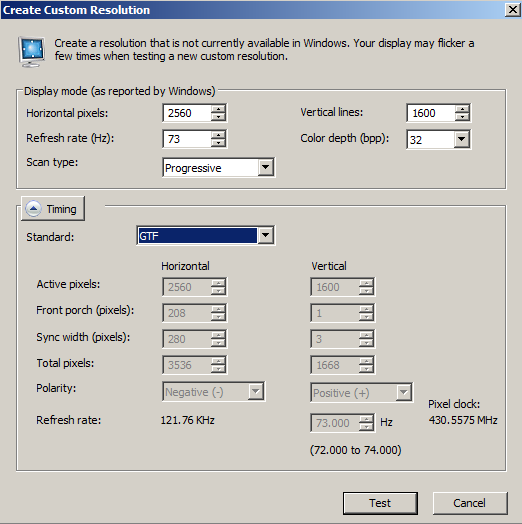

Sometimes, and I don't know why, Windows detects my FW900 as a Generic Non-PnP Monitor. I restart Windows and it detects it as GDM-FW900 again, but sometimes even after restarting it keeps detecting it as generic. When that happened the first time, I lost all the resolutions in CRU that were set up for the FW900.

A workaround for this was to export all the GDM-FW900 custom resolutions from CRU to a file using the "Export" button, and when Windows detected the monitor as generic, I imported the file. So now it doesn't matter whether Windows detects the monitor as FW900 or generic, since I just use the same custom resolutions created for the FW900 on the "generic" monitor, and the problem is solved.

Very strange with a native DAC. Do you have another monitor connected to the graphics card?

I had the same problem in the past, but only with a secondary Samsung monitor connected through an adapter and never with the FW900. I thought it was an AMD driver bug.

Initially I used the same solution as you with CRU; the copy-paste buttons were very useful too.

Then I realized that Windows sometimes created new Non-PnP Monitor registry keys, so I had to redo the same thing in CRU for each new key.

I solved it by exporting the EDID to an INF file and installing the Non-PnP Monitor with that.

Every time Windows detects that monitor as a new Non-PnP monitor, that INF is used on the fly.
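Before building an INF from an exported EDID, it can help to sanity-check that the export really is a valid EDID for the monitor you expect. Here is a rough Python sketch of that check, assuming the EDID was also saved as a raw binary file. The function name `parse_edid_base_block` and the file path passed on the command line are made up for illustration; only the 128-byte base block layout (fixed header, manufacturer ID in bytes 8-9, product code in bytes 10-11, checksum in the last byte) comes from the EDID standard.

```python
import sys

# Fixed 8-byte signature that starts every EDID base block.
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def parse_edid_base_block(edid: bytes) -> dict:
    """Decode a few identifying fields from a raw EDID dump (hypothetical helper)."""
    if len(edid) < 128:
        raise ValueError("EDID base block must be at least 128 bytes")
    block = edid[:128]
    if block[:8] != EDID_HEADER:
        raise ValueError("missing EDID header signature")

    # Manufacturer ID: bytes 8-9, big-endian, three 5-bit letters (1 = 'A').
    raw = (block[8] << 8) | block[9]
    manufacturer = "".join(
        chr(((raw >> shift) & 0x1F) + ord("A") - 1) for shift in (10, 5, 0)
    )

    # Product code: bytes 10-11, little-endian.
    product_code = block[10] | (block[11] << 8)

    # All 128 bytes of the block must sum to 0 modulo 256.
    checksum_ok = sum(block) % 256 == 0

    return {
        "manufacturer": manufacturer,
        "product_code": product_code,
        "checksum_ok": checksum_ok,
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_edid.py <path to raw EDID dump>
    with open(sys.argv[1], "rb") as f:
        print(parse_edid_base_block(f.read()))
```

If the checksum comes back bad or the manufacturer ID is not what you expect (Sony's registered ID is SNY), the export is probably not the EDID you meant to install.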

I stopped using this method with the Radeon Crimson driver, because stupid, unbelievable things happen with that configuration.
