
2019 BOINC Pentathlon

- Thread starter pututu


pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

In the last WCG FB sprint, I tested the bunker technique and it still works, though I did screw up initially. Let me revisit this.

pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

On the GPU side, there are three GPU projects to be considered for one of the five events.

Collatz - quorum of 1. We have a good chance to possibly win a medal. Nvidia cards do very well.

Einstein - quorum of 2. Bunkering in advance will help. AMD cards such as the Radeon7 and Vega 64 perform very well. The majority of top computers have AMD cards, but Nvidia cards can still put up a fight.

SETI - quorum of 2. Very long due date: last time I checked, a task downloaded today would be due by May 31st. The special Linux GPU apps perform the best, and Nvidia is king here. You need to set up multiple clients or modify the coproc_info.xml file. Note: for the special Linux GPU apps, I'm unable to do this with coproc_info.xml because there is no nvidia tag section. Does anyone have any idea? See my coproc_info.xml for SETI on Linux below.


```xml
<coprocs>
    <have_cuda>1</have_cuda>
    <cuda_version>10010</cuda_version>
    <coproc_cuda>
        <count>1</count>
        <name>GeForce GTX 1080</name>
        <available_ram>4160749568.000000</available_ram>
        <have_cuda>1</have_cuda>
        <have_opencl>0</have_opencl>
        <peak_flops>9264640000000.000000</peak_flops>
        <cudaVersion>10010</cudaVersion>
        <drvVersion>41856</drvVersion>
        <totalGlobalMem>4294967295.000000</totalGlobalMem>
        <sharedMemPerBlock>49152.000000</sharedMemPerBlock>
        <regsPerBlock>65536</regsPerBlock>
        <warpSize>32</warpSize>
        <memPitch>2147483647.000000</memPitch>
        <maxThreadsPerBlock>1024</maxThreadsPerBlock>
        <maxThreadsDim>1024 1024 64</maxThreadsDim>
        <maxGridSize>2147483647 65535 65535</maxGridSize>
        <clockRate>1809500</clockRate>
        <totalConstMem>65536.000000</totalConstMem>
        <major>6</major>
        <minor>1</minor>
        <textureAlignment>512.000000</textureAlignment>
        <deviceOverlap>1</deviceOverlap>
        <multiProcessorCount>20</multiProcessorCount>
        <pci_info>
            <bus_id>3</bus_id>
            <device_id>0</device_id>
            <domain_id>0</domain_id>
        </pci_info>
    </coproc_cuda>
    <warning>NVIDIA library reports 1 GPU</warning>
    <warning>ATI: libaticalrt.so: cannot open shared object file: No such file or directory</warning>
    <warning>OpenCL: libOpenCL.so.1: cannot open shared object file: No such file or directory</warning>
</coprocs>
```
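On the "no nvidia tag" question: in the dump above, the NVIDIA details sit under `<coproc_cuda>` rather than under a tag named `nvidia`. Here is a minimal read-only sketch that pulls the count, name and peak FLOPS out of that block. It assumes the file parses as a single `<coprocs>` document like the one shown, and the helper name `cuda_summary` is hypothetical. It does not tell you which edits, if any, the special SETI app will honour.

```python
import xml.etree.ElementTree as ET


def cuda_summary(xml_text: str) -> dict:
    """Return count/name/peak_flops from the <coproc_cuda> block, or {} if there is none."""
    root = ET.fromstring(xml_text)  # root element is <coprocs>
    cuda = root.find("coproc_cuda")  # NVIDIA data lives here, not under an "nvidia" tag
    if cuda is None:
        return {}
    return {
        "count": int(cuda.findtext("count", default="0")),
        "name": cuda.findtext("name", default=""),
        "peak_flops": float(cuda.findtext("peak_flops", default="0")),
    }
```

For example, calling `cuda_summary(open("coproc_info.xml").read())` on the file above should report a count of 1 and the name GeForce GTX 1080.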


Einstein got selected in 2017 for Pentathlon. Collatz and SETI were not selected over the past three pentathlons (2016-2018). My gut feeling is that either one of these two may be ripe for selection.

Gilthanis

[H]ard|DCer of the Year - 2014

- Joined: Jan 29, 2006
- Messages: 8,729

I don't think SETI has ever been in the Pentathlon.

tayunz

[H]ard DCoTM October 2018

- Joined: Sep 27, 2004
- Messages: 1,343

My vote is Einstein for obvious reasons, but I can handle Collatz if needed.

Gilthanis

[H]ard|DCer of the Year - 2014

- Joined: Jan 29, 2006
- Messages: 8,729

OK... we need everyone to post their "votes" for the 4 projects I need to submit when registering in the public forum thread. That way if anyone questions my numbers, they can read them openly without needing to be in the "secret area".

In prep for the Pentathlon, there are a few things everyone can check off the list if they can find the time.


- Linux trounces Windows on some projects (e.g. WCG) and has apps that are unavailable in Windows (e.g. LHC Native Atlas). If your crunching rigs are not already under Linux, consider switching or, if that's not practical, consider a dual boot. There are multiple flavours of Linux ... I use Mint, which is Debian/Ubuntu based and fairly close to Windows in its simplicity and GUI. I haven't used it, but there's what looks like a useful TAAT thread on Mint here.

- Multiple instances of BOINC are easy to set up and are a godsend for bunkering. I used this guide which, being by our favourite cockwomble, mmonnin, I am reliably informed was plagiarised from some earlier Gilthanis guides. Subsequent to this, I think motqalden may have enhanced this approach in the name of Xansons hegemony.

- Virtualbox is a must for 2 of the potential Pent projects, LHC and Cosmology. There are standard apps on both but they are both crap for credits and will quickly be hoovered up by all those without VBox installed. Don't be one of those bleating during the competition about how VBox doesn't work and what a piece of shit it is (no matter how true). Best to set it up and test it beforehand.

- Another 2 of the potential projects have optimised apps. I think everyone is familiar with the Rakesearch one following our recent excruciating sprint event. The less obvious one is Seti, which can be found here. I think it's Linux only, so yeah, another good reason to dual boot. I'm dreading Seti getting picked for the GPU event because, unless I'm mistaken, both our GPU dreadnoughts, atp1916 and RFGuy_KCCO, are substantially using Windows, which is an order of magnitude slower than the Linux optimised app.

- Each new user gets a $300 free credit for Google Cloud Platform (an AWS alternative) here. There is a 24-thread limit when you first sign up, although it was quick and easy for me to get this raised to 1000 threads. Their 64 vCPU medium-memory spot/interruptible instance costs 48c an hour, which makes it possible to run 2 of these, free gratis, throughout the Pentathlon. It's almost like having 2 x 2990WXs on loan for 2 weeks ... what's not to like?

- Some of the project deadlines will soon start to overlap the Pent timeline. Seti already does so I'm going to start bunkering that as soon as I get the optimised app set up.
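A quick sanity check on the GCP budget, using only the figures quoted above ($300 credit, 48c an hour per 64 vCPU spot instance, 2 instances). It ignores disk, network egress and any price changes, all of which are assumptions worth checking in the console:

```python
def hours_covered(credit_usd: float, hourly_rate_usd: float, instances: int) -> float:
    """Hours that `instances` VMs at `hourly_rate_usd` each can run before the credit runs out."""
    return credit_usd / (hourly_rate_usd * instances)


hours = hours_covered(300.0, 0.48, 2)
print(f"{hours:.1f} hours = {hours / 24:.1f} days")  # prints "312.5 hours = 13.0 days"
```

So two instances running flat out use up the credit in roughly 13 days; whether that covers the whole event depends on its length and on any extra charges.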

Nah, but we will have fun though

pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

I tested SETI a few weeks ago and I'm using the V097.b2 version, which takes up 1 core. There is a V098.b1 that I haven't tried yet, which is faster than V097.b2 as reported here and here.

Is it posted in the forum where to get the V098 version? Maybe I missed reading it.

I'm testing the WCG bunker and it looks OK.


The link within my post leads to the SETI post with the v098 version. The Nvidia-specific file within the download that you're looking for is setiathome_x41p_V0.98b1_x86_64-pc-linux-gnu_cuda101, which together with the app_info.xml file (once tailored) will work with the 418 drivers you and I installed for Numberfields.

Edit: The direct link is http://www.arkayn.us/lunatics/BOINC.7z


Gilthanis

[H]ard|DCer of the Year - 2014

- Joined: Jan 29, 2006
- Messages: 8,729

The vast majority of my contributions will be CPU based, with a couple of nVidia 970s. I'm all Windows but may be able to fire up a couple of quad-core boxes with Linux. Do you by chance have a guide for setting up the Google instances? I think that would go a long way. Also, it would be nice to have a good AWS guide pinned in the forums too.

Gilthanis

[H]ard|DCer of the Year - 2014

- Joined: Jan 29, 2006
- Messages: 8,729

Just a heads up: I have encountered various Windows machines having trouble with VirtualBox projects recently that didn't use to have problems. Work units just stop and don't recover. I found a post over at RNA World confirming it is a VBox 6+ bug. I plan on downgrading what I can tomorrow. Hope this helps others plan their boxes.

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

What is the highest-“paying” WCG subproject, in case WCG is in?

pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

I think OZ, running under Linux. I've been running it recently.

Gilthanis

[H]ard|DCer of the Year - 2014

- Joined: Jan 29, 2006
- Messages: 8,729

doesn't matter... WCG is in and it will be a pre-determined sub project that will be announced.

- This year's Marathon takes place at a subproject of the World Community Grid chosen by the project administrators. Hence, the World Community Grid and its subprojects are not available for the other disciplines.

pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

My random guess: MIP may be chosen since it is only 14% complete. MIP runs well in Windows.

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

Oops, sorry. Proof that it's an advantage to be able to read.

Do you by chance have a guide for setting up the Google instances? I think that would go a long way. Also, it would be nice for a good AWS guide to be pinned in the forums too.

I'm a bit naff at guides but I'll try to throw together a basic one for GCP if I get some time at the weekend. It's relatively simple compared to AWS so hopefully won't take too long.

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

With OZ chosen, bunkering is real work ... with those shorter runtimes I need many more clients ...

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

And it seems OZ has already run dry ... hope it's us ...

As OZ allows uploads through a 0.01 network setting, the best way to bunker it is to run an update script with the network turned off in BoincTasks/BOINC Manager. This stops you from crunching tasks as fast as you grab them. Pending downloads will accumulate up to your max cache (70 x number of threads?), capped at 1000, for you to download when you turn the network back on. Once they're downloaded, turn the network off again or block IP 169.47.63.74.
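To see when the 1000 cap starts to bite, here is the arithmetic as a tiny sketch. The 70-tasks-per-thread figure carries a question mark in the post, so treat `per_thread=70` as an unconfirmed assumption; the helper name is hypothetical:

```python
def max_pending_tasks(threads: int, per_thread: int = 70, cap: int = 1000) -> int:
    """Estimated bunker ceiling for one BOINC client: per_thread * threads, capped."""
    # per_thread=70 and cap=1000 are the figures from the post; 70 is unconfirmed
    return min(per_thread * threads, cap)


for threads in (8, 14, 16, 32):
    print(threads, max_pending_tasks(threads))  # 560, 980, 1000, 1000
```

Under that assumption, a single client stops gaining from extra threads somewhere past 14, which is where running several BOINC instances per box starts to pay off.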

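Windows batch file script for WCG:

```bat
rem Run this as a .bat file: the doubled percent sign on the loop variable is batch-file syntax.
rem Requests a WCG project update up to 10000 times, roughly every 260 seconds.
for /l %%x in (1, 1, 10000) do (
    echo %%x
    "c:\Program Files\Boinc\boinccmd.exe" --project http://www.worldcommunitygrid.org/ update
    rem 260 pings to localhost, about one per second, act as a delay of roughly 260 seconds
    ping 127.0.0.1 -n 260 > nul
)
```

Linux terminal command for WCG:

```sh
# Re-runs the WCG project update every 260 seconds until stopped with Ctrl+C
watch -n 260 boinccmd --project http://www.worldcommunitygrid.org/ update
```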
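The same idea as a Python sketch, for anyone who prefers one script on both Windows and Linux. It assumes `boinccmd` is on the PATH (on Windows, swap in the full path used in the batch file) and uses `boinccmd --set_network_mode never` as a stand-in for turning the network off in BoincTasks/BOINC Manager; per the post above, project update requests still go through with the network off. The helper names are hypothetical, and the `run`/`sleep` parameters exist only so the loop can be exercised without a live client:

```python
import subprocess
import time
from typing import Callable, Dict, List

WCG_URL = "http://www.worldcommunitygrid.org/"


def bunker_commands(url: str = WCG_URL) -> Dict[str, List[str]]:
    """boinccmd argument lists used by the loop below."""
    return {
        "net_off": ["boinccmd", "--set_network_mode", "never"],
        "update": ["boinccmd", "--project", url, "update"],
    }


def run_bunker_loop(rounds: int, interval_s: float,
                    run: Callable[[List[str]], object] = subprocess.run,
                    sleep: Callable[[float], None] = time.sleep) -> None:
    """Turn network activity off once, then request a project update every interval_s seconds."""
    cmds = bunker_commands()
    run(cmds["net_off"])
    for i in range(rounds):
        run(cmds["update"])
        if i < rounds - 1:  # no need to wait after the final update
            sleep(interval_s)
```

`run_bunker_loop(10000, 260)` mirrors the batch loop. It deliberately never turns the network back on; do that yourself (for example with `boinccmd --set_network_mode always`) when you want the pending downloads to come through.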


I may not be understanding correctly, but if you're asking whether the trick will still work with the WCG IP blocked, or whether the WCG project-page network setting works independently of the other BOINC projects, then no. The global BOINC no-network setting works because it blocks uploads/downloads but still allows project communication.

RFGuy_KCCO

DCOTM x4, [H]ard|DCer of the Year 2019

- Joined: Sep 23, 2006
- Messages: 923

I am currently crunching my way through an ~11K WU OZ bunker. Hope to add another 1K to that this week, as well.

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

I only have around 6k in the bunker and still need to crunch through a bit more: 400 pages in WCG at 15 WUs each. An Epyc with 15 parallel BOINC instances is waiting to get the pipe opened again.
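For anyone copying the multi-instance setup: the BOINC client can be started several times on one box with `--allow_multiple_clients`, giving each instance its own `--dir` and `--gui_rpc_port`. This minimal sketch only builds those command lines. The root directory and the `instanceNN` naming are hypothetical. Each data directory must already exist and be attached to the project, and `boinccmd` may also need `--passwd` with that instance's gui_rpc_auth.cfg password:

```python
from pathlib import PurePosixPath
from typing import Any, Dict, List

BASE_PORT = 31416  # default BOINC GUI RPC port, left free for the main client


def instance_commands(n: int, root: str = "/var/lib/boinc-extra") -> List[Dict[str, Any]]:
    """Build launch and control commands for n extra BOINC clients (nothing is executed)."""
    instances = []
    for i in range(1, n + 1):
        data_dir = PurePosixPath(root) / f"instance{i:02d}"  # hypothetical directory layout
        port = BASE_PORT + i  # each instance needs its own RPC port
        instances.append({
            "dir": str(data_dir),
            "client": ["boinc", "--allow_multiple_clients",
                       "--dir", str(data_dir), "--gui_rpc_port", str(port)],
            "control": ["boinccmd", "--host", f"localhost:{port}"],
        })
    return instances


for inst in instance_commands(2):
    print(" ".join(inst["client"]))
```

For 15 instances, as on the Epyc above, `instance_commands(15)` gives ports 31417 to 31431, and each client is then driven with its own `boinccmd --host localhost:PORT ...`.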

pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

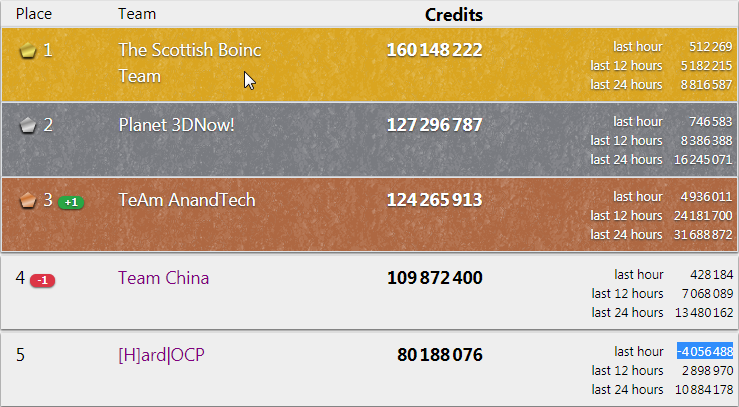

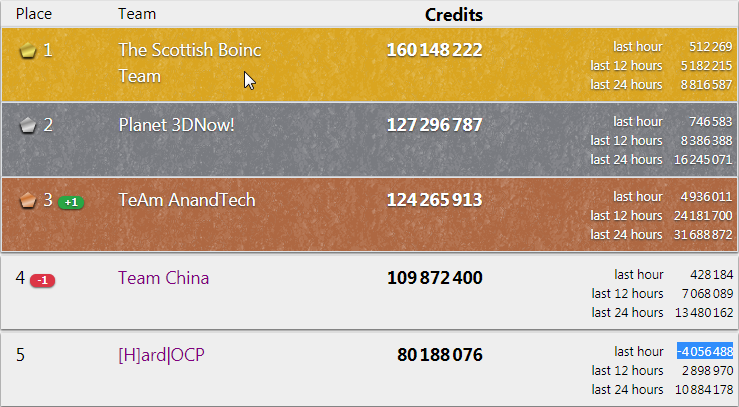

I'm really sorry guys. I joined TAAT for a day, so my 4M points moved to TAAT. I still have 3 bunker drops, which I'll probably hold for a few more days. Yeah, call me a traitor. It would be fun if TAAT could overtake TSBT in WCG with 8 days left to go. I nudged them close to P3D, and hopefully this will inspire the rest of TAAT to work harder.


Also, the -4M point deduction from our team creates FUD (Fear, Uncertainty, Doubt) in TSBT and expands our inter-teamwork skills. They may sense some fear and perhaps divert some resources from other projects to WCG. Just my one cent.

phoenicis, are you running the bunker technique? I'm sure you could massively inflate your credits with the rigs that you have. Maybe give me access to your rigs and I can set this up over the weekend. Just kidding.


Phew, I thought they'd caught you with your fingers in the cookie jar and docked the points.

I've tested the technique in GCP and simulated it on the tail end of my bunkering by running four instances at once. Both worked quite well.

It's just a bit of a guilt thing that's stopping me from going whole hog. I know anything that doesn't change files/impact the science is fair game in the Pent and that other teams are no doubt using the approach but it still made me feel guilty.

I'm sure I'll get over it, lol.


pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

Once you dump your bunker, start a new host in GCP. That way, you don't have to wait very long to get peak pphr. Somehow WCG still remembers your average pphr and will try to average it down, which will hit your pphr very badly when you run on the same host. I normally run about 4 times the real CPU thread count. But once you have a task compute error, all downloaded tasks following the errored task will switch to a quorum of 2 for OZ. Just try not to get any compute errors.

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

pututu, how does that work? I use multiple BOINC instances so I can load and crunch without network on the same physical hardware. But how does it inflate credits? Runtime gets longer; during the HSTB chase I saw that the CPU time was still the “real” crunch time, as it looks like it is measured without all the overhead from task switching.

Is that different in the case of OZ?


pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,091

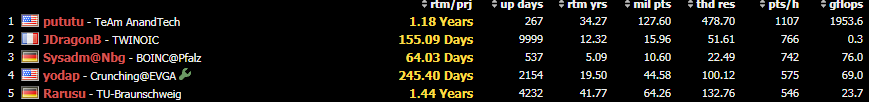

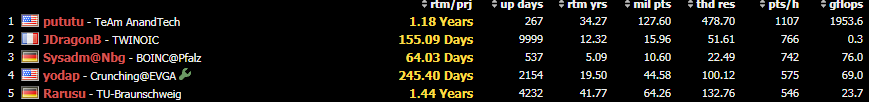

Somehow WCG has an algorithm that keeps track of how much credit to grant for the work done on a rig, and it keeps updating that credit grant based on factors I don't know. All I know is that your last pphr is used to grant the credit for the next update, so if you want to bunker, it is important to prevent any completed task from being uploaded and updating the credit counter (whatever that is in their system). After you drop the bunker, your credit counter will adjust for the next upload. Your bunker drop will depend on the previous pphr. On subsequent uploads your points will be extremely low, and it will take time to get back to normal. What I do is start a new host to speed things up. Most importantly, once you have set up your rig for max pphr, no task should be uploaded, but you can still download tasks. How do you do this? Simple: just suspend ongoing tasks that are about to finish and start new ones, so that no task is ever completed and uploaded during the download period. Yes, you have to watch this carefully, so it is manual.

The other thing is that when you set this up, you should decide beforehand how many CPUs (ncpus) you want your rig to fake. Keep in mind that you don't want to generate any task errors, as these may screw up the points earned. The more ncpus you have, the more points you will get, until you see task errors. So far I have tested 4 x the actual cores and it looks stable.
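The post doesn't show where the fake CPU count goes. In the standard BOINC client it is the `<ncpus>` option under `<options>` in cc_config.xml in the instance's data directory; re-read it with `boinccmd --read_cc_config` or restart the client. This minimal sketch writes that file using the 4x multiplier from the post. `real_threads` defaults to `os.cpu_count()`, the helper name is hypothetical, and any other options you already keep in cc_config.xml would need merging in by hand:

```python
import os
import xml.etree.ElementTree as ET
from typing import Optional


def cc_config_xml(multiplier: int = 4, real_threads: Optional[int] = None) -> str:
    """Return a minimal cc_config.xml body that reports multiplier x real threads as ncpus."""
    threads = real_threads if real_threads is not None else (os.cpu_count() or 1)
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    # ncpus makes the client act as if the host had this many CPUs
    ET.SubElement(options, "ncpus").text = str(multiplier * threads)
    return ET.tostring(root, encoding="unicode")


print(cc_config_xml(4, 16))  # <cc_config><options><ncpus>64</ncpus></options></cc_config>
```

Per the post, watch for task errors before pushing the multiplier any higher.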

I sometimes monitor this site and compare with others. I'm now at the top of pphr and honestly I'm not returning any more work done other than juicing the points.


ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

What’s going on with atp1916? Reading the live stream on Penthouse ... what happened?

Gilthanis

[H]ard|DCer of the Year - 2014

- Joined: Jan 29, 2006
- Messages: 8,729

I will try and update you over in Slack a little later today.

atp1916

[H]ard|DCoTM x1

- Joined: Jun 18, 2004
- Messages: 5,004

Now that the competition is mostly settled...I do want to make my position as clear as possible on all of this so as to prevent misinformation.

1. I did not "quit" because of anything [H] did. I've been [H] for 15 years, regardless of this whole Boinc thing. That will never change.

2. I did not "quit" because of the trolling from certain 5150s in the ShoutBox. I participated in the Shoutbox in specific attempts to get folks to drop their bunkers in hopes it would clear up my Pending stack. My Pending did not clear up after seeing fairly massive bunker drops, and that began to really tick me off. Still have 1220. That is not only stupid, but a waste of my time and effort.

3. I did "quit" explicitly due to the entirely closed-source administration of this Challenge, and - in a generalized sense - the nature of "competitive Boinc'ing". Team-hopping in disguise, mega-team formations, bunkering, dirty tactics, insider information, and even misinformation - Some see it as part of the "game" here - I do not.

4. I did "quit" explicitly due to an epiphany that I pay to participate in something I feel is busted and ethically challenged. This specific competition highlights all of the items in #3, and brings it all together in a symphony of complete and utter idiocy. Bowing out before the competition ended is something I do feel a bit bad about, but I had had enough.

5. I should have "retired" at the end of last year's campaign, but in retrospect that might have impacted [H]'s outcome in the challenge here. Bit of a rock and [H]ard place if you will.

Will I be back?

No.

I am not interested in the science behind any of this and frankly do not give a damn about points or badges. I am not here to talk #### in shoutboxes/forums, deal with the apparently many 5150s in the Boinc space, or play the politics game.

I was here to compete in good faith, but that is obvious and foolish naivety on my part.

So in closing: Long live the [H]orde. We stand on our own two feet no matter what.


atp raises some good points and I agree with the main complaint (as I read it) stated in #3. There's too much bullshit going on. Could rant and rave, but as I think about it, assholes who screw the game by pulling WUs they'll never do, just to screw with people, are one of the biggest problems. (BTW, what's this 5150 reference?) Pre-event bunkers should be banned. Period.

That aside, I'm a little confused about LHC. Is it SixTrack only for the sprint? I thought it was, but I keep seeing people talking about Atlas and such too.


DooKey

[H]F Junkie

- Joined: Apr 25, 2001
- Messages: 13,552

I've long thought the crap that is pulled during these events is shady and not in the spirit of competition.

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

Had to google it; came up with this:

https://www.dictionary.com/e/slang/5150/

Kind of fits ...

ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013
- Messages: 2,561

And agree: the tactics getting a bit out of control.

Countermeasure: protest, or absence next time. Or even joining the leading team with [H] in our user names, to show how weird it is, and claiming the victory.

Otherwise it doesn’t make sense to participate in something which is self-destructing, unless that is the objective.

@atp1916 sorry to see you leaving; you will be missed and are always welcome to rejoin.
