You don't, as long as you maintain the original framerate (or a multiple thereof). (You can also drop frames, repeat frames, draw partial frames, etc., which is how judder is introduced.) If you deviate from that at all and just play the audio at the new speed, it becomes pitch shifted. You compensate by time-stretching (or compressing) the audio instead.
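To put a rough number on that pitch shift, here is a minimal sketch. The function name and the 24 to 25 fps figures are just illustrative assumptions on my part, not anything a particular player does internally.

```python
import math

def pitch_shift_semitones(source_fps: float, playback_fps: float) -> float:
    """Pitch change in semitones if the audio is simply played at the new speed.

    Hypothetical helper: playing faster raises pitch, playing slower lowers it.
    One octave (a doubling of speed) is 12 semitones.
    """
    return 12 * math.log2(playback_fps / source_fps)

# Illustrative case: a 24 fps source played back at 25 fps.
shift = pitch_shift_semitones(24, 25)
print(f"{shift:+.2f} semitones")
```

Time-stretching is what lets you change the duration without that shift.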

Anyway, I was leaning heavily on hyperbole, as a form of sarcasm. Without knowing exactly how PowerDVD works internally, I can't possibly know. The point is, they could have gone with either solution (or a hybrid).

The point I'm really trying to make is you end up with some morphed output either way.

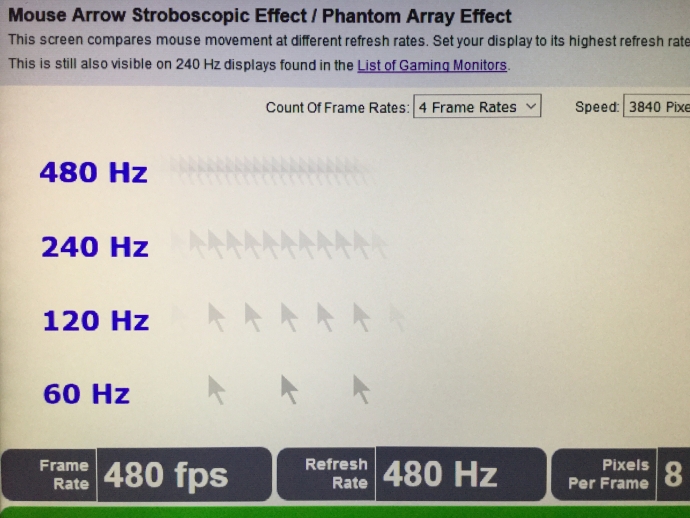

A real-world example of using audio stretching: Say I have a 24 fps source. The closest my monitor's available refresh rates get to a multiple of 24 is 95Hz (96Hz being the ideal). Being 1Hz off is a big deal, as it would introduce motion judder. So I have two options: 1) use frame interpolation to hit a target framerate of 95/4 = 23.75 fps, or 2) just display the video as-is by slowing it down to 23.75 fps, which also requires stretching the audio. As a personal preference, I'm going with option #2 so as to keep the original picture intact, since my eyes are far more sensitive than my ears. You can use ReClock with MPC to achieve that result.
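The arithmetic behind that choice can be sketched like this. It is only a rough illustration: `pick_refresh` and the list of refresh rates are made up for the example, and this is not how ReClock or MPC actually decide anything.

```python
def pick_refresh(source_fps, refresh_rates):
    """Pick the refresh rate whose whole-number divisor lands closest to source_fps.

    Returns (refresh_hz, repeats_per_frame, playback_fps, audio_stretch), where
    audio_stretch is the duration multiplier needed to keep audio in sync.
    Hypothetical helper for illustration only.
    """
    best = None
    for hz in refresh_rates:
        repeats = max(1, round(hz / source_fps))  # refreshes shown per video frame
        playback_fps = hz / repeats               # speed the video must run at
        error = abs(playback_fps - source_fps)
        if best is None or error < best[0]:
            best = (error, hz, repeats, playback_fps)
    _, hz, repeats, playback_fps = best
    return hz, repeats, playback_fps, source_fps / playback_fps

# Assumed set of available modes; 95Hz is the one from the post above.
hz, repeats, fps, stretch = pick_refresh(24, [60, 75, 95])
print(hz, repeats, fps, f"audio runs {100 * (stretch - 1):.2f}% longer")
```

With these assumed modes it settles on 95Hz with each frame shown 4 times, so the video runs at 23.75 fps and the audio has to be stretched by about 1%.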

TL;DR: Unless you game 24/7, there is nothing "glorious" about a new and fancy monitor that doesn't support a refresh rate that is a multiple of 24.

Eh, variable refresh makes this a non-issue. This goes back to devs being lazy fucks again. If PowerDVD doesn't have a fullscreen exclusive mode that actually runs at 24Hz (or 48Hz) for G-Sync monitors, they suck cock. 24 fps movies should not have judder in a world with variable refresh displays.
