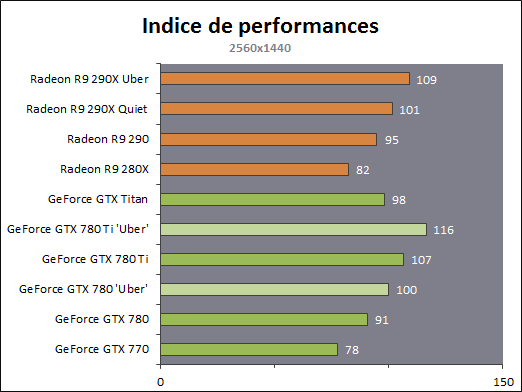

ASUS Radeon R9 290 DirectCU II OC Video Card Review - On our test bench today is a factory-overclocked Radeon R9 290 from ASUS sporting the DirectCU II cooling system. We will compare it to the NVIDIA GeForce GTX 780 to determine which card reigns supreme. AMD prices have finally stabilized back to normal, and this puts one card at an extreme disadvantage to the other. Find out which one.
