Probably one of the worst times for Nvidia to go all power hungry, on top of the price bloat. It is not just additional power for the GPU but also additional cooling for the room, plus power supplies are not 100% efficient: 450 W / 0.9 = 500 W from the wall. If AIBs go wild with power up to 600 W, 600 / 0.9 ≈ 667 W (call it 666, Nvidia's magic number). I'd like to see what AMD has to offer on price/performance. This may be the generation I skip, after over two decades of not skipping a graphics card release. Nvidia's MSRP is utterly unreliable, I'm not interested in the FE since it is a huge bulbous brick, the AIBs' air-cooled versions are worse, and only a hybrid has a chance, but its price may be way above Nvidia's laughable MSRP guidelines.

I invested in a significant solar/battery system last year as well (Yank in the UK). Energy costs have gotten ridiculous over here, even before the war. ~20% of my net now gets sold back to the grid. It feels good being able to power my house (and shortly my car), and I'm intimately aware of my energy gain/loss. I now understand why my father yelled at us for leaving the lights on. Running a 450 W GPU for as much as I work and game would put a bigger dent in the curve that I have now.

The market dynamics are certainly changing, but I'm betting if most people did the math even at present, they'd be more interested in performance per watt and heat loss and dispersion.
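For anyone who wants to do that math, here is a minimal sketch of the wall-draw arithmetic from the post above. The flat 90% efficiency is the post's own assumption, and the function name is just made up for illustration.

```python
def wall_power_w(dc_load_w: float, efficiency: float) -> float:
    """AC power pulled from the wall for a given DC load and PSU efficiency (0-1)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_load_w / efficiency


# 450 W is the stock board power mentioned above, 600 W the rumoured AIB limit.
for load in (450, 600):
    wall = wall_power_w(load, 0.90)
    print(f"{load} W at the card -> {wall:.0f} W at the wall "
          f"({wall - load:.0f} W lost as heat inside the PSU)")
```

Either way, every watt drawn at the wall ends up as heat in the room, which is where the extra cooling load comes from.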

RTX 4xxx / RX 7xxx speculation

- Thread starter Nebell

- Start date

Someone correct me if I'm wrong, but I'm under the impression that PSU efficiency certifications only hold at certain loads. Off the top of my head, I want to say I remember reading something like 50% of total power. So an 80 PLUS Gold/Platinum PSU will be X efficient at that specific certified load range, but could be significantly lower when run at a higher or lower share of its capacity. I'm sure someone here will know the specifics of the testing/certification methodology.

I do know that this appears to be the case with any type of power conversion, be it DC to DC or AC to DC, with the severity depending on the method and the specific variables. With the traditional DC-to-DC conversion methods that I'm aware of, the maximum conversion efficiency (ostensibly about 90-95% for even the most efficient mainstream methods) is only achieved under very specific conditions, i.e. either very close input and output voltages or a very particular spread within a particular current range. Obviously converters can be pretty easily designed to meet optimum conditions at the component level, where the inputs are well defined, but main system PSUs have a pretty wide potential output range in terms of current, and in most cases here in the US also a pretty wide input range, as most PSUs are selectable between ~100-130 VAC and ~200-250 VAC single-phase input, and are often 50 Hz or 60 Hz compatible as well, to provide the largest potential market for a single model design.

All that to say, I imagine there's a lot more complexity to this than we realize at a glance.
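For what it's worth, the 80 PLUS program does test at a few fixed load points (20%, 50% and 100% of rated output), and the Gold tier at 115 V asks for at least 87%, 90% and 87% there. Below is a rough sketch of what that means for wall draw. The straight-line interpolation between the test points and the 1000 W unit are assumptions for illustration only, since real efficiency curves differ per model.

```python
# 80 PLUS Gold minimums at 115 V: (fraction of rated load, efficiency).
GOLD_115V = [(0.20, 0.87), (0.50, 0.90), (1.00, 0.87)]


def efficiency_at(load_fraction, points=GOLD_115V):
    """Piecewise-linear guess between the certified points (an assumption).

    Outside the tested range the nearest certified value is reused;
    real units usually do worse than that below 20% load.
    """
    if load_fraction <= points[0][0]:
        return points[0][1]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if load_fraction <= x1:
            t = (load_fraction - x0) / (x1 - x0)
            return y0 + t * (y1 - y0)
    return points[-1][1]


def wall_draw_w(dc_load_w, psu_rated_w):
    """Wall draw for a DC load on a PSU of the given rating."""
    return dc_load_w / efficiency_at(dc_load_w / psu_rated_w)


# Hypothetical 1000 W Gold unit at light, medium and heavy load.
for load in (100, 500, 900):
    print(f"{load} W load -> about {wall_draw_w(load, 1000):.0f} W from the wall")
```

So the 50% figure is where the requirement peaks, but the certification covers the other two load points as well.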

Strange bird

Gawd

- Joined

- Feb 19, 2021

- Messages

- 530

In case you didn't know, new power supplies are already out. In Europe I don't believe there will be any problems, except that computers will draw as much power as old vacuum cleaners and similar appliances.

https://www.techpowerup.com/299196/seasonic-announces-the-vertex-atx-3-0-and-pcie-5-0-ready-psu-line

https://hardcoregamer.com/hardware/...towards-high-end-systems-overclocking/425914/

https://www.msi.com/blog/msis-meg-ai1300p-pcie5-is-the-worlds-first-atx-30-compliant-psu

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

> I mean, it's Intel that made the spec for the connector, not Nvidia. If there's a problem with insertions of a 12VHPWR connector, it isn't an adapter vs. ATX 3.0 PSU thing. They are the same connector. But I want to see how many insertions PCIE 8pins are rated for (I did some googling and didn't immediately find anything). Nothing is new with the way the pins go in with the 12VHPWR connector compared to PCIE 6/8pin or EPS connectors to make this suddenly be a brand new problem.
>
> Also incidentally, Nvidia didn't report any problems with the connection to cards. You can see what they say linked above on this page, they talk about heat issues where the cables connect to PSUs (not that it makes any difference that I can imagine, unless some PSU makers have lower quality connectors on their ends than the cards have).

You are totally right, this is an Intel-spec connector and it's not an adapter vs. ATX 3.0 PSU thing. It's the same connector on both ends. I pointed that out above. It's not even an Nvidia-only thing; for all we know, the new AMD series might have the same connector. Nvidia didn't report any problems with connections to the card, because the report was meant as feedback to PSU manufacturers from their testing of the connector. Nvidia also didn't report that they have solved the connector issue on their end; in fact, they were trying to find out what the root causes were.

I'm sorry, but you are totally wrong about this not being a new problem. A PCI-E 6-pin cable is rated for 75W/6.75A, an 8-pin for 150W/13.5A. The new 12+4-pin: 600W/55A. To draw a parallel from the 8-pin generation, some have learned the hard way that connecting 8+6 or 8+8 via a single PCI-E cable on a power-hungry GPU might not be a wise choice. I would feel much more comfortable connecting my next GPU to the 8-pin Ultraflex cables of the FD ION+ 2 850W Platinum in my secondary system than to the next PSU I am considering buying for my primary system, with a flimsy 12+4 connector that has issues with bending. Unless they fix the problem with the connector, I might have a potential issue on both the PSU and the GPU side with that connector and bending.
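To put the wattage ratings above on a per-pin footing, here is a small sketch. It assumes a nominal 12.0 V rail, three live +12V pins on the 8-pin and six on the 12+4-pin, and perfectly even current sharing, none of which is guaranteed in a real cable. At a nominal 12 V the totals come out at 12.5 A and 50 A, a little below the amp figures quoted in the post.

```python
NOMINAL_V = 12.0  # assumed rail voltage

# connector name -> (rated watts, number of +12V pins carrying current)
CONNECTORS = {
    "PCIe 8-pin": (150, 3),
    "12VHPWR (12+4)": (600, 6),
}


def per_pin_current_a(power_w, live_pins, volts=NOMINAL_V):
    """Current per +12V pin if the load were shared perfectly evenly."""
    return power_w / volts / live_pins


for name, (watts, pins) in CONNECTORS.items():
    total_a = watts / NOMINAL_V
    print(f"{name}: {total_a:.1f} A total, "
          f"{per_pin_current_a(watts, pins):.2f} A per pin")
```

Under those assumptions each pin in the new connector carries about twice the current of a pin in an 8-pin at its rated load.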

On the positive note regarding the new RTX 4XXX series, it seems Nvidia have taken measures to combat the transient load issues that plagued some PSUs with the 3XXX series:

Techpowerup: "In the chart above, NVIDIA shows how current spikes get mitigated by their new VRM design, which uses a PID controller feedback loop. While the RTX 3090 bounces up and down, the RTX 4090 stays relatively stable, and just follows the general trend of the current loading pattern thanks to a 10x improvement in power management response time. It's also worth pointing out that the peak power current spike on the RTX 3090 is higher than the RTX 4090, even though the RTX 3090 is rated 100 W lower than the RTX 4090 (350 W vs 450 W)."

mirkendargen

Limp Gawd

- Joined

- Dec 29, 2006

- Messages

- 435

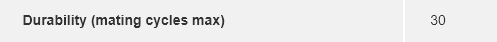

Finally found it. Guess how many insertions PCIE 8pin connectors are rated for?

Yup, you guessed it, 30.

https://www.molex.com/molex/products/part-detail/pcb_headers/0455860005

And the cables? Because I've gone way beyond that with my EVGA 1600W T2 PSU and all of the GPUs that I've swapped in and out (including repasting, watercooling, and troubleshooting) over the past 6 years or so.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

I think you need to give more context if you want to compare a connector part to the rest of the discussion about the 12+4-pin connector and Nvidia's point-of-failure test, where the cable starts to melt after 40 matings. Not to mention how flimsy it is when it comes to bending, and be honest, who doesn't bend a little when they cable manage?

According to the linked spec sheets, the durability test requires a maximum change in resistance of 20 milliohms (0.02 ohm) after 30 mating cycles at a maximum of 10 cycles per minute. The part's maximum voltage is also 600 V DC.

So OK, when does it start to melt a cable rated for 150W/13.5A? How many mating cycles does it take before the increase in resistance creates a hotspot in our standard 8-pin connectors for PC PSUs? What's the side-load situation there? You need to connect (no pun intended) the dots from the specs of a connector that supports a wide range of cable thicknesses, voltages and amperages to a PC PCI-E power cable limited to 150W/13.5A for this to even matter regarding the 12+4-pin issue that Nvidia reported concerns about. It's nowhere near an apples-to-apples thing here.

mirkendargen

Limp Gawd

- Joined

- Dec 29, 2006

- Messages

- 435

https://www.molex.com/molex/products/part-detail/crimp_housings/0455870004

The end that goes on cables doesn't have an insertion cycle rating.

So the real "fix" has nothing to do with the connector. Users shouldn't bend their cables 90 degrees less than 3 cm from the connector. Vendors could force compliance with this by putting a boatload of reinforcement in the first 1 cm of the cable to ensure the pins inside the connector stay straight and the wiring connections to the pins aren't stressed. GPU and/or PSU makers could put OCP (over-current protection) on each +12V wire rather than on the entire combined device/rail.

And users should already not bend 8-pin PCIe cables like that.

And it still isn't some sort of giant engineering scandal that the new connector is rated at 30 insertions, since the old one is too.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

Yes, both the "male" and the "female" parts have mating ratings. Check under "mates with" on your link.

There is no real "fix". Nvidia presented the issues and possible causes from their tests, not fixes. These tests were conducted with a "standard" bend at 3 cm, which resulted in issues compared to straight connections.

Users do bend their 8-pin PCI-E cables the way Nvidia did in its tests at 3 cm, and 8-pin Ultraflex cables are sold precisely because users want to bend and cable manage ...

It's not an OCP issue, because Nvidia loaded the PSU within the specs of the 12+4-pin cable.

Yes, this is an engineering scandal with the new connector. If Nvidia is concerned, why shouldn't we be? If 40 cycles or bending makes it melt, that's not a good thing for $1000+ cards. Nvidia wouldn't have reported on it if it were of no concern and everything was normal. The more current you put through a cable, the more heat will build up if resistance increases. Even on the same 12+4-pin cable, resistance and temperature will react differently to a deviation depending on whether you run 150W, 250W, 350W, 450W or 600W.

Please, it's not an apples-to-apples comparison with our 8-pin PCI-E cables. Molex's spec of 30 cycles on a connector that supports up to 600 V DC doesn't mean that the 8-pin connector on a PC with 150W cables will melt after 30 cycles or more. Perhaps if you ran 600 V through the cable, it would ...
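The "more current, more heat" point is just P = I²R. A quick sketch of what the same extra contact resistance costs at different per-pin currents follows. The 20 mΩ figure is the maximum change from the durability spec quoted earlier in the thread. The per-pin currents are assumptions: a nominal 12 V rail, even sharing over three pins at 150 W and over six pins at 450 W and 600 W.

```python
def contact_heat_w(current_a, resistance_ohm):
    """Power dissipated in a contact: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


EXTRA_RESISTANCE_OHM = 0.020  # 20 milliohm deviation, per the quoted spec

# Assumed per-pin currents at 12 V with even sharing.
cases = {
    "8-pin at 150 W (3 pins)": 150 / 12 / 3,
    "12+4-pin at 450 W (6 pins)": 450 / 12 / 6,
    "12+4-pin at 600 W (6 pins)": 600 / 12 / 6,
}

for label, amps in cases.items():
    heat = contact_heat_w(amps, EXTRA_RESISTANCE_OHM)
    print(f"{label}: {amps:.2f} A per pin -> {heat:.2f} W extra heat per contact")
```

Doubling the per-pin current quadruples the heat in a degraded contact, and a pin that ends up carrying more than its share does worse still.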

Nvidia tested it at 600W; the 4090 is 450W. Should there be a 600W BIOS update (to go with the big-ass 600W+ coolers already fitted to the 4090 GPUs), there might be (according to Nvidia's testing) some young enthusiasts in their kids' rooms singing Bloodhound Gang's "Fire Water Burn" if this is not fixed.

Kyle Bennett has confirmed that there really was a 600W 4090 in the works (linked in a previous post in this thread).

- Joined

- May 18, 1997

- Messages

- 55,601

Thanks for the linkage, I had not seen that. Seeing that flat voltage reaction is an excellent thing.

I'd just like to point out that JayzTwoCents has a video where, after 15 insertions or so, that new cable standard started getting really hard to insert or remove on his EVGA 3090 Ti FTW3 card, as if a pin was going out of alignment (kinda like the shitty old Molex connectors).

That's pretty shitty, and an issue that I've never had with a PCIE 8 / 6+2 / 6 pin connector.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

Yeah, this is really good news and I feel it has been drowned out a bit in the media by the new PCI-E 5 connector issues. I think many people would be very happy to see the transient load issues resolved, since they plagued even some of the best PSUs out there.

I wonder whether, even with higher consumption than a 3090, the 850-watt PSU recommendation for a 4090 will end up being more realistic than it was for those cards.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

I would think so. Even though the 4090 has a 100W higher TBP (total board power), you shouldn't need the extreme overhead if the power spikes are suppressed (a quality 850W PSU can handle more than 850W, so it already has some overhead). Nvidia's new power design might solve a lot of the issues the GPU vendors and PSU vendors have been arguing about (who should handle the spikes, the GPU or the PSU). Fewer fluctuations mean more stability too, so this is great news from Nvidia!

> I would like one RTX 4080 12GB (if someone gave it to me for free). It would be just right for my Ryzen 2700X and 1080p resolution; I could run RDR2 at 100 fps on ultra settings without any problems.
>
> And the old Corsair TX 650W would be loaded just right, neither too much nor too little.
>
> Otherwise, yes, elitism is going for the richest as well.
>
> Those who have already bought an RTX 3090 or RTX 3080 will have no problem buying an RTX 4090 or 4080 16GB, because that is basically their only progress. With the RTX 4080 12GB and weaker there is no progress at all.
>
> Now Jensen figured out that there are enough elites in Europe and that he can make extra money, so he increased the prices for Europe even more.
>
> I have no doubt at all that the RTX 4090 and RTX 4080 16GB will go like hot cakes in Europe, the only question is whether they will be in stock.
>
> https://www.tomshardware.com/news/geforce-rtx-40-series-gpus-are-22-more-expensive-in-europe
>
> Obviously, the demand is there, so the prices can go sky high.

Fucking Europe is burning WOOD to stay warm. This winter without Russian gas is going to be BRUTAL. Nvidia is crazy with those prices.

Andrew_Carr

2[H]4U

- Joined

- Feb 26, 2005

- Messages

- 2,777

> (kinda like the shitty old Molex connectors).

Oh please God no, I thought we had put those behind us.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,028

> Me for one. GPU power cables are notoriously in the way of everything no matter what you do, due to their location in a system. Any change in hardware almost guarantees unplugging them just for sanity but also to not risk physically jostling the heavy ass modern GPUs the PCIE slots can barely support. If you ever move your machine for any reasonable distance, I highly recommend removing the GPU entirely, especially for any kind of car ride, as the sheer size and weight of them can cause all sorts of damage if you hit any bumps let alone have to deal with the sort of roads I have. I've killed a couple of GPUs going back and forth to my cabin which has a mile long 4wd only rough mountain two-track road.
>
> Some of you guys may just build a system, plug everything in one time and be done forever, but I imagine for most of us here, that's not the case. Any single significant hardware event causing a machine not to post, is likely to merit 30 cycles of plugging in my experience. Especially if you suspect a short to ground somewhere that ends up removing every peripheral and re-seating the MB, while ruling out each component one by one, which is pretty standard practice in diagnostic repair no? FWIW most electronics connectors in my experience over many years are rated in thousands of plugging cycles, though I'm not speaking to the PC market, which I admit I have no data on, but yeah, 30 is a pretty damn big red flag to me, especially when the potential for a dead short could fry the single most expensive component in most systems, and are common with electrical connector failures. Lacking any particular data however, I did work for a very long time in this industry, doing PC repair as a teenager for local shops, where we would routinely unplug connectors dozens of times in diagnostic assessment, to building some of the largest clusters of servers in the world at the time for various ecoms, b2bs, corps, and governments, (admittedly almost 20 years ago) and I can promise you if any connector or component had such low cycle life, I'd have killed it dozens of times over, so empirically I can't fathom this sort of cycle life being anywhere near normal, or commonly accepted.
>
> Just my 2c, take it for what it's worth.

The longest period I used a power supply for was about 12 years. Over that power supply's lifetime the PCI-E cables only went through 9 or 10 insertion cycles. That included 4 different video card configurations, migration to a new case once, transplant to a new motherboard, dismounting to get at a loose screw in the chassis, and to use temporarily when I RMA'd a new PSU. My last Corsair PSU went about 10 years with around the same number of insertion cycles.

What are you people doing that you need to do 30 or more cycles on your personal PC? I don't need to disconnect most things during cleaning, including my video cards.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,789

That's still no excuse. I've cycled mine more than 30 times testing GPUs, moving water cooling, adjusting cables, etc.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,028

Sounds like an edge case.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,789

You asked what people are doing, I answered. "I don't do this hence it's not an issue" doesn't excuse a possible issue.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,028

To which I replied with my opinion. If you're doing that many cycles then I'd be ordering more cables from the PSU manufacturer, considering 6- and 8-pin connectors are rated for the same number of cycles.

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

shit gets looser the more you put it in and out, giggity...

this isnt new but the new higher draw is, thats the issue. so get a new cable, the units will be fine.

- Joined

- May 18, 1997

- Messages

- 55,601

> Is there a chance you'll need to buy an ATX 3.0 PSU to use an RX 7500 video card?

No.

cvinh

2[H]4U

- Joined

- Sep 4, 2009

- Messages

- 2,100

I'm planning to use the Cablemod 3 x PCIe -> 12VHPWR cable. I think that should be enough for a 4090 Suprim. I don't need to use the 4 x PCIe -> 12VHPWR adapter that comes with it, do I?

mirkendargen

Limp Gawd

- Joined

- Dec 29, 2006

- Messages

- 435

Nope, no adapter needed then. I'm probably gonna use this one too; the Corsair one (in the render at least) looks pretty short and I think it might not work in my case (or at least not cleanly). You can have one custom made up to 1m from Cablemod though.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

The higher draw is new and the connector itself is new. It's the 12VHPWR connector that's the problem. As an example, take the new 12VHPWR to 8-pin cable from Corsair. The Mini-Fit Plus HCS terminals on the 8-pin side are rated for 100 cycles. On the 12VHPWR side, you have 30 cycles. The 12VHPWR connector is more flimsy when it comes to bending, so this is a better option than having a native 12VHPWR connector on the PSU side. We stuff a lot of cables on the PSU side when we cable manage.

On the GPU side, you have a bit more room not to bend the cable so much, but I bet its not just edge cases where people have a bend on the GPU side too. Here is from JonnyGURU on this subject:

JonnyGURU: "They are. The tendency to bend a sharper angle is moreso on the PSU side as people are trying to route the cable from under a PSU shroud, around a stack of 5.25" bays and then up the back of the mobo tray. Whereas, on the GPU side, you're just trying to avoid the side panel. Not saying it's not ever going to happen on the GPU side; but given the size of the cards and the distance between the card and side panel in some instances, we might see some failures."

The 12VHPWR connector is some flimsy shit and until this is fixed, I would much prefer 8-pin connectors on both sides!

- Joined

- May 18, 1997

- Messages

- 55,601

Hardly any 4090 stock is going to EU. NV knows there is little to no chance of sales there currently. NA inventories for launch are up 400% to 500% as to what we would usually see for this series of card.

DooKey

[H]F Junkie

- Joined

- Apr 25, 2001

- Messages

- 13,552

Wow. Guess they had to use as many of those wafers they bought as possible. That's just crazy numbers for a halo card.

- Joined

- May 18, 1997

- Messages

- 55,601

If you go back and listen to the last NV earnings call you will hear that they also took a write-down due to purchases already made on future inventory. Once you buy those wafers, they either get run and you pay for those, or they don't get run and you still pay for those....

Strange bird

Gawd

- Joined

- Feb 19, 2021

- Messages

- 530

What is NA? It's kind of hard for me to believe that they won't sell in Europe, since that's where they made the most from gamers and cryptocurrency miners as far as the RTX 3000 series is concerned.

NA = North America. Crypto is dead, and Europe has no cheap Russian gas. Europe will struggle to stay warm this winter and even at 600W $1600 US is too much for a space heater.

Denpepe

2[H]4U

- Joined

- Oct 26, 2015

- Messages

- 2,269

If only they were $1600: https://wccftech.com/nvidia-geforce...s-available-in-europe-prices-1999-2549-euros/

- Joined

- May 18, 1997

- Messages

- 55,601

North America. You need to look at a global news feed once in a while. There will be inventory in the UK/EU, but not close to the traditional inventory.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,114

Prescalped, for our convenience ... I am holding out until the new AMD series launches, so hopefully there will be something more appealing then, or I cave in and get a 4090. I haven't had an AMD card for many years now, so it might be fun and new in a way.

Ya, no one is buying at that price. Ok, maybe the fun crowd at Davos and Ursula Gertrud von der Leyen but not the peons she looks down on, you know, regular people.

The Euro and the Pound are down 25% from fall 2020/early 2021, and electricity costs a fortune. In the UK, for example:

Electricity rates range from 36.01p per kWh in north Wales to 32.24p per kWh in northern England. That's about 40 US cents.

In markets where GPUs were already quite expensive, this time the price could be really prohibitive.
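To turn those rates into a running cost, here is a back-of-the-envelope sketch. The 36.01p/kWh rate is the one quoted above and the 500 W wall draw is the 450 W / 0.9 figure from earlier in the thread. The four hours a day at full load is purely an assumption, so plug in your own numbers.

```python
def annual_cost_gbp(wall_w, hours_per_day, pence_per_kwh, days=365):
    """Yearly electricity cost in pounds for a constant wall draw."""
    kwh = wall_w / 1000 * hours_per_day * days
    return kwh * pence_per_kwh / 100


# Assumed usage: 500 W at the wall, 4 hours a day, every day.
cost = annual_cost_gbp(wall_w=500, hours_per_day=4, pence_per_kwh=36.01)
print(f"About £{cost:.0f} per year for the GPU alone")
```

That is before counting the rest of the system or any extra cooling.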

https://browser.geekbench.com/cuda-benchmarks

https://videocardz.com/newz/geforce-rtx-4090-is-60-faster-than-rtx-3090-ti-in-geekbench-cuda-test

- 4090: 417713
- 3090 Ti: 260346
- 3090: 238123
- 3080 Ti: 233000
- 3080: 206390
- 2080 Ti: 176681 (FE) or 165093

4K raster performance:

https://static.techspot.com/articles-info/2442/bench/4K.png

Using extremely bad logic:

| GPU | Average fps | CUDA score | CUDA score per fps |
| --- | --- | --- | --- |
| 3090 Ti | 107 | 260,346 | 2433.14 |
| 3090 | 98 | 238,123 | 2429.83 |
| 3080 Ti | 95 | 233,000 | 2452.63 |
| 3080 | 86 | 206,390 | 2399.88 |

If the 4090 follows the really high correlation (almost 1.0 in Excel) between Geekbench CUDA score and fps at 4K without RT or DLSS, it would have averaged around 172 fps across those 12 games at 4K.
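The ~172 fps figure can be reproduced in a few lines. This is the same "extremely bad logic" as above: it assumes fps scales in straight proportion to the Geekbench CUDA score, using the average score-per-fps of the four Ampere cards. The variable names are made up for the sketch.

```python
from statistics import mean

# GPU -> (average 4K fps across the 12 games, Geekbench CUDA score)
ampere = {
    "3090 Ti": (107, 260346),
    "3090": (98, 238123),
    "3080 Ti": (95, 233000),
    "3080": (86, 206390),
}

# CUDA points "needed" per frame per second, averaged over the Ampere cards.
points_per_fps = mean(score / fps for fps, score in ampere.values())

rtx_4090_score = 417713
predicted_fps = rtx_4090_score / points_per_fps

print(f"{points_per_fps:.2f} CUDA points per fps on average")
print(f"Naive 4090 estimate: {predicted_fps:.0f} fps at 4K")
```

A compute benchmark says nothing about CPU limits, memory bandwidth or game-specific scaling, so treat the result as an upper-end guess at best.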

https://images.nvidia.com/aem-dam/Solutions/geforce/ada/nvidia-ada-gpu-architecture.pdf

Main stats expressed in absolute value and relative to the 3090 Ti

| Metric | GeForce RTX 2080 Ti | GeForce RTX 3080 12 GB | GeForce RTX 3080 Ti | GeForce RTX 3090 Ti | RTX 4080 12 GB | RTX 4080 16 GB | GeForce RTX 4090 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| FP32 TFLOPS | 14.2 | 30.6 | 34.1 | 40.0 | 40.1 | 48.7 | 82.6 |
| RT TFLOPS | 42.9 | 59.9 | 66.6 | 78.1 | 92.7 | 112.7 | 191.0 |
| Texture rate | 444.7 | 478.8 | 532.8 | 625.0 | 626.4 | 761.5 | 1290.2 |
| Pixel rate | 143.9 | 164.2 | 186.5 | 208.3 | 208.8 | 280.6 | 443.5 |
| FP32 TFLOPS (vs 3090 Ti) | 0.4 | 0.8 | 0.9 | 1.0 | 1.0 | 1.2 | 2.1 |
| RT TFLOPS (vs 3090 Ti) | 0.5 | 0.8 | 0.9 | 1.0 | 1.2 | 1.4 | 2.4 |
| Texture rate (vs 3090 Ti) | 0.7 | 0.8 | 0.9 | 1.0 | 1.0 | 1.2 | 2.1 |
| Pixel rate (vs 3090 Ti) | 0.7 | 0.8 | 0.9 | 1.0 | 1.0 | 1.3 | 2.1 |

Relative to a 3080 12 GB (ratios recomputed from the absolute values above)

| Metric | GeForce RTX 2080 Ti | GeForce RTX 3080 12 GB | GeForce RTX 3080 Ti | GeForce RTX 3090 Ti | RTX 4080 12 GB | RTX 4080 16 GB | GeForce RTX 4090 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| FP32 TFLOPS | 0.46 | 1.00 | 1.11 | 1.31 | 1.31 | 1.59 | 2.70 |
| RT TFLOPS | 0.72 | 1.00 | 1.11 | 1.30 | 1.55 | 1.88 | 3.19 |
| Texture rate | 0.93 | 1.00 | 1.11 | 1.31 | 1.31 | 1.59 | 2.69 |
| Pixel rate | 0.88 | 1.00 | 1.14 | 1.27 | 1.27 | 1.71 | 2.70 |

Relative to a 2080 Ti

| Metric | GeForce RTX 2080 Ti | GeForce RTX 3080 12 GB | GeForce RTX 3080 Ti | GeForce RTX 3090 Ti | RTX 4080 12 GB | RTX 4080 16 GB | GeForce RTX 4090 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| FP32 TFLOPS | 1.00 | 2.15 | 2.40 | 2.82 | 2.82 | 3.43 | 5.82 |
| RT TFLOPS | 1.00 | 1.40 | 1.55 | 1.82 | 2.16 | 2.63 | 4.45 |
| Texture rate | 1.00 | 1.08 | 1.20 | 1.41 | 1.41 | 1.71 | 2.90 |
| Pixel rate | 1.00 | 1.14 | 1.30 | 1.45 | 1.45 | 1.95 | 3.08 |
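If anyone wants to regenerate or cross-check ratio tables like these, a short sketch is below. It divides each absolute figure by the same metric on a chosen baseline card. Only three cards and two metrics from the tables are included to keep it short, and the helper name is made up.

```python
# Absolute figures taken from the tables in this post.
SPECS = {
    "RTX 2080 Ti": {"FP32 TFLOPS": 14.2, "RT TFLOPS": 42.9},
    "RTX 3080 12 GB": {"FP32 TFLOPS": 30.6, "RT TFLOPS": 59.9},
    "RTX 4090": {"FP32 TFLOPS": 82.6, "RT TFLOPS": 191.0},
}


def relative_to(baseline, specs=SPECS, digits=2):
    """Return every card's metrics divided by the baseline card's metrics."""
    base = specs[baseline]
    return {
        card: {metric: round(value / base[metric], digits)
               for metric, value in metrics.items()}
        for card, metrics in specs.items()
    }


for baseline in ("RTX 3080 12 GB", "RTX 2080 Ti"):
    print(f"Relative to {baseline}:")
    for card, ratios in relative_to(baseline).items():
        print(f"  {card}: {ratios}")
```

Recomputing from the absolute values makes it easy to spot a row or column that ended up in the wrong place.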
