HAL_404 ([H]ard|Gawd; joined Dec 16, 2018; 1,240 messages)

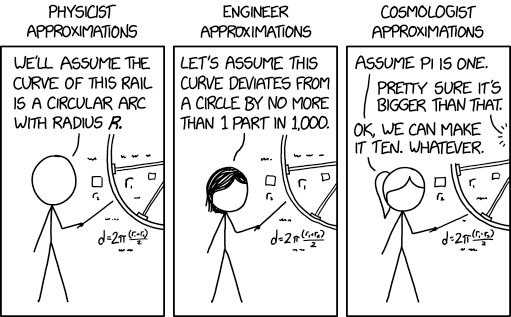

"high-performance computer has added 12.8 trillion new digits to the number Pi, in a calculation that reached a record-breaking 62.8 trillion figures in total."

Personally, I still prefer cherry to apple. Oh wait... wrong pi.

https://www.msn.com/en-us/news/tech...old-math-problem/ar-AANsSJ9?ocid=winp1taskbar
