GN actually saw performance degradation in a few scenarios when OC'd.

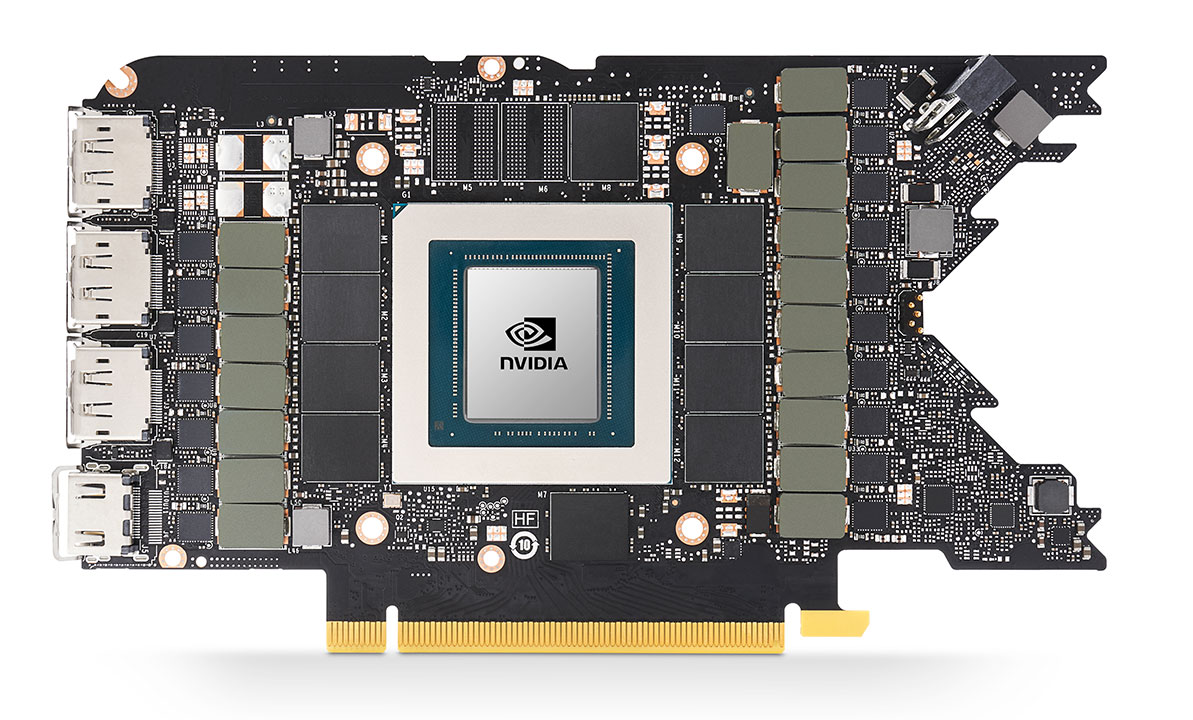

The FE cards may have the superior cooling solution, but with such a compact PCB, I imagine power delivery is at its limit even at default settings.

I was doing some reading about how memory OC works with this new GDDR6X stuff. It has some interesting error "correction" behavior. Instead of getting artifacts and all the other usual signs, you simply see a performance loss, because the memory controller keeps re-requesting the data until it arrives intact instead of passing bad data on to the pipeline.
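To make the "faster clock, slower card" effect concrete, here's a toy model I threw together. It is NOT how GDDR6X actually implements its error handling; it just assumes every transfer fails independently with some probability and a failed transfer is simply resent, so useful bandwidth is raw bandwidth times the success rate. The error-rate curve in `hypothetical_error_rate` is completely made up for illustration (no errors up to +5%, then rising fast), and bandwidth is in relative units with stock = 100.

```python
def effective_bandwidth(raw: float, error_rate: float) -> float:
    """Useful bandwidth when every failed transfer is retried.

    Toy assumption: each transfer fails independently with probability
    error_rate, and a failure costs one full resend, so the expected
    number of attempts per good transfer is 1 / (1 - error_rate).
    """
    if not 0.0 <= error_rate < 1.0:
        raise ValueError("error_rate must be in [0, 1)")
    return raw * (1.0 - error_rate)


def hypothetical_error_rate(overclock_pct: float) -> float:
    # Invented curve, not measured data: clean up to +5%, then errors
    # grow quadratically with the extra clock.
    excess = max(0.0, overclock_pct - 5.0)
    return min(0.99, 0.002 * excess ** 2)


def sweep(max_pct: int) -> list[tuple[int, float]]:
    # Raw bandwidth scales linearly with memory clock (stock = 100).
    return [
        (oc, effective_bandwidth(100.0 + oc, hypothetical_error_rate(oc)))
        for oc in range(max_pct + 1)
    ]


if __name__ == "__main__":
    results = sweep(15)
    for oc, eff in results[::5]:
        print(f"+{oc:>2}%  error rate={hypothetical_error_rate(oc):5.1%}  effective={eff:6.1f}")
    best_oc, best_eff = max(results, key=lambda t: t[1])
    print(f"best offset in this toy model: +{best_oc}% ({best_eff:.1f})")
```

The point is that nothing visibly breaks as the error rate climbs; the only symptom is the effective number dropping, so with GDDR6X you'd have to benchmark each memory offset rather than wait for artifacts.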
