
DF: Future-Proofing Your PC For Next-Gen Gaming

- Thread starter polonyc2

- Start date

defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

Pretty well balanced recommendations,

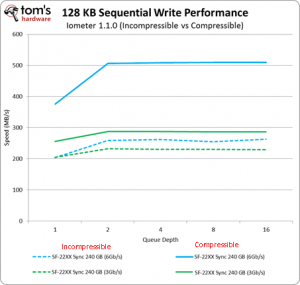

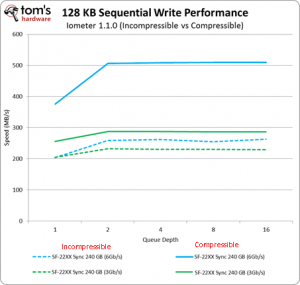

I love it when the Console jerk-off team can't get enough of SSD compression, when we already had it here on PC and decided it wasn't worth the extra complexity in controller firmware AND the bugs associated with that real-time compression.

REMEMBER SANDFORCE?

Because most data is already compressed on PC (your media library and every installer you ever downloaded, for example), the benefit of real-time compression is spotty.

But consoles have shit for data compression on discs, so this was the easy way out: the developer is assumed to be lazy about asset compression, so do it in hardware!

I don't expect it to change game design massively versus PC MMOs already requiring SSDs, but if it magically does, it would be easy to return us to 2012 and go full SANDFORCE 2.0!


limitedaccess

Supreme [H]ardness

- Joined

- May 10, 2010

- Messages

- 7,594

I'm not sure what the exact criteria are for what constitutes future proofing, but the biggest concern I'd have is with the CPU. I feel people are not realizing how small the gap between current CPUs and the upcoming consoles is relative to the past, especially if the recommendation is that a 6c/12t Zen 2 would be future proof.

The 2GHz A6-5200 4 core (Jaguar core, same as Xbox One/PS4) scores roughly 2000 in CB10 ST. The PS4 was clocked at 1.6GHz and the Xbox One at 1.75GHz, although they were 8 core designs.

A 2.4GHz Q6600 scores 2800 in CB10 ST. Do people feel a stock Q6600 was future proof enough for this console generation? And the Q6600 had a bigger performance gap than the Zen 2 options available now.

As another comparison point, a stock 2500K scores 5900 in CB10 ST. No wonder that was "future proof" enough for this entire generation.

XBX 3.6/3.8GHz. PS5 up to 3.5GHz. Zen 2 at 4.2GHz as a typical boost clock under the same gaming workloads? Let's say, what, a generous 20% to 25% advantage only? Enough to overcome overhead differences?

Now what if the games target 30 fps only and load the CPUs to that extent? Say goodbye to 60 fps PC gaming.


defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

Now what if the games target 30 fps only and load the CPUs to that extent? Say good bye to 60 fps PC gaming.

Sorry dude, most of these games running at TRUE 4K are going to be GPU-limited.

Last time I checked, the 5700 XT was NOT a 4K graphics card. Adding 20% more performance for the PS5 means barely 45 fps at true 4K resolution for a current-gen PC port:

Now, imagine a next-gen console game, and the performance will be closer to 30 fps. Or they're going to run it at 1440p 60 fps.

In games using 6 cores, a 6-core Zen 2 performs at a minimum of 100 fps (Red Dead Redemption 2), with typical framerates closer to 150:

And with only 6 major threads currently in use, performance could ALMOST DOUBLE over the lifetime of the 8 core/16 thread device!

Also, you should look forward to the single-CCX Zen 3 device, since it could boost gaming by up to 15%. That is why the 4-core 3300X comes within spitting distance of the 3600!


limitedaccess

Supreme [H]ardness

- Joined

- May 10, 2010

- Messages

- 7,594

Sorry dude, most of these games running at TRUE 4k are going to be GPU-limited.

And 6-major threads currently in use means performance could DOUBLE over the lifetime of the 8 core/16 thread device!

I feel you're making an assumption here that future console games will not fully leverage the CPUs placed in them. The problem wouldn't be if console CPUs are only running at something like <50% utilization to reach 60 fps. The concern would be if they end up running nearer to 100% utilization just to reach 30 fps (as they do now with this generation).

If the latter is the case, there is going to be a real issue with whether or not available PC CPUs have enough of a brute force advantage to overcome any potential overhead differences, which historically have existed.
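A rough way to put numbers on that concern is the sketch below. It assumes the game is purely CPU-bound and that frame rate scales linearly with per-core throughput, which are both simplifications; `pc_fps_estimate` and the `pc_overhead` factor are hypothetical names made up for illustration, not measurements.

```python
def pc_fps_estimate(console_fps, console_utilization, pc_speedup, pc_overhead=1.0):
    """Estimate a CPU-bound PC frame rate from a console baseline.

    console_utilization: fraction of the console CPU used to hit console_fps, in (0, 1]
    pc_speedup: PC per-core throughput relative to the console (e.g. 1.2)
    pc_overhead: hypothetical extra CPU cost multiplier on PC (1.0 means none)
    """
    if not 0 < console_utilization <= 1:
        raise ValueError("console_utilization must be in (0, 1]")
    # Frame rate the console CPU could reach if it were fully loaded.
    console_cpu_limit = console_fps / console_utilization
    # Scale by the PC's speed advantage, then pay any PC-side overhead.
    return console_cpu_limit * pc_speedup / pc_overhead


# Console CPU half idle at 60 fps: a 1.2x faster PC has plenty of headroom.
print(pc_fps_estimate(60, 0.5, 1.2))
# Console CPU maxed out at 30 fps: the same 1.2x PC falls well short of 60 fps.
print(pc_fps_estimate(30, 1.0, 1.2))
```

Under these assumptions a PC would need about a 2x per-core advantage to turn a fully loaded 30 fps console game into a 60 fps PC game.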

jeremyshaw

[H]F Junkie

- Joined

- Aug 26, 2009

- Messages

- 12,511

A bit of me is curious how much the CPU will really be loaded. Right now, developers HAVE to multithread as much as they can, since the individual Jaguar cores were basically junk, back in 2013. They were a failed Atom competitor, and the team that developed them went on to make Samsung's failed Mongoose cores. What a great lineage. There are serious questions whether those 8 Jaguar cores were even competitive vs smartphone CPUs in 2016, never mind 2020.

Now, game developers have access to high single core performance. There is less pressure to multithread the code, since the Zen2 CPU core is actually fast enough to be worth a damn. No more chasing down race conditions, resource contentions, etc. Sure, some work will still be farmed out to other cores, but the main game thread/loop isn't running on some weak uarch that could barely get out of its own way.

Furthermore, Sony's boost clock indicates they will allow some of the CPU's power budget to boost GPU clocks to the moon. I said indicates, Cerny straight up said they are using AMD's Smartshift.


limitedaccess

Supreme [H]ardness

- Joined

- May 10, 2010

- Messages

- 7,594

A bit of me is curious how much the CPU will really be loaded. Right now, developers HAVE to multithread as much as they can, since the individual Jaguar cores were basically junk, back in 2013. They were a failed Atom competitor, and the team that developed them went on to make Samsung's failed Mongoose cores. What a great lineage. There are serious questions whether those 8 Jaguar cores were even competitive vs smartphone CPUs in 2016, never mind 2020.

Now, game developers have access to high single core performance. There is less pressure to multithread the code, since the Zen2 CPU core is actually fast enough to be worth a damn. No more chasing down race conditions, resource contentions, etc. Sure, some work will still be farmed out to other cores, but the main game thread/loop isn't running on some weak uarch that could barely get out of its own way.

Furthermore, Sony's boost clock indicates they will allow some of the CPU's power budget to boost GPU clocks to the moon. I said indicates, Cerny straight up said they are using AMD's Smartshift.

We don't need to speculate on Jaguar performance (Xbox One/PS4) as it was released for PC platforms.

These are Cinebench 10 singlethread numbers from Anandtech. CB isn't everything but there is data for all 3 architectures.

Core 2 Quad Q6600 2.4GHz 2006 - 2778

i5-2500K 3.3GHz 2011 - 5860

A6-5200 2GHz Jaguar 4 core 2013 - 1986

PS4 Jaguar 8 core 1.6GHz 2013, assuming linear clock scaling - 1589

Xbox One Jaguar 8 core 1.75GHz 2013, assuming linear clock scaling - 1738

So the A6-5200 was clocked higher and was therefore faster than both.

The Q6600 was 1.75x faster than the PS4 and 1.60x faster than the Xbox One per core.

The 2500K was 3.69x faster than the PS4 and 3.37x faster than the Xbox One per core.

Now think how well those CPUs respectively performed over this generation.

If we assume current Zen 2 chips run at 4.2GHz, then they'd only have a 1.10x advantage over the XBX with SMT off, 1.17x over the XBX with SMT on, and 1.2x+ over the PS5 (clock rate is variable). As we can see, we have nowhere near the raw performance advantage that even CPUs released years before the consoles had last time around.
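The ratios above are simple arithmetic and can be reproduced with a short sketch. It assumes, as the post does, that Cinebench 10 single-thread scores scale linearly with clock speed, that a desktop Zen 2 holds about 4.2GHz in games, and that only clock speed separates a desktop Zen 2 core from the console ones; the function and variable names are made up for illustration.

```python
# Cinebench 10 single-thread scores quoted above (Anandtech numbers).
A6_5200_SCORE = 1986    # Jaguar at 2.0 GHz
Q6600_SCORE = 2778
I5_2500K_SCORE = 5860


def scale_linear(score, from_ghz, to_ghz):
    """Estimate a score at a different clock, assuming linear clock scaling."""
    return score * to_ghz / from_ghz


# Last-gen console cores, scaled down from the 2.0 GHz A6-5200 result.
ps4 = scale_linear(A6_5200_SCORE, 2.0, 1.6)
xb1 = scale_linear(A6_5200_SCORE, 2.0, 1.75)

last_gen = {
    "Q6600 vs PS4": Q6600_SCORE / ps4,
    "Q6600 vs Xbox One": Q6600_SCORE / xb1,
    "2500K vs PS4": I5_2500K_SCORE / ps4,
    "2500K vs Xbox One": I5_2500K_SCORE / xb1,
}

# Next gen: same Zen 2 core on both sides, so compare clocks only (assumption).
ZEN2_PC_GHZ = 4.2
next_gen = {
    "Zen 2 vs XBX, SMT off (3.8 GHz)": ZEN2_PC_GHZ / 3.8,
    "Zen 2 vs XBX, SMT on (3.6 GHz)": ZEN2_PC_GHZ / 3.6,
    "Zen 2 vs PS5 (up to 3.5 GHz)": ZEN2_PC_GHZ / 3.5,
}

print(f"PS4 estimate: {ps4:.0f}, Xbox One estimate: {xb1:.0f}")
for label, ratio in {**last_gen, **next_gen}.items():
    print(f"{label}: {ratio:.2f}x")
```

The PS5 figure is a lower bound on the PC advantage, since 3.5GHz is the console's maximum clock.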

We can look at the following scenarios -

1) PS5/XBX CPUs are way over-specced and will be heavily underutilized even with games targeting 60 fps. No real issue, although high refresh gaming (above 60 fps) could be challenging.

2) They will be fully utilized at low thread counts because they are over-specced in MT performance (the scenario you mention), so games will not be as MT-focused in their design. This will be rather problematic for the PC, as current CPU cores (and even near-future ones) do not enjoy the same per-core advantage they had last generation.

3) Games will highly utilize the entire CPU. Again this is a problem, since there is no guarantee of the same scaling past 8c/16t, meaning just buying >8c/16t may not be the solution. But <8c/16t will not even meet the minimum.

It's a little early to be trying to get ahead of the next consoles, 6 months to a year after release we'll start seeing what advancements devs are leveraging and have a good idea what to beef up in order to stay well ahead of the consoles.

I get a kick out of the hype surrounding console releases these days. There's always some new alleged PC killer feature that ends up just barely matching current PC tech at best and then quickly left in the dust if devs actually utilize it. It's amazing what they get out of consoles these days but given the acceptable size and price limits they have to work with consoles are always going to be limited even with the economy of scale advantage they have.


defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

I feel you're making an assumption here that future console games will not fully leverage the CPUs placed in them. The problem wouldn't be if console CPU's are only running at something like <50% utilization to reach 60 fps. The concern would be is if they are end up running nearer to 100% utilization to just reach 30 fps (as they do now with this generation).

If the latter above is the case there is going to be real issue with whether or not available PC CPUs have enough of a brute force advantage to overcome and potential overhead differences which historically have existed.

No, I'm saying they won't do it on day one, but we could potentially see it in 3-5 years.

I'm assuming the current 6-thread console games will be our baseline, and those should see a little over a doubling in performance (without any other engine threading effort.)

Cutting-edge console games moved quickly from the 4 threads of the PS3/360 era to 6 threads on Jaguar because they were forced to by the almost non-existent per-core performance increase, but developers can ride that per-core increase on Zen 2.

The "console overhead" advantage on modern systems is a figment of your imagination: there's a reason the PS4 reserved two fucking cores for the rest of its multithreaded OS image, with the capability to record in real time. Modern consoles have the exact same overhead as Windows gaming without a live stream does.


Ah, the 'PCs are dead, long live the console' thing. The way it almost always plays out is that PCs are already ahead of consoles, consoles catch up to a modern mid-range PC with a new release, and that power boost pushes games forward. PCs benefit, not hurt. Every time.

Then you have the arguments about people using older GPUs or not having SSDs, again, I don't know anyone that doesn't have an SSD in their system. Aged GPUs sure, but in my experience that is always because they can do what they want, at the resolution and fps they want, with the old GPU so have no need to upgrade. As soon as that becomes an issue people will pick up new GPUs.


Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,004

Digital Foundry is not a good source for PC anything. I would not be listening to their advice. Most of their PC videos are how you can get the "console experience." No, thank you. I am a primary PC gamer precisely because I DON'T want the "console experience."

defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

Digital Foundry is not a good source for PC anything. I would not be listening to their advice. Most of their PC videos are how you can get the "console experience." No, thank you. I am a primary PC gamer precisely because I DON'T want the "console experience."

I dunno man, the recommendation for 6 cores with an upgrade path to 8 is solid, as is the recommendation to use whatever SSD you have now but keep an M.2 upgrade path (if things magically change for game load time requirements).

And the emphasis on RTX video cards for a build TODAY is a solid one - if you're spending over $300, it's pointless to buy the 5700 XT.

Digital Foundry is not a good source for PC anything. I would not be listening to their advice. Most of their PC videos are how you can get the "console experience." No, thank you. I am a primary PC gamer precisely because I DON'T want the "console experience."

Digital Foundry is an Excellent source for PC deep dives/architecture...DF is the best in the business when it comes to both console and PC architecture...you seem to hate most hardware/gaming sites...just buying every new Ti card or 10900K CPU is not how most people buy hardware

I have to question anybody's 'deep dive' knowledge that's exclusively from YouTube, especially into things like architecture. That kind of knowledge is best in writing, complete with technical specs and graphs that you can review without pausing some video.

Sure, if you're taking apart an engine or framing a gabled dormer, a YouTube video can be excellent help given its ability to present three-dimensional information.


defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

I have to question anybody's 'deep dive' knowledge that's exclusively from YouTube, especially into things like architecture. That kind of knowledge is best in writing, complete with technical specs and graphs that you can review without pausing some video.

Sure, if you're taking apart an engine or framing a gabled dormer, a YouTube video can be excellent help given its ability to present three-dimensional information.

Seriously, there are a lot worse YouTube channels to get your bitch on around here.

We haven't had the option of pretending YouTube doesn't exist since half of my daily tech destinations dried up in the last five years. Get off your high horse or leave this thread.


ree much?

I'll hang around wherever I please too; you're welcome to just ignore me.

Hmm. I'm going to guess this console generation will be much like every other one. It looks like some amazing leap because very few games bother reaching for the limits of PC gaming capability. So we are all shocked when we see the first gen of games allowed to stretch their legs a bit because the consoles stopped holding the state of the art back quite so much. It LOOKS like they beat current PCs because of exclusivity contracts. In reality the consoles will be pricey, but a good value compared to the maybe slightly above mid-tier PC they match in power. Then the PC uber systems push the possible while little to nothing really takes much advantage of it. Rinse and repeat.

Well.. maybe not so much this time. This time there are some extra factors.

On the console side you will have a lot of people trying to push 4K, and I suspect 4K will still be less than optimal. Which means a lot of the market may be playing at 1080p. And this generation of console is probably going to be pretty damn good at that. From a market perspective, a LOT of PC gaming is people playing 1080p really nicely without uber rigs. This is bread and butter income for the industry, and consoles may significantly undercut this. I'd expect the PC side of things to double down on the 1080p gaming laptop due to this.

On the PC side of things, outperforming the console is going to take some cash. Probably more cash than the console costs just for a video card. Then of course to really do it, you need a 4K monitor. If you aren't already there, this go-round is going to be potentially very pricey to play the PC master race card. Or you can just keep sort of a notch above mid level and be about the same for a bit, then wait for the next iteration of a notch above mid level and be ahead of the console curve well before this gen is winding down to be replaced.

Then there is VR. Someone might do something cool that is like pushing 4K per eyeball and needs 24 gigs of video RAM in a $2000 card to drive it. But probably not.

My guess is you may see some PC customers permanently shift away from the endless cycle of gaming rigs, and this will change the price to performance costs negatively.


Hmm. I'm going to guess this console generation will be much like every other one. It looks like some amazing leap because very few games bother reaching for the limits of PC gaming capability. So we are all shocked when we see the first gen of games allowed to stretch their legs a bit because the consoles stopped holding the state of the art back quite so much. It LOOKS like they beat current PCs because of exclusivity contracts. In reality the consoles will be pricey, but a good value compared to the maybe slightly above mid-tier PC they match in power. Then the PC uber systems push the possible while little to nothing really takes much advantage of it. Rinse and repeat.

Well.. maybe not so much this time. This time there are some extra factors.

On the console side you will have a lot of people trying to push 4K, and I suspect 4K will still be less than optimal. Which means a lot of the market may be playing at 1080p. And this generation of console is probably going to be pretty damn good at that. From a market perspective, a LOT of PC gaming is people playing 1080p really nicely without uber rigs. This is bread and butter income for the industry, and consoles may significantly undercut this. I'd expect the PC side of things to double down on the 1080p gaming laptop due to this.

On the PC side of things, outperforming the console is going to take some cash. Probably more cash than the console costs just for a video card. Then of course to really do it, you need a 4k monitor. If you aren't already there, this go round is going to be potentially very pricey to play the pc master race card. Or you can just keep sort of a notch above mid level and be about the same for a bit and wait for the next iteration of a notch above mid level and be ahead of the console curve well before this gen is winding down to be replaced.

Then there is VR. Someone might do something cool that is like pushing 4k per eyeball and needs 24 gigs of video ram in a $2000 card to drive it. But probably not.

My guess is you may see some PC customers permanently shift away from the endless cycle of gaming rigs and this will change the price to performance costs negatively.

Everything is an endless cycle. Only death or life circumstances will shift people's purchasing habits, surely not consoles.

I have to question anybody's 'deep dive' knowledge that's exclusively from YouTube, especially into things like architecture. That kind of knowledge is best in writing, complete with technical specs and graphs that you can review without pausing some video.

Sure, if you're taking apart an engine or framing a gabled dormer, a YouTube video can be excellent help given its ability to present three-dimensional information.

They're associated with Eurogamer, I believe, and many of their deep dives also have articles. These guys also have a habit of talking to developers about the engines. They have some extremely in-depth videos on the Decima Engine (from Guerrilla), and they talk to Guerrilla about the engine as well.

Just because someone uses YouTube to deliver their content doesn't discount their knowledge.

I say this also as someone who greatly prefers to read an article vs watch a video, but DF are good and often pause their videos themselves when relevant portions of the image are being shown (or do slow motion playback).

plus that video/article is not trying to say that consoles are better etc...it's literally trying to explain how the PC has created the new tech in the consoles...they go on to list the current 5+ most popular GPU's/CPU's etc according to the Steam Hardware survey and use that as a baseline to explain how the new consoles will move things forward exponentially and how those people can 'future-proof' their systems...the vast majority of PC gamers are not people with 2080 Ti cards and 10 core 10900K CPU's...in the next few years developers will now be able to more effectively use things like NVMe, SSD, ray-tracing etc because of the next-gen consoles...without consoles PC games would stagnate

PC creates the hardware but software lags behind until the consoles catch up...some of the people who upgrade every cycle for no rhyme or reason are so out of touch with technology and just think that buying the highest end GPU/CPU as soon as they are released is the answer...that is literally insane and shows they have no clue about computer hardware (and then they claim that they are not spending a lot of $$ because they sold their old parts and got back 80% of what they paid lol)...people need to educate themselves and places like Digital Foundry are a great resource


Comixbooks

Fully [H]

- Joined

- Jun 7, 2008

- Messages

- 22,004

Maximum PC said don't get caught up in the upgrade treadmill. Future proofing doesn't exist. Just look at the PC I bought in 1996: it's in the junkyard now. 10 years from now almost any PC on the market will be a piece of junk for games. Who knows what the gaming market will be like in a few years.


I'm still using a CPU from 2010- 6 core i7 980X 3.6 GHz (Turbo)...I upgrade my GPU more frequently (as well as SSD) but CPU upgrades are not needed as much...I was ahead of the curve as far as 6 cores which is still an excellent CPU even today...never ran into any issues as far as newer CPU instruction sets in games etc...but all good things come to an end and I am planning on upgrading my CPU/memory/mobo this summer to AMD Zen 2 (I doubt I can wait until Zen 3 in October)

CrimsonKnight13

Lord Stabington of [H]ard|Fortress

- Joined

- Jan 8, 2008

- Messages

- 8,434

I'm still using a CPU from 2010- 6 core i7 980X 3.6 GHz (Turbo)...I upgrade my GPU more frequently (as well as SSD) but CPU upgrades are not needed as much...I was ahead of the curve as far as 6 cores which is still an excellent CPU even today...never ran into any issues as far as newer CPU instruction sets in games etc...but all good things come to an end and I am planning on upgrading my CPU/memory/mobo this summer to AMD Zen 2 (I doubt I can wait until Zen 3 in October)

Some newer games are using AVX & other newer instruction sets. I'm surprised you haven't hit walls with any that do.


which games?

CrimsonKnight13

Lord Stabington of [H]ard|Fortress

- Joined

- Jan 8, 2008

- Messages

- 8,434

which games?

From what I've seen on a quick view over, AC Odyssey & Overwatch are 2 AAA titles. I've not seen an extensive list for AVX, AVX2, & AVX-512 other than software & OSes.

https://en.wikipedia.org/wiki/Advanced_Vector_Extensions


AC: Odyssey doesn't require it as I have that installed...I don't think any game makes AVX extensions mandatory

defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

AC: Odyssey doesn't require it as I have that installed...I don't think any game makes AVX extensions mandatory

Right, you're at a performance disadvantage, but it will still run.
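"Slower but still runs" is what you get when software picks a code path at runtime based on what the CPU reports. Here is a minimal sketch of that idea; it is not how any particular game does it, and `pick_code_path` and the example flag sets are hypothetical.

```python
def pick_code_path(cpu_flags):
    """Return the widest SIMD path the CPU supports, falling back to SSE2."""
    # Check the newest, widest instruction sets first.
    for flag in ("avx512f", "avx2", "avx"):
        if flag in cpu_flags:
            return flag
    # SSE2 is part of the x86-64 baseline, so this path always works.
    return "sse2"


# Hypothetical flag sets for illustration only.
pre_avx_cpu = {"sse2", "sse4_1", "sse4_2"}
newer_cpu = {"sse2", "sse4_1", "sse4_2", "avx", "avx2"}

print(pick_code_path(pre_avx_cpu))
print(pick_code_path(newer_cpu))
```

A game built this way only refuses to start on an older chip if the developer ships no fallback path at all.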

We all have to upgrade eventually, but most of the time I can ride a CPU upgrade for two GPU generations. The console owners will never have that luxury.
