erek

Hook it up !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

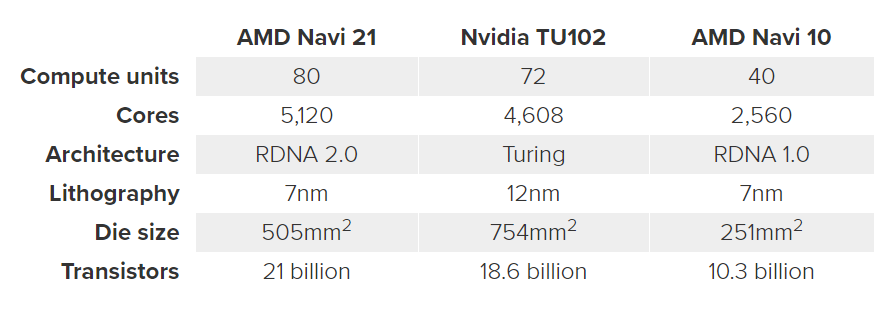

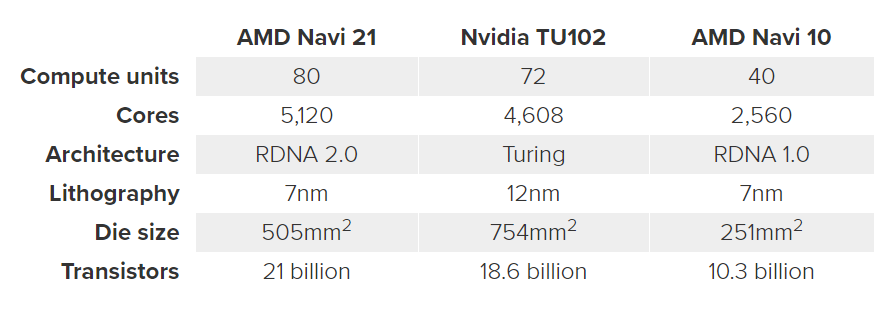

"And with those 80 CUs you’re looking at 5,120 RDNA 2.0 cores. Quite how AMD is managing the hardware-accelerated ray tracing we still don’t know. It will surely be designed to support Microsoft’s DirectX Raytracing API, given that is what will likely power the Xbox Series X’s approach to the latest lighting magic, but how that is manifested in the GPU itself is still up in the air."

https://translate.google.com/transl...phell.com/thread-2181303-1-1.html&prev=search
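The 5,120 figure in the quote is just the CU count multiplied by the stream processors in each CU. Here is a minimal sketch that assumes 64 stream processors per CU. That is AMD's per-CU count for RDNA (and GCN), and the rumored RDNA 2 part is assumed to keep it. `shader_count` is a hypothetical helper used only to show the arithmetic:

```python
# Assumption: each RDNA 2 compute unit (CU) holds 64 stream processors,
# the same per-CU count AMD used for RDNA 1 and GCN.
STREAM_PROCESSORS_PER_CU = 64


def shader_count(compute_units: int, per_cu: int = STREAM_PROCESSORS_PER_CU) -> int:
    """Hypothetical helper: total stream processors ("cores") for a given CU count."""
    return compute_units * per_cu


# The rumored 80 CU part works out to 80 * 64 = 5120 stream processors.
print(shader_count(80))
```

If the leak is off on the CU count, the same multiplication applies. For example, a 72 CU cut-down part would come out to 4,608.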
