Thunderdolt

Gawd · Joined: Oct 23, 2018 · Messages: 1,015

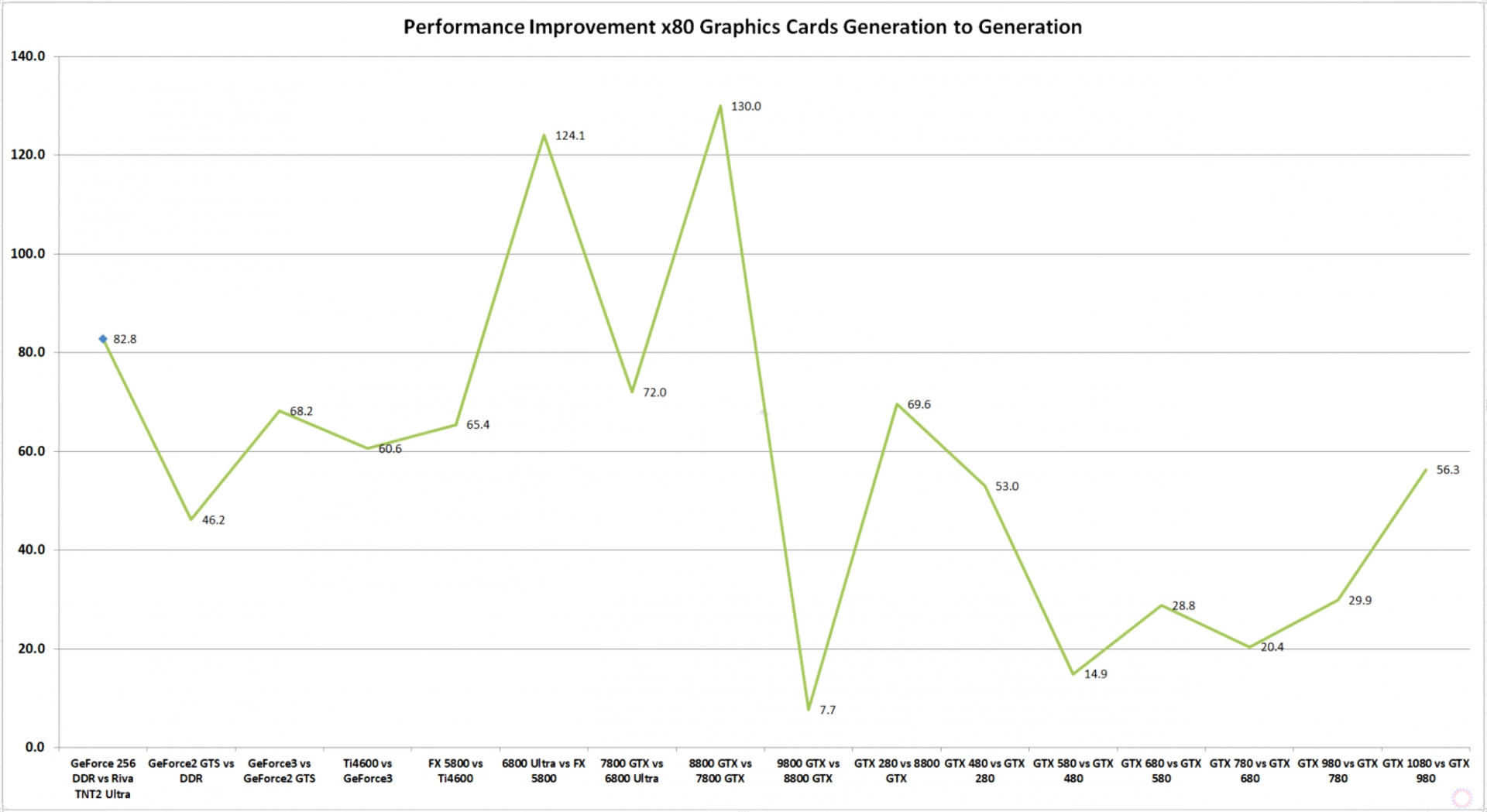

> Maxwell to Pascal saw jumps exceeding 50% from the 980 Ti to the 1080 Ti, pretty much across the board

That isn't really how it happened, though. The sequence was 980 Ti -> 1080 -> 1080 Ti, and no single step in that sequence was a 50% jump.
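The distinction is just compounding: two smaller generational steps can multiply out to more than 50% end to end without either step reaching 50% on its own. A minimal Python sketch of that arithmetic, using purely hypothetical per-step gains (25% each, not measured numbers for these cards):

```python
from math import prod


def compound_gain(step_gains):
    """Return the end-to-end fractional gain from a chain of per-step gains.

    Each gain is a fraction, e.g. 0.25 means +25% over the previous card.
    Gains multiply: (1 + g1) * (1 + g2) * ... - 1.
    """
    return prod(1 + g for g in step_gains) - 1


# Hypothetical per-step uplifts, chosen only to illustrate the arithmetic.
steps = {"980 Ti -> 1080": 0.25, "1080 -> 1080 Ti": 0.25}

total = compound_gain(steps.values())
largest_step = max(steps.values())

print(f"largest single step: {largest_step:.0%}")
print(f"980 Ti -> 1080 Ti end to end: {total:.2%}")
```

With these made-up inputs the largest single step is 25%, while the 980 Ti -> 1080 Ti total works out to 56.25%.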
