Dayaks, [H]F Junkie (joined Feb 22, 2012; 9,773 messages)

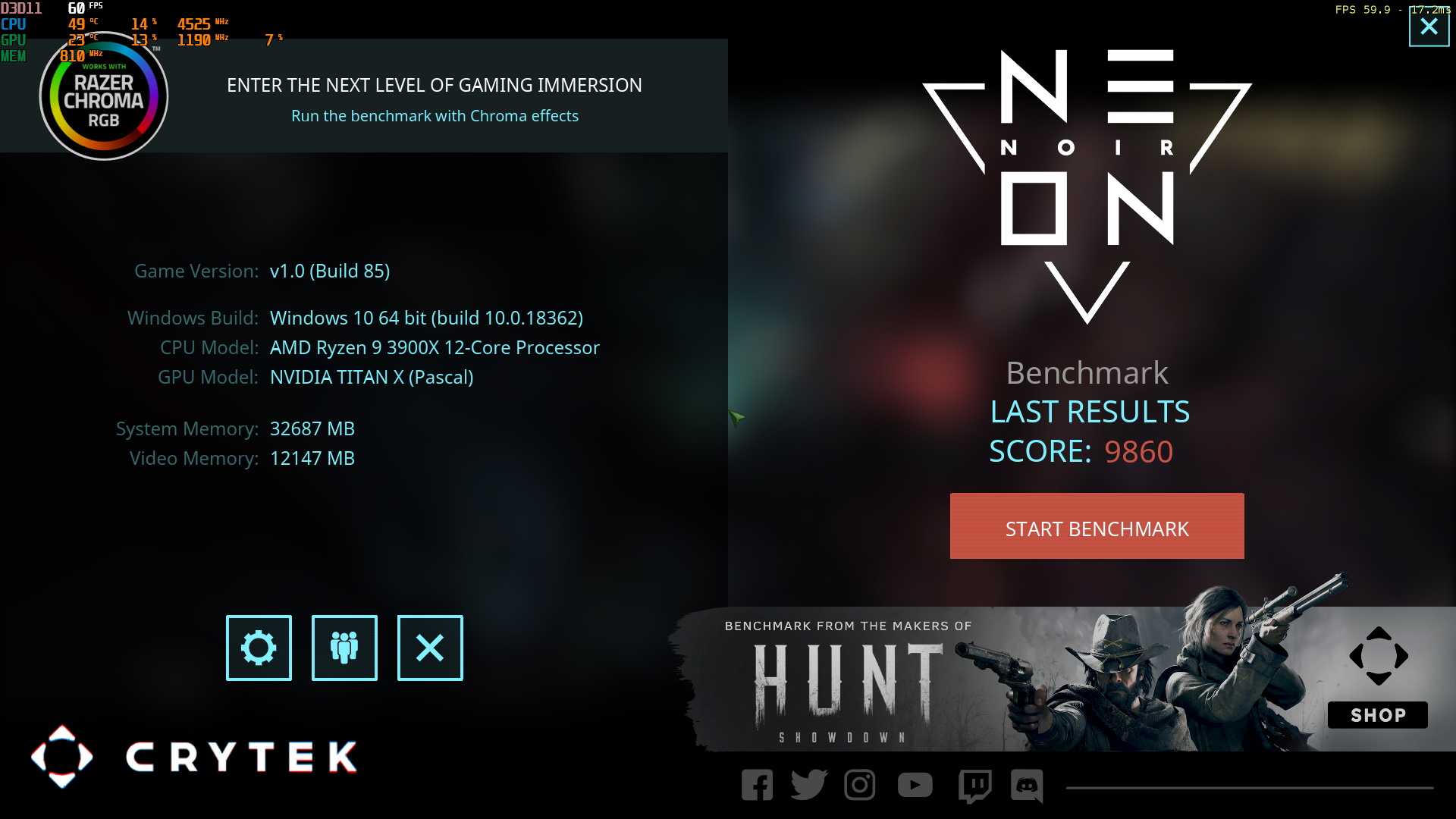

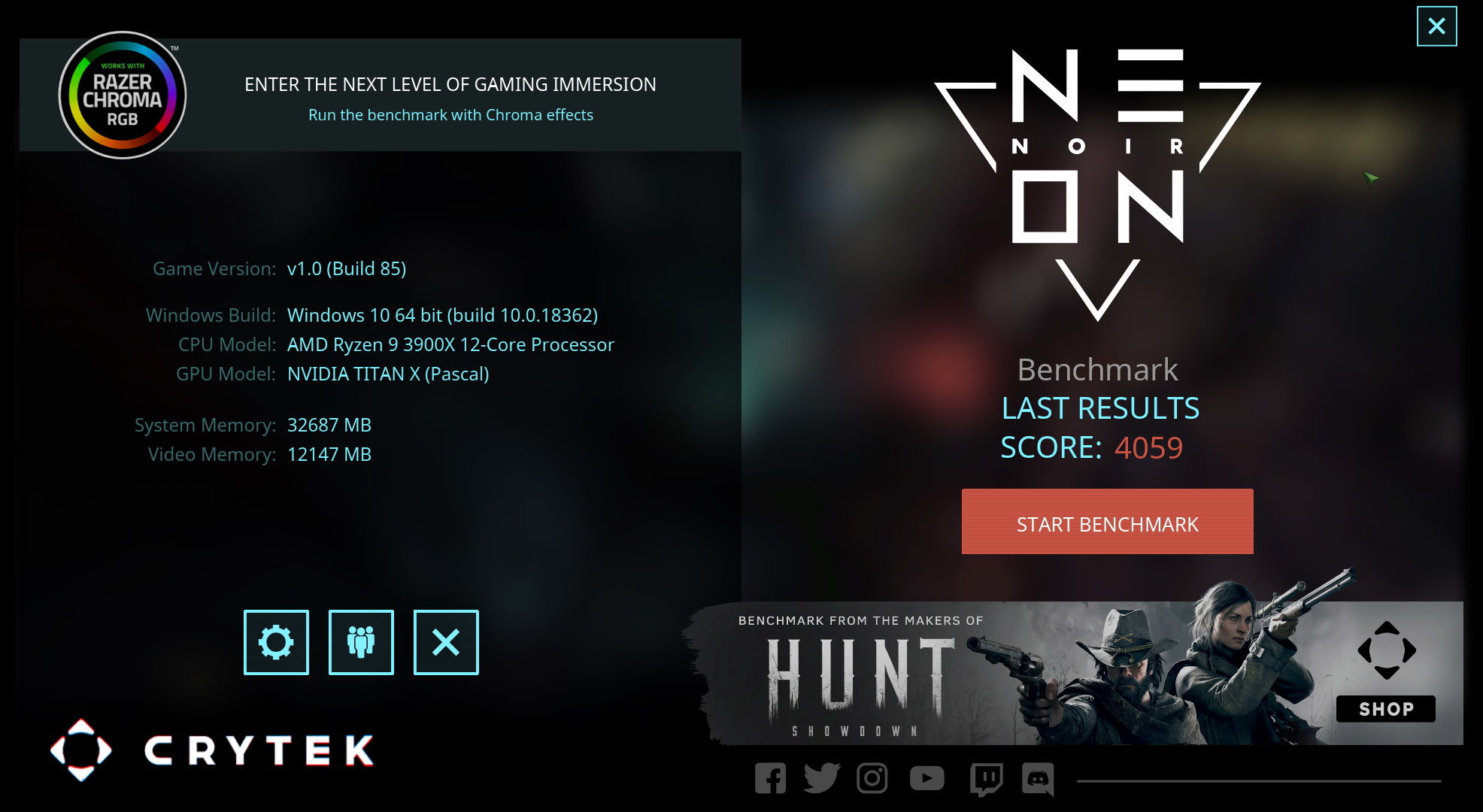

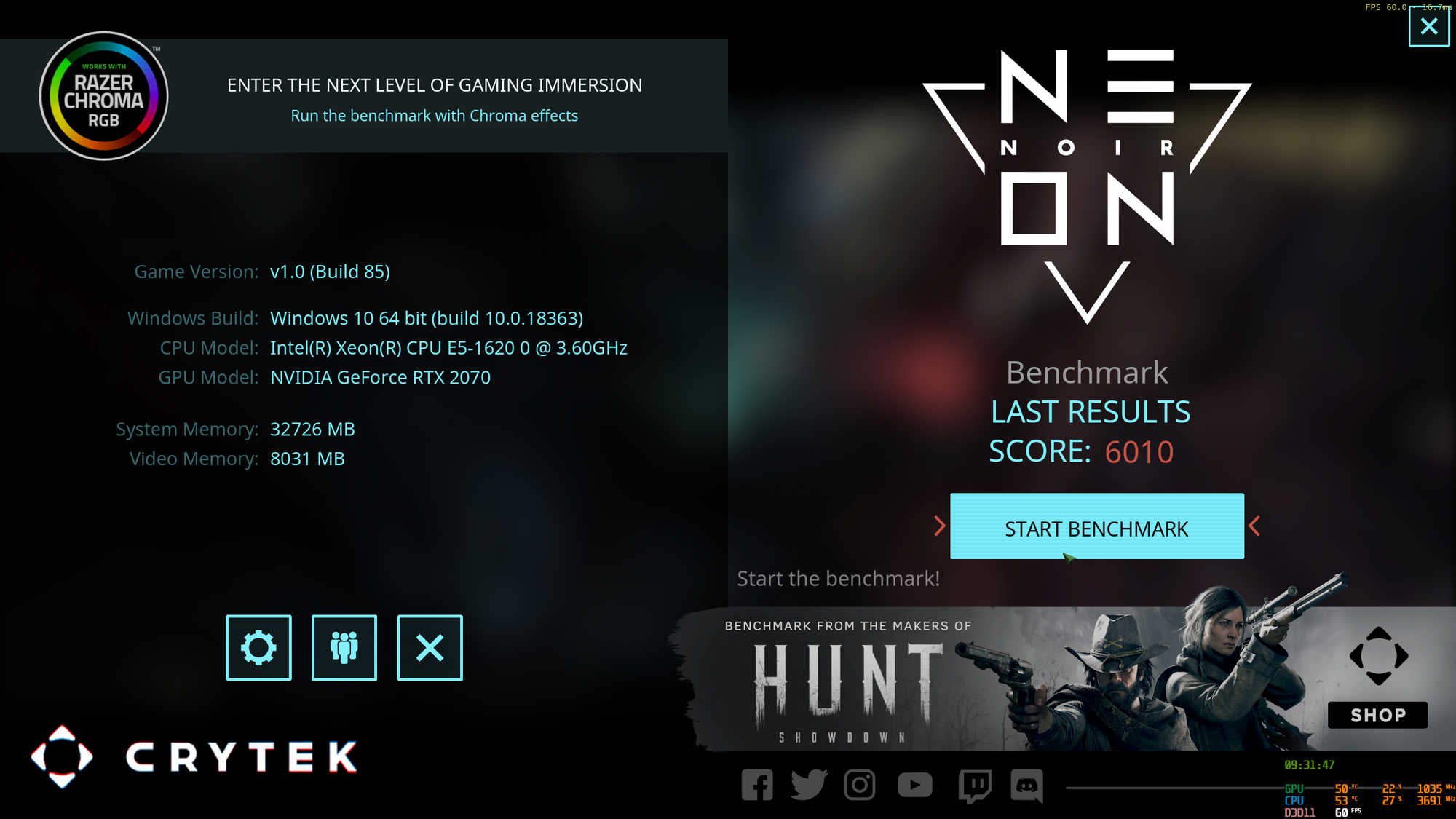

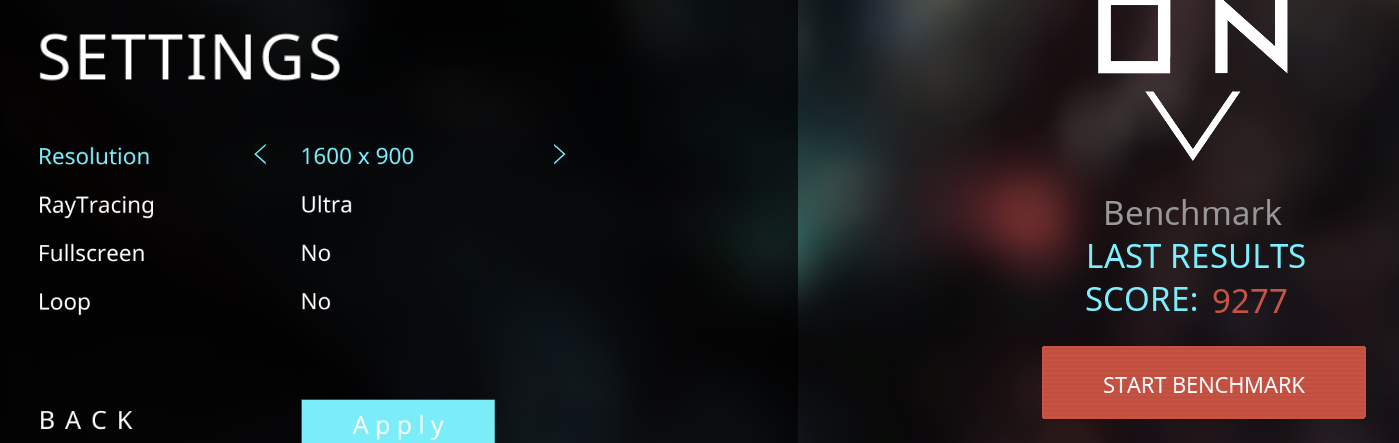

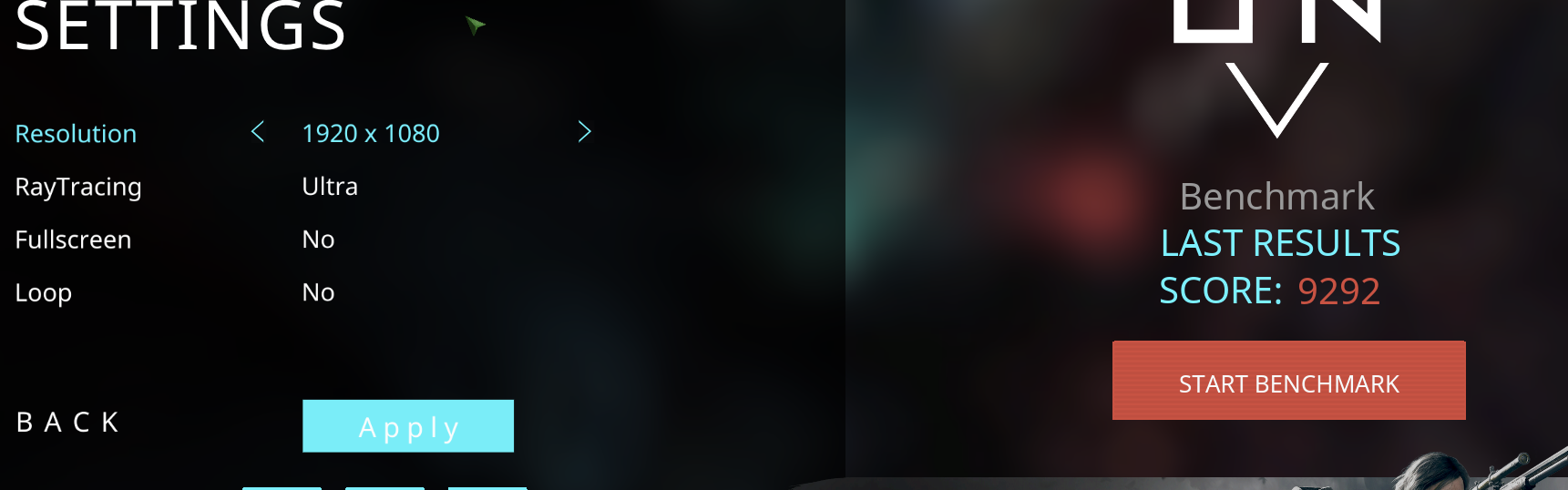

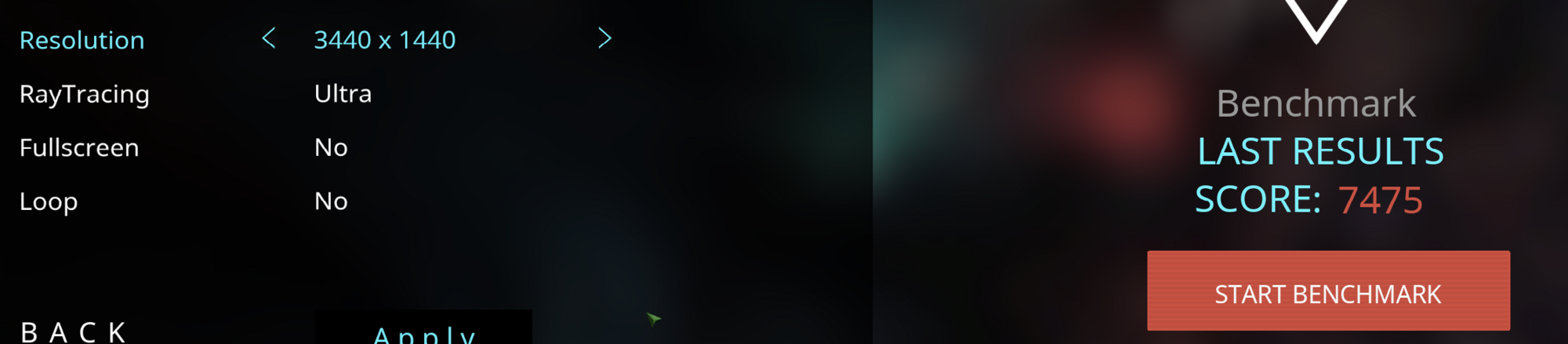

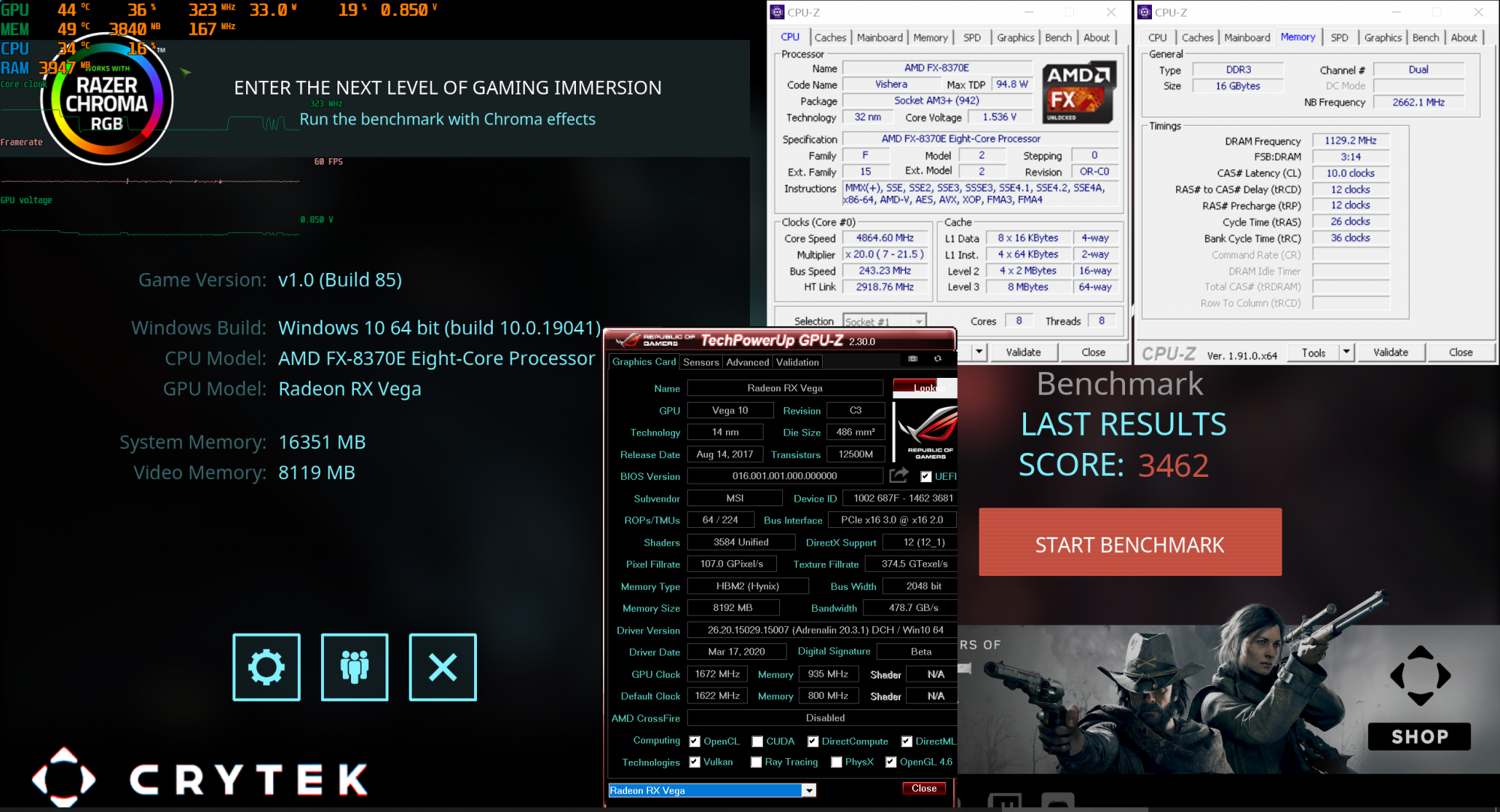

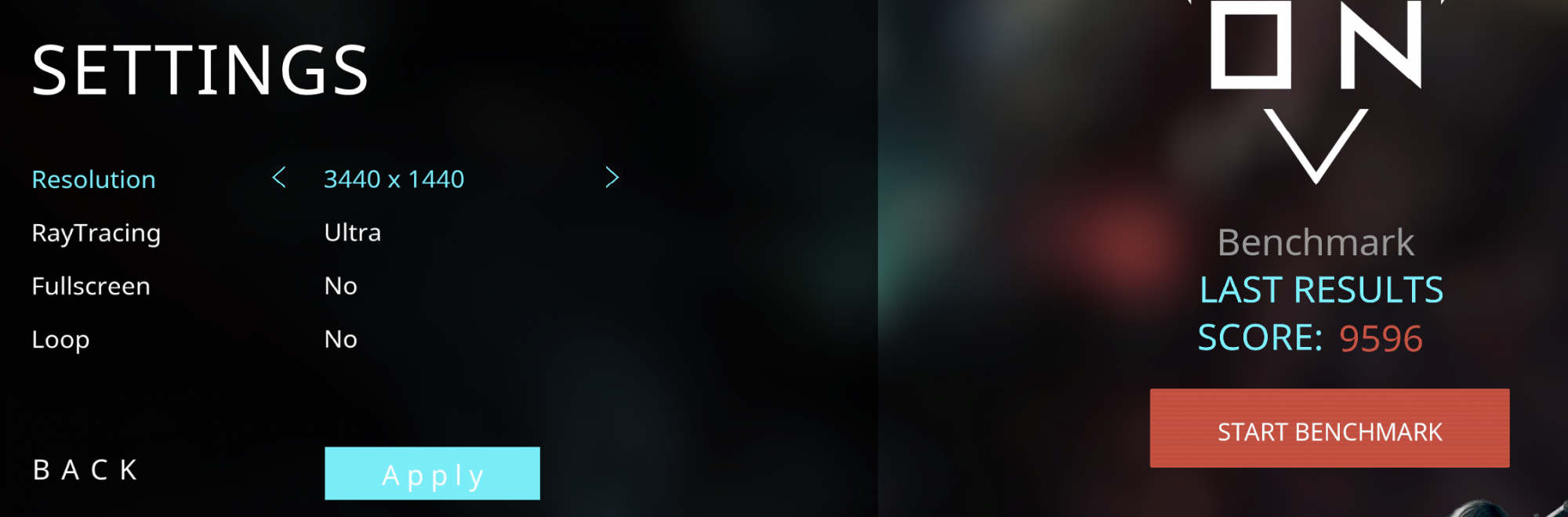

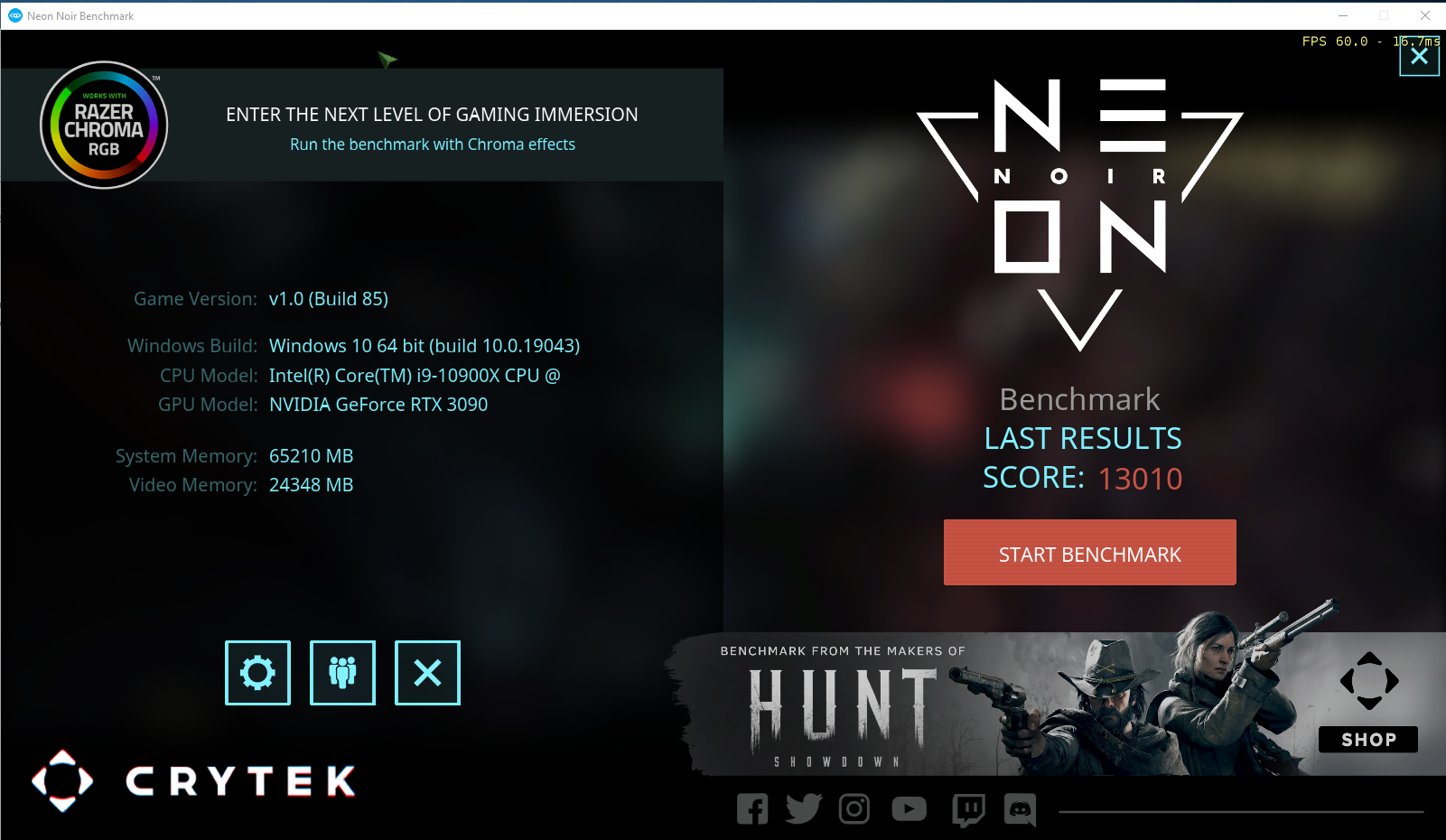

3440 x 1440 gets me 3,844, so just under half of what I get at 1920 x 1080.

It's a nice looking benchmark if nothing else. Got some nice screen grabs out of it for wallpapers.

Since 1080p is about 2 megapixels and 3440x1440 is about 5, that sounds about right. It also sounds like it scaled nicely.
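A quick sketch of the pixel-count arithmetic behind that estimate. It assumes, as a simplification, that the benchmark score is inversely proportional to the number of pixels rendered (purely fill-rate bound). Under that assumption the ultrawide score would land at roughly 0.42x the 1080p score, so a result of "just under half" means the card lost a bit less than the raw pixel count predicts. The names `megapixels` and `expected_score_ratio` are just illustrative helpers, not part of the benchmark.

```python
# Resolutions mentioned in the thread: (width, height) in pixels.
RESOLUTIONS = {
    "1920x1080": (1920, 1080),
    "3440x1440": (3440, 1440),
}


def megapixels(width: int, height: int) -> float:
    """Return the pixel count in millions."""
    return width * height / 1_000_000


def expected_score_ratio(low: tuple[int, int], high: tuple[int, int]) -> float:
    """Predicted score ratio (high-res / low-res), ASSUMING the score is
    inversely proportional to pixel count. Real GPU scaling is rarely this clean."""
    return (low[0] * low[1]) / (high[0] * high[1])


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {megapixels(w, h):.2f} MP")

ratio = expected_score_ratio(RESOLUTIONS["1920x1080"], RESOLUTIONS["3440x1440"])
print(f"Pixel-count prediction: {ratio:.2f}x the 1080p score")
```

This prints 2.07 MP and 4.95 MP for the two resolutions and a predicted ratio of about 0.42.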

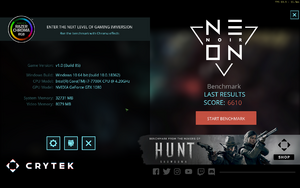

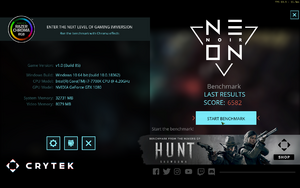

I have to try this benchmark.

Someone earlier said the reflections looked blurry. Personally, I think the RTX reflections in games so far are too good. I'd rather have more FPS and blurrier reflections (probably closer to real life anyway...).
