Of course one core's pegged; you have to have a master thread. For comparison, here's Call of Duty MW2:

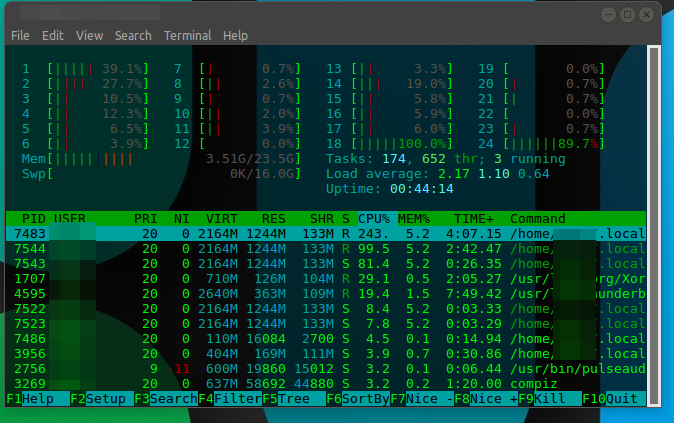

That Doom 2016 example is from a dual Xeon X5675 system from 2010. In that particular configuration, running at 1920 x 1200 to keep it fairly CPU bound, it was pulling between 160 and 180 FPS under Linux using Proton. Keep in mind, that's two 3.06 GHz processors, not even 4 GHz.
