jbltecnicspro

[H]F Junkie

- Joined: Aug 18, 2006
- Messages: 9,526

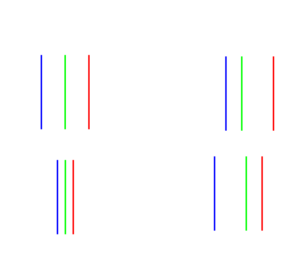

> You need all three channels when performing the VMC and HMC adjustments, so cyan and yellow simply won't give you the information you need to perform these adjustments. jbl's info in his guide is incorrect about what the VMC and HMC sliders do.

I don't have it with me (I'll have to dig up my document), but what exactly was wrong with it? I can revise it if need be.

If I recall correctly, HMC moves magenta (red and blue together) relative to green along the horizontal axis, so if you have a crosshatch pattern up and you move the slider around, the green and magenta vertical bars separate. VMC does the same thing on the vertical axis, separating the horizontal bars. Is that not the case?

EDIT: I reread your original explanation, and I think I know what the problem is. My document probably suggested that those sliders also move the green gun, when in fact green stays stationary while they are adjusted.
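For what it's worth, here is a rough toy model of the behavior as I now understand it, just to make the idea concrete. Everything in it is an assumption for illustration: the `crosshatch` and `apply_magenta_offset` names are made up, and `hmc`/`vmc` are plain pixel offsets, not real slider units. Red and blue shift together while green stays put.

```python
# Toy model only: a "pixel" is an (r, g, b) tuple and the screen is a list of rows.
# hmc/vmc are hypothetical integer offsets in pixels, not actual slider values.

def crosshatch(width, height, step):
    """White grid lines on black, one line every `step` pixels."""
    return [
        [(1, 1, 1) if (x % step == 0 or y % step == 0) else (0, 0, 0)
         for x in range(width)]
        for y in range(height)
    ]


def apply_magenta_offset(image, hmc=0, vmc=0):
    """Shift red and blue together by (hmc, vmc); leave green where it is."""
    height, width = len(image), len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Source position that red/blue for this pixel are pulled from.
            sx, sy = x - hmc, y - vmc
            if 0 <= sx < width and 0 <= sy < height:
                r, _, b = image[sy][sx]
            else:
                r, b = 0, 0  # nothing shifted in from off-screen
            g = image[y][x][1]  # green is never moved
            row.append((r, g, b))
        out.append(row)
    return out


pattern = crosshatch(9, 9, 4)
shifted = apply_magenta_offset(pattern, hmc=1)
# On a row between the horizontal lines, the white vertical bar at x=4
# splits into a green bar at x=4 and a magenta bar at x=5.
print(shifted[2][4], shifted[2][5])
```

With both offsets at zero the pattern comes back unchanged, which is the "converged" case where the bars look white.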
