MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,457

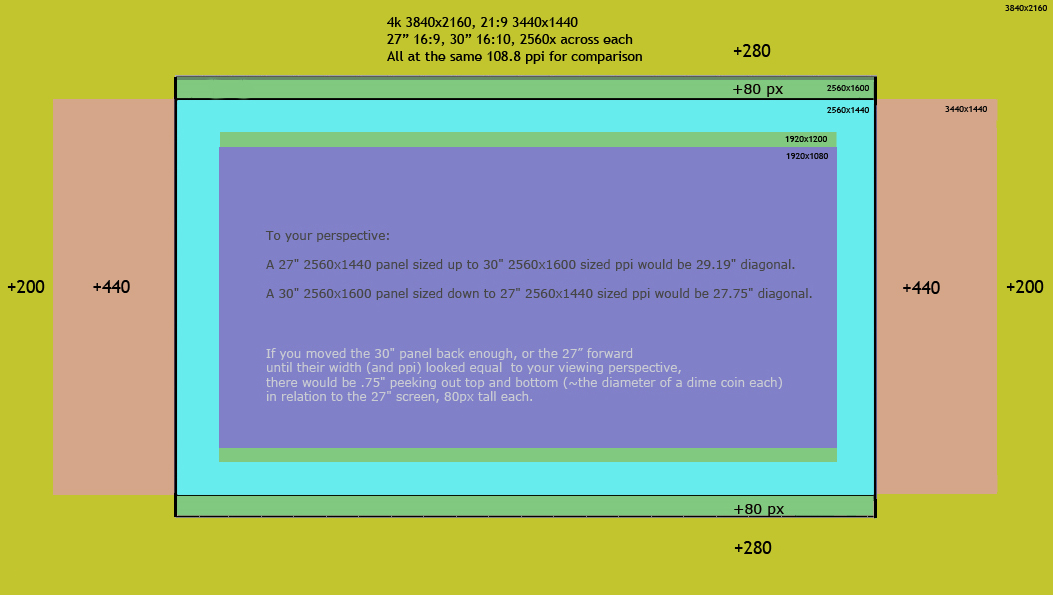

PRO/CON...Resolution: The resolution gives a much better pixel density but drives your frame rate lower, which either gets nothing out of the high-Hz capability or requires you to turn your graphics settings down considerably in the more demanding games to reach a 100fps average or more for 120Hz+ benefits.

Well, seeing how you value 100fps so much, this monitor is completely pointless to buy. Not even a 2080 Ti will get you to that frame rate in many games, even WITH reduced settings. Only a select few like Wolfenstein will actually run at 100+fps at 4K. A 2080 Ti is really only good for targeting 60Hz in the vast majority of AAA titles, so good luck with a 1080 Ti.
