Regular desktop use just doesn't have enough motion for a high refresh rate to make a practical difference, unless you are the type to jiggle your windows around just to be in awe of how smooth they are.

Which is something you will do a couple of times when you get your first monitor that can do over 100Hz.


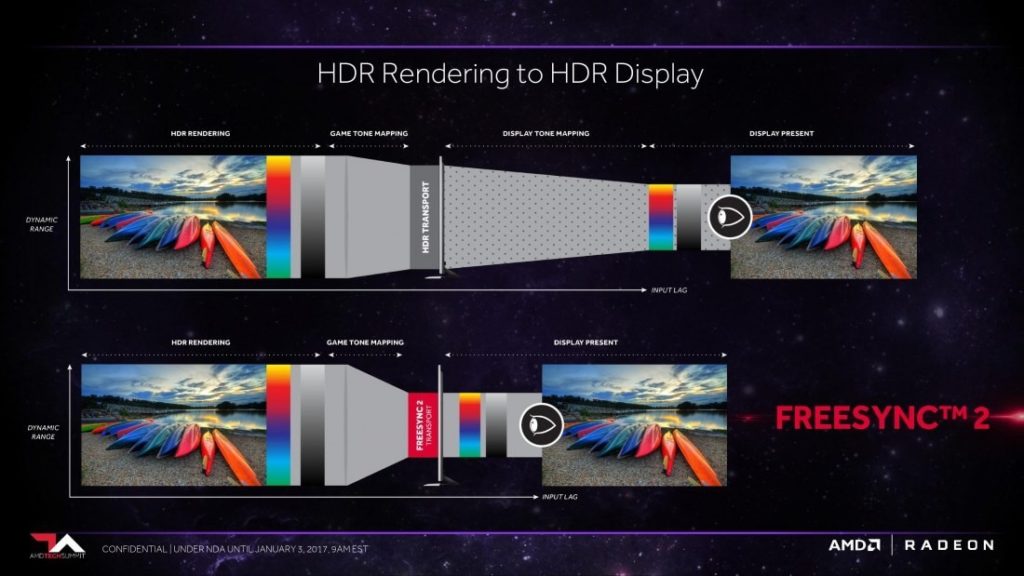

![AMD Radeon FreeSync 2 HDR logo](https://cdn.hardforum.com/data/attachment-files/2019/08/230804_156955-amd-radeon-freesync2-hdr-logo-dark-1260x500_01.png)