Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,860

Not many "AAA games" (I've been watching too much Jim Sterling on YT) support SLI anyway. I suppose it's still good if you're into overclocking (which I'm not).

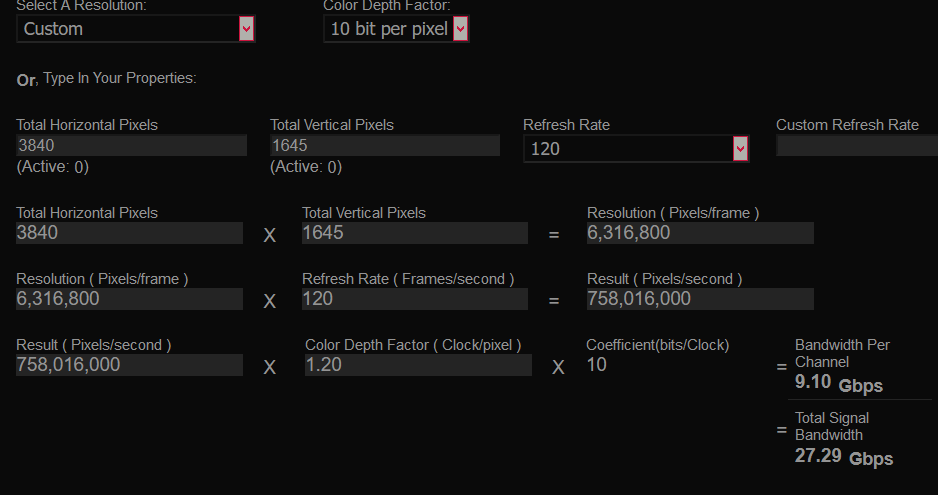

That's why these displays are future investments for me. Yes, I want the adaptive sync features with my 2080 Ti, but it might pay to wait for a full 10-bit HDR 120Hz display with those features.

That said, I'm really not happy using my 2015 Samsung 48" TV as my everyday display anymore. I really want a decent PC-focused 4K display with adaptive sync.

Yeah, I've done multi-GPU three times. The first was way back in the day with Voodoo2 cards, and that actually worked fairly well.

Then I did it again in 2010, when I was an early adopter of the 30" 2560x1600 screens and no single GPU on the market was fast enough to render that resolution well at 60fps. I ran dual triple-slot Asus DirectCU II Radeon 6970s. Sure, average frame rates went up, but the experience was miserable: it was buggy, games crashed or were unstable, and there was stutter and frame skipping. Meanwhile the all-important minimum frame rates pretty much stayed put or improved only a little. So it essentially became a Toyota Supra dyno queen: lots of performance when it wasn't really needed, and no additional performance when it really mattered, during minimum fps conditions.

I upgraded to a single 7970 as soon as it launched, and it was a clear improvement despite being only 20% faster than each of the 6970s. I swore I would never do multi-GPU again.

Then 2015 happened. I was an early 4K adopter, using my current Samsung TV as a screen. No single GPU was fast enough, so I decided to try dual 980 Tis. Maybe Nvidia did it better than AMD? Nope. All the same problems. As soon as the Pascal Titan X launched, I jumped on it.

So my take is that multi-GPU has never been good in the modern era. It has always been a flawed solution. Today far fewer titles support it, and that's because it sucks. The experience is a total downgrade from any single-GPU solution, and has been since SLI actually stood for Scan-Line Interleave.
