IdiotInCharge (NVIDIA SHILL), joined Jun 13, 2003, 14,675 messages

To do what exactly?

To support any point you think you are going to make, anywhere.

To do what exactly ?

I posted an excerpt from him endorsing Nvidia. It does not mean anything if I get it from another source, especially since all of them signed a 5-year NDA with Nvidia ...

A bad source is a bad source, even if it sometimes gets things right. A stopped clock is correct twice a day, but I wouldn't use it to tell time.

Adored TV is essentially second-rate forum speculation, except made into clickbait videos for money. It's garbage.

There are a few high quality YT channels, like Gamers Nexus and Hardware Unboxed, that do comprehensive detailed reviews and analysis (AKA real work), and both do their reviews in text, so you don't even have to waste time on videos.

Meh, everyone seems to be looking for something to praise Nvidia on so they don't cheese off leather jacket man, so they've been talking up a $350 (now $400+) 1080p video card in the 2060. At least on price/perf the 2070 Super is tolerable, if still historically overpriced.

Meh, everyone seems to be looking for something to praise Nvidia on so they don't cheese off leather jacket man

The market is what it is right now. BOTH AMD and Nvidia are playing the same pricing game now. In that context it does make the 2060 Super a better "value" than the 2060 non-Super. There's nothing "looking to praise" about it. It's the reality of the current GPU market. Things are never going back to where they were before. This has happened multiple times in the GPU market over the past decade, and every single time we adapt to the new prices because the old prices are never coming back.

And you've bought into that mindset apparently. Resign yourself, pay up and bite odditory's pillow there I guess. And yes, I agree AMD is doing the same thing and at this moment Navi does not look like a good purchase. We'll see on Sunday for sure.

And by "mindset" you mean reality, right? No one likes the prices, but they're not going to change. AMD and Nvidia aren't going to suddenly drop to pre-mining prices again. Unless Intel decides to be the "value" brand (I highly doubt it) and knocks it out of the park from day one this is where we're at.

If no one buys, then prices drop. It is always a buyer’s market. You seem to think Jensen’s jackets pay for themselves.

Meh, everyone seems to be looking for something to praise Nvidia on so they don't cheese off leather jacket man, so they've been talking up a $350 (now $400+) 1080p video card in the 2060. At least on price/perf the 2070 Super is tolerable, if still historically overpriced.

It’s only overpriced if you ignore the RTX portion of the card... which I wouldn’t blame someone for doing.

RTX in its current iteration is useless. Next gen maybe.

I really enjoy it in the games that use it. It just depends if you actually play those games.

Man, I can’t tell a difference whether it’s on or not lol. It all looks the same to me.

The envy of companies making a profit is strong in this thread...

I might be biased, but if Intel or Nvidia make a profit it is evil. When AMD makes a profit it's for the good of humankind.

At least that's the gist I get when surfing the web.

Meh, everyone seems to be looking for something to praise Nvidia on so they don't cheese off leather jacket man, so they've been talking up a $350 (now $400+) 1080p video card in the 2060. At least on price/perf the 2070 Super is tolerable, if still historically overpriced.

That is almost signature worthy....because I get the same kind of "feeling"

The issue for a lot of us is that we already have 1080 Ti performance, and everything below $998 is not really an upgrade. The 2080 Ti, at least in my case, is a laughable card at the going rate. If there were some decent $999 ones (not the EVGA Black) but with great coolers etc., that would at least give an option for significantly better performance. Just have to see what the 2080 Super brings to the table, but if it lands between a 2080 and a 2080 Ti, why bother if one already has a 1080 Ti? This whole generation has been meh to the max.

It looks like you are saying you don't like high-quality, reputable sites because they don't tell you what you want to hear?

There is a huge problem with this. It's called confirmation bias. You don't learn anything this way, you just get set in your bubble avoiding the truth.

"Historically overpriced"? What is that supposed to mean?

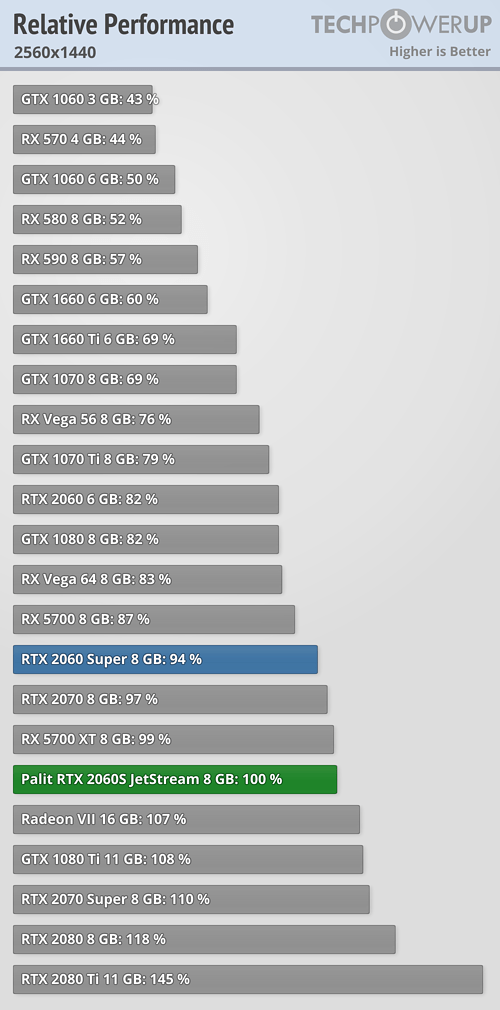

2070 Super gives you 1080Ti performance for about $200 less.

2060 Super gives you above 1080 performance for about $100 less.

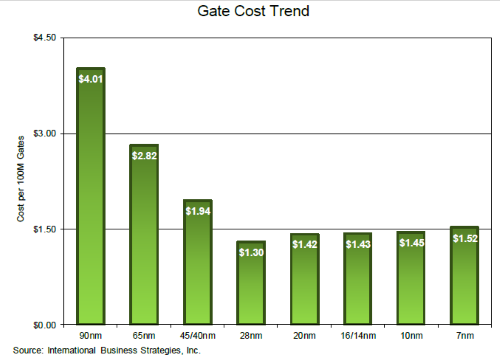

If you are referring to the big gains back in the days when process improvements gave significant increases in transistor/$, then sadly those days are gone.

The issue for a lot of us is that we already have 1080 Ti performance, and everything below $998 is not really an upgrade. The 2080 Ti, at least in my case, is a laughable card at the going rate. If there were some decent $999 ones (not the EVGA Black) but with great coolers etc., that would at least give an option for significantly better performance. Just have to see what the 2080 Super brings to the table, but if it lands between a 2080 and a 2080 Ti, why bother if one already has a 1080 Ti? This whole generation has been meh to the max.

The days of buying the top-end part and upgrading every generation with big gains are GONE forever.

The unfortunate reality of the slowdown in process technology is going to hit even harder at the top, as huge chips get hit much harder by yield issues.

The 2080 Ti is crazy expensive because the die is ridiculously large. It's the largest consumer GPU chip EVER produced.

Also, 12nm FFN is more a marketing name than reality. It's really 16nm+. The transistor density is the same as 16nm (~25 million/mm2), so there is really zero progress on process density since Pascal. Maybe slight power improvements, but really not much change; this is just a slight refinement of 16nm. All the performance improvements of Turing come from much bigger, more expensive dies.

I might be biased, but if Intel or Nvidia make a profit it is evil. When AMD makes a profit it's for the good of humankind.

At least that's the gist I get when surfing the web.

Bingo.

So why didn't Nvidia just use 16nm if there is very little benefit? How stupid they must be to pay extra for nothing.

TSMC 12nm is rated for 33.8 MTr/mm^2 vs. 28.2 MTr/mm^2 on the 16nm node. Transistor density is higher for 12nm.

You can check the practical transistor density by dividing transistor count by die size, and it's ~25 million/mm2 for both Pascal and Turing.
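For anyone who wants to run the numbers, here is a rough sketch of that check in Python. The transistor counts and die sizes are the commonly quoted public figures for GP102 and TU102, not numbers from this thread or from TSMC, so treat them as assumptions and swap in your own.

```python
# Practical density = transistor count / die area.
# ASSUMPTION: counts and die sizes below are commonly cited public specs,
# not figures taken from this thread.
CHIPS = {
    "GP102 (Pascal, 16nm)": (11.8e9, 471.0),      # transistors, die area in mm^2
    "TU102 (Turing, 12nm FFN)": (18.6e9, 754.0),
}


def density_mtr_per_mm2(transistors, die_area_mm2):
    """Return achieved density in millions of transistors per mm^2."""
    return transistors / die_area_mm2 / 1e6


for name, (transistors, area) in CHIPS.items():
    print(f"{name}: {density_mtr_per_mm2(transistors, area):.1f} MTr/mm^2")
```

With those inputs both chips come out at roughly 25 MTr/mm^2. A rated node density is generally a best-case figure, so a shipping chip landing below it does not by itself mean either number is wrong.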

Who says it costs more? It's just mature, refined 16nm. Lower cost and higher yield than 7nm. That is why they used it.

Nope… for TSMC, 12nm and 16nm are the same; TSMC even categorizes and markets them together as 16/12nm.

12nm didn't change the density much. The enhanced process was mainly about better yields, reduced leakage, better cost and, more importantly, a better marketing name than, say, 14nm. So yeah, it's cheaper for Nvidia to use 12nm, but basically TSMC 12nm and 16nm are the same.

The 2080 Ti, at least in my case is a laughable card at the going rate. If there were some decent $999 ones (not the EVGA Black)

It is a great card. Keep missing out due to your incredible bias and poor information sources.

It is a great card. Keep missing out due to your incredible bias and poor information sources.

I am biased because I don't buy one? Lol, nope, it's just plainly not worth the money. No reason to pay $1200 for a decent version to get a 30% increase in real gaming performance. If you're happy, great, more power to you, game on. Now if I were on a pre-Pascal generation card, the 2070 Super would be my choice, unless AMD pulls a cat out of the hat.

$1200

The EVGA Black is $999. Get your facts straight.

The evga black is $999. Get your facts straight.

The non-A chip and the worst cooler of all the 2080 Tis? No thanks. Feedback is poor, return rates are poor; at least EVGA kicks ass on that. Of course some had great success with them, even finding A chips on board. I just don't like the thought of getting the worst-binned chips as a good deal. If the 2080 Ti FE were $999, or something of similar quality, that would be something to think about. Also, if Nvidia has a 2080 Ti Super with a significant performance increase, that might make the higher price digestible. Once again, I did look into the 2080 Ti Black; it's just not worth it to me at this time.

So TSMC lied about their density increase, right? Just so Nvidia will pay more, since they are so stupid. Yes, it is a half node, but increased density is one reason, and reduced power/heat is another. Whether Nvidia takes advantage of it, or spreads out the parts of the chip that generate more heat to get higher clock speeds, is another thing. Bottom line: the specs say otherwise on density, unless you two champs just know much more than TSMC.

They're good cards for the money. Barely faster than a 1080Ti for $500 isn't a bad deal. AMD would be smart to drop the price on Radeon VII to $500 and rethink their pricing strategy on the new NAVI cards coming out.

I think I'll wait for 7nm ☺

I think I'll wait for the 2080Ti Ultra Mega FTWWTFBBQ Super Sayan OC Extreme Shroud Edition

Hotter. No RTX. Slightly slower. Only a 90-day warranty instead of 3 years. I'd have to recommend anyone from here on out skips the 1080 Ti, since the 2070 Super is here.

Not buying into Pascal now is solid advice.