
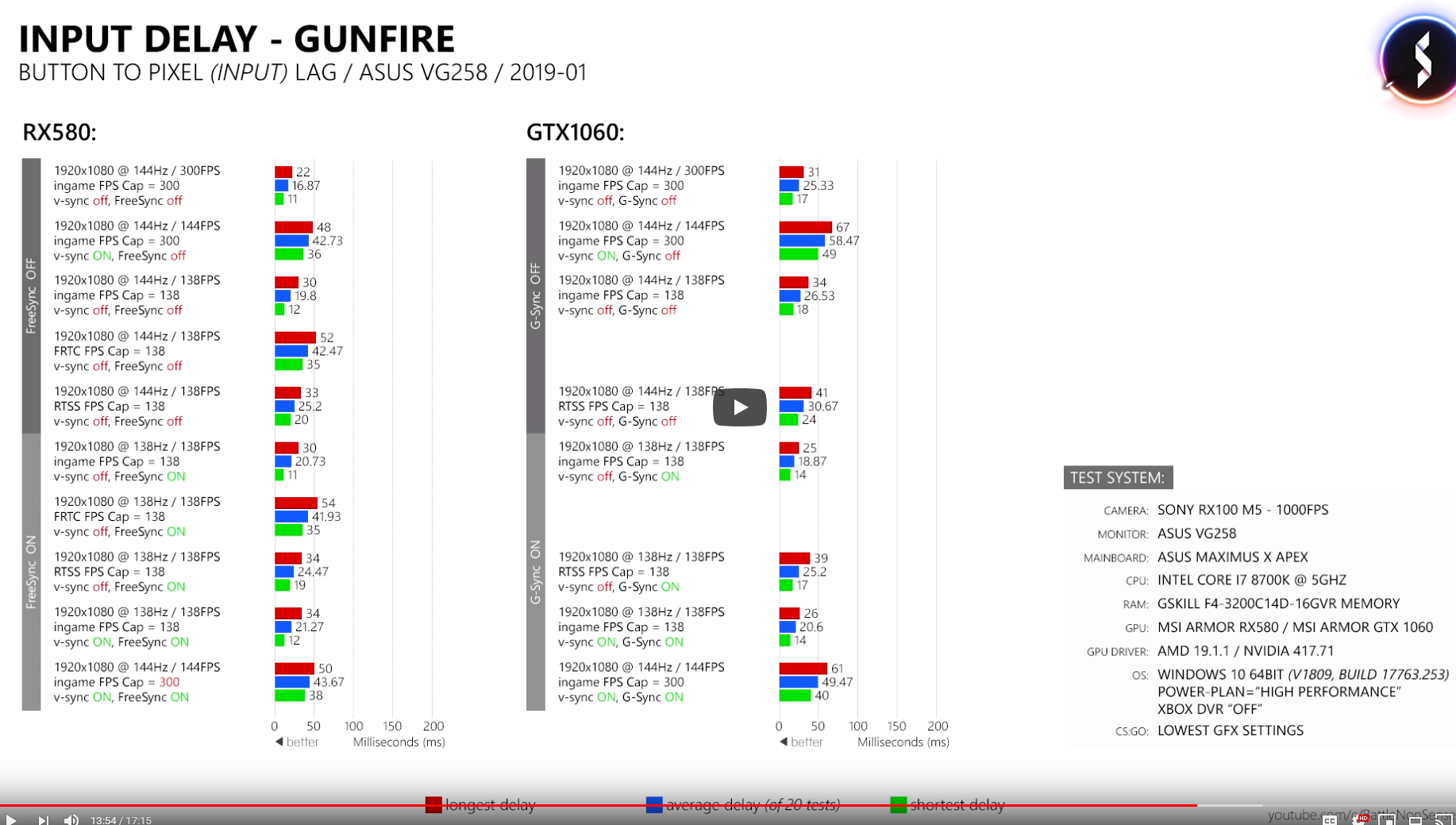

nVidia has significantly more input lag than AMD

- Thread starter Lepardi


IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

Why isn't there a bigger fuss about this?

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

Furious_Styles

Supreme [H]ardness

- Joined: Jan 16, 2013

- Messages: 4,534

I'm in the G-Sync on, V-Sync on, in-game FPS cap category, and things look good for both FreeSync and G-Sync.

I'm in the G-Sync on, V-Sync on, in-game FPS cap category, and things look good for both FreeSync and G-Sync.

The input lag increase is in fixed-refresh-rate mode. Reproduced reliably with different 1000-series cards, fresh Win 10 installs...

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

As said above; also, the video explains the testing process. I don't see any reason to believe there is no input lag increase here, potentially affecting every monitor out there in fixed-refresh-rate mode.


Pieter3dnow

Supreme [H]ardness

- Joined: Jul 29, 2009

- Messages: 6,784

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

Classic whataboutism: turn it around when you don't care for what people find when Nvidia hardware/software is called into question.

N4CR

Supreme [H]ardness

- Joined: Oct 17, 2011

- Messages: 4,947

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

I saw similar results from other tests a few months ago, and it's true: the 'perfect' G-Sync isn't so perfect when input lag is taken into account in some frame-rate regimes and resolutions. In other words, when all else is equal, FreeSync has a slight edge there. Nothing to be salty about.

IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

As said above; also, the video explains the testing process. I don't see any reason to believe there is no input lag increase here, potentially affecting every monitor out there in fixed-refresh-rate mode.

So you're not questioning the results.

Classic whataboutism: turn it around when you don't care for what people find when Nvidia hardware/software is called into question.

I'd recommend looking up whataboutism. It isn't asking why someone would post results from one source as fact.

I saw similar results from other tests a few months ago, and it's true

Then post them?

If it's clearly 'true', then it should be easy to prove. Further, given how obsessed eSports'ers are with response time, there should be some corroboration available from that community as well. Do they all run AMD for lower response time?

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004

- Messages: 6,552

Why isn't there a bigger fuss about this?

Practically for competitive gaming, AMD is the only meaningful solution currently.

If you're running frame rates that high to begin with, any form of sync is largely worthless and should be turned off anyway.

G-Sync was never made for high-frame-rate competitive gaming. Where it shines is in the sub-60 FPS range in demanding titles, where you would want V-Sync to eliminate tearing but can't consistently stay above 60 FPS. This is why you use G-Sync, and where it shines. In this type of scenario you largely aren't worried about a marginal increase in frame times, as having a tear-free experience at sub-optimal frame rates is the key.

I'm not really sure what we're supposed to take away from this video, as it's testing a scenario that is largely irrelevant. To truly show which 'sync' method is better, we'd need to see a test in the sub-60 FPS range, and also compare whether a full G-Sync display with the actual G-Sync FPGA gives the user a 'smoother' experience at sub-optimal FPS. This high-FPS test, as I mentioned, is a scenario that really doesn't make sense. If you can push 120+ FPS without a problem, you'll just turn this crap off.


IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

G-Sync was never made for high-frame-rate competitive gaming. Where it shines is in the sub-60 FPS range in demanding titles, where you would want V-Sync to eliminate tearing but can't consistently stay above 60 FPS. This is why you use G-Sync, and where it shines. In this type of scenario you largely aren't worried about a marginal increase in input lag, as having a tear-free experience at sub-optimal frame rates is the key.

The point behind VRR, which G-Sync does best (still, come on AMD), is to eliminate tearing without incurring the input lag and uneven frame delivery of V-Sync. Nvidia has been pretty clear on the lower-latency angle.
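To make the VRR vs. V-Sync point concrete, here is a toy Python model. It is an assumption-laden sketch, not how either vendor's driver or display scaler actually works: frames finish on a steady cadence, a fixed-refresh display with V-Sync shows each one at the next free vblank, and an idealized VRR display refreshes the moment a frame is ready as long as the frame rate is inside the panel's range. The function names and the 48 to 144 Hz range used below are hypothetical.

```python
import math

def vsync_schedule(frame_interval_ms, refresh_hz, n_frames):
    """Toy model of fixed refresh + V-Sync.

    Assumes frames finish every frame_interval_ms, are never dropped, and each
    one is shown at the first free vblank at or after it finishes. Scanout time,
    render queues and driver behaviour are ignored. Returns (wait_ms, hold_ms):
    how long each frame waited to be shown, and how long each stayed on screen.
    """
    period = 1000.0 / refresh_hz
    ready = [i * frame_interval_ms for i in range(1, n_frames + 1)]
    vblanks, last = [], -1
    for t in ready:
        # small tolerance so float error doesn't push an exact vblank to the next one;
        # at most one new frame per vblank
        v = max(math.ceil(t / period - 1e-9), last + 1)
        vblanks.append(v)
        last = v
    shown = [v * period for v in vblanks]
    wait = [s - r for r, s in zip(ready, shown)]
    hold = [b - a for a, b in zip(shown, shown[1:])]
    return wait, hold

def vrr_schedule(frame_interval_ms, min_hz, max_hz, n_frames):
    """Idealized VRR: refresh as soon as a frame is ready, if inside the panel's range."""
    if not 1000.0 / max_hz <= frame_interval_ms <= 1000.0 / min_hz:
        raise ValueError("frame interval outside the VRR range (not modelled here)")
    return [0.0] * n_frames, [frame_interval_ms] * (n_frames - 1)

if __name__ == "__main__":
    # a steady 50 FPS game (20 ms per frame) on a 60 Hz fixed-refresh panel vs. VRR
    wait, hold = vsync_schedule(20.0, 60, 7)
    print("V-Sync wait ms:", [round(w, 2) for w in wait])
    print("V-Sync hold ms:", [round(h, 2) for h in hold])
    print("VRR    hold ms:", vrr_schedule(20.0, 48, 144, 7)[1])
```

In this model the 50 FPS stream mostly gets 16.67 ms holds with an occasional 33.33 ms one (the uneven delivery), and the wait before each frame appears drifts between 0 and about 13 ms (the added lag), whereas the idealized VRR case shows every frame for 20 ms with no wait.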

If you're running frame rates that high to begin with, any form of sync is largely worthless and should be turned off anyway.

G-Sync was never made for high-frame-rate competitive gaming. Where it shines is in the sub-60 FPS range in demanding titles, where you would want V-Sync to eliminate tearing but can't consistently stay above 60 FPS. This is why you use G-Sync, and where it shines. In this type of scenario you largely aren't worried about a marginal increase in frame times, as having a tear-free experience at sub-optimal frame rates is the key.

I'm not really sure what we're supposed to take away from this video, as it's testing a scenario that is largely irrelevant. To truly show which 'sync' method is better, we'd need to see a test in the sub-60 FPS range, and also compare whether a full G-Sync display with the actual G-Sync FPGA gives the user a 'smoother' experience at sub-optimal FPS. This high-FPS test, as I mentioned, is a scenario that really doesn't make sense. If you can push 120+ FPS without a problem, you'll just turn this crap off.

Clearly you didn't even watch the video results. The input lag difference is biggest in your high-frame-rate scenario without sync. In the 138 FPS-limited non-synced scenario, the average difference is 6.7 ms; at 300 FPS non-synced, it's about 9 ms.

With sync activated, NVIDIA is directly comparable to AMD, and lag is lower than without sync. The issue is when sync is not activated.

I also disagree that sub-60 FPS is where G-Sync is best. Textures are all blurry, input lag is very much there, and 60 FPS doesn't even look smooth. ~140 FPS on a 144 Hz monitor gives you a smooth, lag-free image without visual errors. It's at the 300 FPS mark that I would turn G-Sync off, for the lower input lag and the short-duration tearing that such high frame rates offer.
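The two averages quoted above (6.7 ms with a 138 FPS cap, about 9 ms at 300 FPS) are easier to weigh when expressed in frames. A minimal conversion sketch; it only rescales the figures as quoted and says nothing about whether they hold on other hardware:

```python
def frame_time_ms(fps):
    """Time between frames at a given frame rate."""
    return 1000.0 / fps

def delta_in_frames(delta_ms, fps):
    """Express a latency difference as a number of frame times at that frame rate."""
    return delta_ms / frame_time_ms(fps)

if __name__ == "__main__":
    for delta_ms, fps in [(6.7, 138), (9.0, 300)]:
        print(f"{delta_ms} ms at {fps} FPS = {delta_in_frames(delta_ms, fps):.2f} frames "
              f"(frame time {frame_time_ms(fps):.2f} ms)")
```

By this arithmetic the 138 FPS gap is just under one frame, while the 300 FPS gap is roughly 2.7 frames.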


N4CR

Supreme [H]ardness

- Joined: Oct 17, 2011

- Messages: 4,947

Clearly you didn't even watch the video results. The input lag difference is biggest in your high-frame-rate scenario without sync. In the 138 FPS-limited non-synced scenario, the average difference is 6.7 ms; at 300 FPS non-synced, it's about 9 ms.

With sync activated, NVIDIA is directly comparable to AMD, and lag is lower than without sync. The issue is when sync is not activated.

I also disagree that sub-60 FPS is where G-Sync is best. Textures are all blurry, input lag is very much there, and 60 FPS doesn't even look smooth. ~140 FPS on a 144 Hz monitor gives you a smooth, lag-free image without visual errors. It's at the 300 FPS mark that I would turn G-Sync off, for the lower input lag and the short-duration tearing that such high frame rates offer.

This is a pretty well-written summary of it all. It's not lower input lag in every scenario.

This was posted here in January:

https://hardforum.com/threads/freesync-vs-g-sync-compatible-unexpected-input-lag-results.1976258/

https://hardforum.com/threads/frees...ed-input-lag-results.1976258/#post-1044055796

This post has the chart attached here.

IdiotInCharge, there is another website that also looked into this at the end of last year or the start of this year, with results similar to this video's. I can't find it at the moment, though. One interesting thing is that, at the same refresh rate, input lag with sync on is slightly lower on average on AMD.


Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004

- Messages: 6,552

Clearly you didn't even watch the video results. The input lag difference is biggest in your high-frame-rate scenario without sync. In the 138 FPS-limited non-synced scenario, the average difference is 6.7 ms; at 300 FPS non-synced, it's about 9 ms.

With sync activated, NVIDIA is directly comparable to AMD, and lag is lower than without sync. The issue is when sync is not activated.

I also disagree that sub-60 FPS is where G-Sync is best. Textures are all blurry, input lag is very much there, and 60 FPS doesn't even look smooth. ~140 FPS on a 144 Hz monitor gives you a smooth, lag-free image without visual errors. It's at the 300 FPS mark that I would turn G-Sync off, for the lower input lag and the short-duration tearing that such high frame rates offer.

You aren't hitting 140 FPS, or even anywhere near 100 FPS, at 4K. I've used G-Sync on my X27 specifically because in any modern game like AC Odyssey, even with a 2080 Ti, you are still below 60 FPS, and if you aren't running G-Sync you either deal with tearing or deal with V-Sync stutter.

You aren't hitting 140 FPS, or even anywhere near 100 FPS, at 4K. I've used G-Sync on my X27 specifically because in any modern game like AC Odyssey, even with a 2080 Ti, you are still below 60 FPS, and if you aren't running G-Sync you either deal with tearing or deal with V-Sync stutter.

That's why you forget about 4K until the mid-2020s and go for 1440p until then.

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

Also, the comparison is between each vendor's GPU on a single FreeSync/G-Sync Compatible monitor. Without testing any other G-Sync Compatible monitor, a sample size of 1 is meaningless for drawing any conclusions.
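The sample-size objection can be made concrete: with one GPU per vendor on one monitor, each comparison is a single number, and a single number has no spread to estimate. A small Python sketch, assuming each independent GPU/driver/monitor combination contributes one Nvidia-minus-AMD latency difference (the function name is hypothetical):

```python
import math
import statistics

def mean_and_stderr(deltas_ms):
    """Mean Nvidia-minus-AMD latency difference across independent configurations,
    plus its standard error. Each entry is one GPU/driver/monitor combination."""
    if len(deltas_ms) < 2:
        # statistics.stdev needs at least two data points; with one there is
        # no way to tell a real effect from configuration-to-configuration noise
        raise ValueError("need at least two independent configurations")
    mean = statistics.fmean(deltas_ms)
    stderr = statistics.stdev(deltas_ms) / math.sqrt(len(deltas_ms))
    return mean, stderr
```

Calling it with a one-element list raises, which is the point: a single-monitor comparison yields a mean but no error bar.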

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004

- Messages: 6,552

That's why you forget about 4K until the mid-2020s and go for 1440p until then.

Or I just run 4K at around 60 FPS with HDR and I'm happy anyway.

120+ FPS for a game like Batman or AC Odyssey doesn't matter as much. It's just a third-person adventure game.

If I'm playing a twitch shooter, I just turn down settings (if I even need to) and I'm hitting 120 Hz anyway.

You act as if everyone is playing twitchy first person shooters constantly.

Anyway, I didn't know G-Sync removed some input lag; I'll start frame-capping games more often as needed.
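For anyone wondering what a frame cap does mechanically, the basic idea is to hold off starting the next frame until a fixed time budget has passed. A minimal Python sketch with hypothetical names; real limiters in games and drivers are usually more precise than a plain `time.sleep`:

```python
import time

def run_capped(render_frame, fps_cap, n_frames):
    """Call render_frame() n_frames times, never faster than fps_cap.

    After each frame, sleep until that frame's deadline. If a frame overruns,
    the schedule is reset instead of rushing the next frames to catch up.
    """
    frame_budget = 1.0 / fps_cap
    deadline = time.perf_counter()
    for _ in range(n_frames):
        render_frame()
        deadline += frame_budget
        remaining = deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)
        else:
            deadline = time.perf_counter()
```

Resetting the deadline after an overrun is a design choice: it avoids a burst of uncapped frames right after a slow one.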

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004

- Messages: 6,552

Also, the comparison is between each vendor's GPU on a single FreeSync/G-Sync Compatible monitor. Without testing any other G-Sync Compatible monitor, a sample size of 1 is meaningless for drawing any conclusions.

I'd like to see a test on two panels that are known to be exactly the same except that one model has the G-Sync FPGA, and see what the numbers are. I always figured the G-Sync FPGA would just add some input lag to help out with smoothness, but I guess I'm wrong.

Sorry, I didn't watch the whole video, just that chart of one specific AMD card vs one specific Nvidia card, with one driver version for each and one monitor. Is that it, for some to reach grand sweeping conclusions across the board? Where are the stats showing this 'consistency' across several different cards, driver versions and monitors? So the complaint is that there's no "big fuss", i.e., that the limited data used hasn't been trumpeted around the tech media as the all-conclusive 'big proof' of what this test has 'established'? I don't know, but I have a feeling a massive face-palm is in order.

Pieter3dnow

Supreme [H]ardness

- Joined: Jul 29, 2009

- Messages: 6,784

This is what you said:

So you're not questioning the results.

I'd recommend looking up whataboutism. It isn't asking why someone would post results from one source as fact.

Then post them?

If it's clearly 'true', then it should be easy to prove. Further, given how obsessed eSports'ers are with response time, there should be some corroboration available from that community as well. Do they all run AMD for lower response time?

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

Yeah, because making bold claims is your job. He believes the results because he probably watched the video.

Dear god, AMD is better than Nvidia and the world as you know it is collapsing...

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004

- Messages: 6,552

This is what you said:

Yeah, because making bold claims is your job. He believes the results because he probably watched the video.

Dear god, AMD is better than Nvidia and the world as you know it is collapsing...

IMO, I would get an AMD GPU if they actually offered something at least on par with what Nvidia was offering. The issue is that AMD's best is still only somewhat on par with a 1080 Ti/2080, and for 4K gaming even those aren't enough.

If I were still at 1080p, I'd 100% go AMD, though.

IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

Yeah, because making bold claims is your job. He believes the results because he probably watched the video.

Do you not see the other critiques above?

Are you blind?

Dear god, AMD is better than Nvidia and the world as you know it is collapsing...

In this aspect, I'd expect them to be the same. I'm not going to take a single video as proof, or single anything, especially given the complexity of the issue and the implications.

The OP asks, "why doesn't this get more coverage?", on the assumption that the information presented is universally true. Applying just a hint of critical thinking leads to the conclusion that if it were universally true, there would definitely be more coverage.

So if the OP is true, we should be able to find more proof.

5150Joker

Supreme [H]ardness

- Joined: Aug 1, 2005

- Messages: 4,568

Why isn't there a bigger fuss about this?

Practically for competitive gaming, AMD is the only meaningful solution currently.

This is limited to one monitor that is freesync. You can't make such a ridiculous sweeping judgment based on that.

Pieter3dnow

Supreme [H]ardness

- Joined: Jul 29, 2009

- Messages: 6,784

Do you not see the other critiques above?

Are you blind?

In this aspect, I'd expect them to be the same. I'm not going to take a single video as proof, or single anything, especially given the complexity of the issue and the implications.

The OP asks, "why doesn't this get more coverage?", on the assumption that the information presented is universally true. Applying just a hint of critical thinking leads to the conclusion that if it were universally true, there would definitely be more coverage.

So if the OP is true, we should be able to find more proof.

You can question anything you want, especially when you don't watch the linked material; that seems to work well for you and some others.

So I can question anything you write without reading any of it. It's a shitty principle, but hey, it works for quite a few people on this forum. Just ask for a second source; that is seriously not whataboutism, because you did not say "what about a second source that can verify this", see?

I think this topic is pretty trivial; there is nothing stopping Nvidia from solving this problem.

This is limited to one monitor that is freesync. You can't make such a ridiculous sweeping judgment based on that.

Especially since the same YouTuber tested FreeSync vs G-Sync and found G-Sync is faster, but not by much, and of course with only one monitor for each solution, so a very small sample size.

This is limited to one monitor that is freesync. You can't make such a ridiculous sweeping judgment based on that.

One monitor, one driver, one GPU for each side. As we all know, drivers are never improved or updated. And monitors all display the same lag characteristics according to the GPUs used, no variables whatsoever. And RTX lag = Pascal lag = Maxwell lag and Polaris lag = Vega lag = Navi lag, no variables whatsoever.

TheFlayedMan

Limp Gawd

- Joined: May 29, 2015

- Messages: 410

Clearly you didn't even watch the video results. The input lag difference is biggest in your high-frame-rate scenario without sync. In the 138 FPS-limited non-synced scenario, the average difference is 6.7 ms; at 300 FPS non-synced, it's about 9 ms.

How many people can react to visual stimuli in less than 9 ms?

How many people can react to visual stimuli in less than 9 ms?

None, but there is some effect on how the controls feel at those 9 ms. You can feel the mouse reacting more sharply when you go from basic 8 ms USB polling to 1 ms USB polling. And given equal opponents on equal ground, those 9 ms would mean that the AMD user wins.
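The "8 ms vs 1 ms USB" comparison presumably refers to the mouse polling interval (125 Hz vs 1000 Hz). A rough sketch of how much delay polling alone adds, assuming input events arrive at uniformly random moments between polls and ignoring the mouse's own processing (the function name is hypothetical):

```python
def polling_delay_ms(rate_hz):
    """(worst-case, average) wait before the host reads an input event,
    assuming events occur at uniformly random times between polls."""
    interval = 1000.0 / rate_hz
    return interval, interval / 2

if __name__ == "__main__":
    for rate in (125, 1000):
        worst, avg = polling_delay_ms(rate)
        print(f"{rate} Hz polling: worst {worst:.1f} ms, average {avg:.1f} ms")
```

In this model, going from 125 Hz to 1000 Hz polling saves about 3.5 ms on average, the same order of magnitude as the gaps being argued about here.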

... And given equal opponents on equal ground, those 9 ms would mean that the AMD user wins.

No. The RX 580 + 19.1.1 driver + Asus VG258 monitor shows slightly better results than the GTX 1060 + 417.71 driver + Asus VG258 monitor. That is all. Is there a test with the 430.86 driver + another monitor + another GPU with different specs (e.g., GDDR6) that precisely reproduces the same results? Or a Vega 64 with a different driver + monitor that also does the same? You are using a very limited data set with zero other variables to reach far greater conclusions than that limited evidence can possibly show.

N4CR

Supreme [H]ardness

- Joined: Oct 17, 2011

- Messages: 4,947

So if the OP is true, we should be able to find more proof.

I think most people just assumed G-Sync is better without actually bothering to look. It's better in most metrics, but this is definitely an interesting one that needs further investigation. I'm going to keep digging and will post if I find other data.

You aren't hitting 140 FPS, or even anywhere near 100 FPS, at 4K. I've used G-Sync on my X27 specifically because in any modern game like AC Odyssey, even with a 2080 Ti, you are still below 60 FPS, and if you aren't running G-Sync you either deal with tearing or deal with V-Sync stutter.

It depends on what you play, though. I moved to 4K in 2013 on an R9 290 and was still CPU-limited. I am definitely ready for 4K144 for the older games I play. Sure, there are many games where I have to lower settings to maintain 60 FPS at 4K. I think the bigger deal with 4K144 is waiting for HDMI 2.1...
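The HDMI 2.1 point comes down to bandwidth. A back-of-the-envelope Python sketch of the raw pixel data rate, counting only visible pixels; real video timings add blanking on top, and compression or chroma subsampling are ignored. The link figures are the nominal maximum rates (18 Gbit/s for HDMI 2.0, 48 Gbit/s for HDMI 2.1) reduced by their 8b/10b and 16b/18b line coding, so treat them as approximations:

```python
def active_video_gbps(width, height, refresh_hz, bits_per_pixel):
    """Uncompressed data rate of the visible pixels only, in Gbit/s (no blanking)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

# Approximate usable payload after line coding (nominal rates; an assumption)
HDMI_2_0_GBPS = 18.0 * 8 / 10     # TMDS, 8b/10b  -> 14.4
HDMI_2_1_GBPS = 48.0 * 16 / 18    # FRL, 16b/18b  -> ~42.7

if __name__ == "__main__":
    for hz in (60, 144):
        rate = active_video_gbps(3840, 2160, hz, 24)  # 8 bits per RGB channel
        print(f"4K {hz} Hz, 8-bit RGB: {rate:.1f} Gbit/s "
              f"(HDMI 2.0 ~{HDMI_2_0_GBPS:.1f}, HDMI 2.1 ~{HDMI_2_1_GBPS:.1f})")
```

Even before blanking, 4K at 144 Hz needs roughly twice what HDMI 2.0 can carry, while 4K at 60 Hz fits.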

I am quite impressed with the input lag on G-Sync overall. I always run G-Sync + V-Sync off, and the lack of tearing is something I take for granted now.

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004

- Messages: 6,552

It depends on what you play, though. I moved to 4K in 2013 on an R9 290 and was still CPU-limited. I am definitely ready for 4K144 for the older games I play. Sure, there are many games where I have to lower settings to maintain 60 FPS at 4K. I think the bigger deal with 4K144 is waiting for HDMI 2.1...

I am quite impressed with the input lag on G-Sync overall. I always run G-Sync + V-Sync off, and the lack of tearing is something I take for granted now.

According to this test, and from everything I've heard, you want to have V-Sync on while using G-Sync. It's generally best to keep it forced 'On' in the NVCP.

Dayaks

[H]F Junkie

- Joined: Feb 22, 2012

- Messages: 9,774

You guys ever try this test?

https://www.humanbenchmark.com/tests/reactiontime

Because for me... if we’re talking in the tens of milliseconds for response time it’s negligible lol.


IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

Just ask for a second source; that is seriously not whataboutism, because you did not say "what about a second source that can verify this"

What I asked was of the OP: why they believed that the results were fact, and universally so as per their thread title, with a single source.

IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

According to this test, and from everything I've heard, you want to have V-Sync on while using G-Sync. It's generally best to keep it forced 'On' in the NVCP.

To add to the confusion: you want G-Sync and V-Sync on in the Nvidia Control Panel globally, and V-Sync off in games.

It's annoying to have to specify that, and to make sure it's set, but that's more or less what must be done for the best experience with VRR. Beyond that, you typically want to limit frame rates to a few frames below your maximum refresh rate. So at 144 Hz, you'd want to limit the frame rate to, say, 141 FPS.
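The reason for capping a few FPS below the refresh rate, rather than exactly at it, is headroom: a capped frame time needs to stay at or above the panel's shortest refresh period, or frames can arrive faster than the panel can refresh and VRR no longer applies. A small sketch of that arithmetic; the function names are hypothetical, and the 144 to 141 example is taken from the post rather than being a rule:

```python
def frame_time_ms(fps):
    return 1000.0 / fps

def cap_headroom_ms(fps_cap, max_refresh_hz):
    """How much longer a capped frame takes than the panel's fastest refresh.
    Positive headroom absorbs frame-to-frame jitter in the limiter."""
    return frame_time_ms(fps_cap) - frame_time_ms(max_refresh_hz)

if __name__ == "__main__":
    for cap in (144, 141, 138):
        print(f"{cap} FPS cap on 144 Hz: {cap_headroom_ms(cap, 144):.3f} ms headroom")
```

A 141 FPS cap leaves only about 0.15 ms of slack per frame, which is why the cap sits a few frames under the maximum rather than right at it.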

IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

You guys ever try this test?

https://www.humanbenchmark.com/tests/reactiontime

Because for me... if we’re talking in the tens of milliseconds for response time it’s negligible lol.

I will say that there's a difference in feeling, since we don't really have a better word, regardless of an individual's response time to various stimuli. Obviously the importance differs on a per-game basis, but generally speaking higher consistent framerates (lower frametimes) feel more responsive.
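On "higher consistent framerates (lower frametimes)": an average FPS number can hide inconsistency. A small Python sketch, with a hypothetical helper name and made-up illustrative frame times, that reports frame-time spread alongside the average:

```python
import statistics

def frametime_summary(frametimes_ms):
    """Average FPS plus how uneven the frame times are."""
    mean_ms = statistics.fmean(frametimes_ms)
    return {
        "avg_fps": 1000.0 / mean_ms,
        "stdev_ms": statistics.pstdev(frametimes_ms),
        "worst_ms": max(frametimes_ms),
    }

if __name__ == "__main__":
    steady = [10.0, 10.0, 10.0, 10.0]   # illustrative values only
    uneven = [5.0, 15.0, 5.0, 15.0]     # illustrative values only
    print(frametime_summary(steady))
    print(frametime_summary(uneven))
```

Both sequences average 100 FPS, but the second alternates between 5 ms and 15 ms frames, the kind of unevenness an average hides.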

And given equal opponents on equal ground, those 9 ms would mean that the AMD user wins.

Here's the hard part: maybe. This will also depend on the level of competition and the level both competitors are at. Now, assuming everything is equal across the board, that delta could easily be a rounding error, i.e., too small to make a difference, even though it can be measured. To prove that it matters, you'd have to show a real difference in effect.
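One way to put a number on "a real difference in effect" is a toy model: two otherwise identical players whose reaction times vary from attempt to attempt as independent normal variables with the same spread, one of whom has some milliseconds less system latency. The lower-latency player wins a given duel when their reaction plus lag is smaller. The normal distribution and any spread value you plug in are assumptions for illustration, not measurements:

```python
import math
from statistics import NormalDist

def p_lower_latency_wins(latency_delta_ms, reaction_sd_ms):
    """Probability that the player with latency_delta_ms less lag reacts first.

    Model: reaction times R_a, R_b ~ Normal(mu, sd), independent. Player A wins
    if R_a < R_b + delta, i.e. R_a - R_b < delta, and R_a - R_b ~ Normal(0, sd*sqrt(2)).
    """
    if reaction_sd_ms <= 0:
        raise ValueError("reaction_sd_ms must be positive")
    return NormalDist(0.0, reaction_sd_ms * math.sqrt(2)).cdf(latency_delta_ms)
```

The answer depends entirely on the assumed spread: the larger the attempt-to-attempt variation in reaction time relative to the lag gap, the closer each duel gets to a coin flip, which is the "rounding error" case described above.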

And what calls all of it into question is the thread title: if you're going to say "nVidia has significantly more input lag than AMD", you need to show that for every Nvidia card, every AMD card, every driver, every operating system, every game, and every set of game configurations.

In a word: impossible.

Now consider what the OP states, and consider the excruciatingly small sample sizes being used to 'prove' that statement. Further, we shouldn't be looking to prove it so much as looking to find out why these results are being generated. Part of that is showing that they're consistent across many configurations, because they may not be. Hell, they probably aren't consistent across many configurations. I'd expect to find configurations where the results are reversed; where both vendors are 'slow'; where both vendors are 'fast'; and so on, and I'd expect on average for both vendors to be about the same.
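What "consistent across many configurations" could mean in practice: collect one latency difference per GPU/driver/monitor/game combination and look at how often the sign flips, not just at one number. A minimal sketch with a hypothetical function name; the 1 ms "about equal" threshold is an arbitrary assumption:

```python
from collections import Counter

def direction_summary(deltas_ms, tolerance_ms=1.0):
    """Count configurations by which vendor had lower latency.

    Each delta is (Nvidia latency - AMD latency) for one configuration.
    Differences within +/- tolerance_ms are treated as a tie.
    """
    counts = Counter()
    for d in deltas_ms:
        if d > tolerance_ms:
            counts["amd_lower"] += 1
        elif d < -tolerance_ms:
            counts["nvidia_lower"] += 1
        else:
            counts["about_equal"] += 1
    return dict(counts)
```

A claim like the thread title would need nearly every configuration to land in one bucket; a mix of all three is what the post above expects.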

Taken a step further, my interest is in determining where there might be latency issues and what can be done to address them. Aside from the faulty title and opening statement, the sample size used to produce the results is wholly inadequate to actually begin approaching a solution.

According to this test, and from everything I've heard, you want to have V-Sync on while using G-Sync. It's generally best to keep it forced 'On' in the NVCP.

Yes: V-Sync on in NVCP, but off in game.

Pieter3dnow

Supreme [H]ardness

- Joined: Jul 29, 2009

- Messages: 6,784

What I asked was of the OP: why they believed that the results were fact, and universally so as per their thread title, with a single source.

And yet this is what you wrote:

You're taking the video and results therein at face value, with an especially bold claim.

You should start by telling us why you're doing that. Why do you believe the results? What do you corroborate them with?

That is not the same as what you wrote.

Now consider what the OP states, and consider the excruciatingly small sample sizes being used to 'prove' that statement. Further, we shouldn't be looking to prove it so much as looking to find out why these results are being generated. Part of that is showing that they're consistent across many configurations, because they may not be. Hell, they probably aren't consistent across many configurations. I'd expect to find configurations where the results are reversed; where both vendors are 'slow'; where both vendors are 'fast'; and so on, and I'd expect on average for both vendors to be about the same.

Ooh, look, other people told you what you could have known if you had watched the video before you "responded". That doesn't change the outcome, though, but it does affect your job of deflecting and not taking things at face value when it comes to Nvidia and their products. So let's mark them all up:

Bold claims (not so much)

Why are the results real (still haven't watched the video; by all means, that is what people should be discussing)

Well, you found one good reason to ignore this result: the sample size. And I would say that, when it comes to technical things, when you test mechanics it would be odd for them to appear in one test with one sample and not in others, if there is no reason to doubt the testing methodology (you know, not trusting websites such as PCPer, which royally fucked up their FreeSync testing when the panel was at fault).

So you're not questioning the results.

I'd recommend looking up whataboutism. It isn't asking why someone would post results from one source as fact.

Then post them?

If it's clearly 'true', then it should be easy to prove. Further, given how obsessed eSports'ers are with response time, there should be some corroboration available from that community as well. Do they all run AMD for lower response time?

Why don't you disprove it, then? All this fancy talk and you can't disprove it.

So many homemade claims about sync here, but nobody is talking about framebuffers.

Hint for anyone: if someone talks about sync without bringing up framebuffers, they don't know what sync is, and you should really take any info with a grain of salt.

I'm going to refrain from this debate, as history has shown that people tend to be closed off to new information anyway and want to stay in denial about how things work.

But side note: I really wish they hadn't called them G-Sync and FreeSync, as people tend to see them as another sync method instead of an extra feature.
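Since framebuffers came up: in a double-buffered setup the GPU renders into a back buffer while the display scans out the front buffer from top to bottom, and the two are swapped (flipped) when a frame is done. With V-Sync the flip waits for the vertical blank; with sync off it happens immediately, so the part of the screen already scanned out shows the old frame and the rest shows the new one. VRR instead starts the next refresh when the flip happens. A toy Python sketch of where the tear line lands, assuming (hypothetically) that scanout takes the whole refresh period and ignoring the blanking interval:

```python
def tear_line(flip_time_ms, refresh_hz, visible_lines):
    """Row at which the image switches to the new frame when the front and back
    buffers are flipped mid-scanout (sync off). Returns None if the flip lands
    on a refresh boundary, where no tear is visible."""
    period = 1000.0 / refresh_hz
    phase = flip_time_ms % period          # time since the current scanout began
    if phase < 1e-9:
        return None
    return int(phase / period * visible_lines)
```

For example, a flip halfway through a 60 Hz scanout of a 1080-line image tears at row 540; a flip exactly on the boundary (what V-Sync waits for) does not tear.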


IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003

- Messages: 14,675

If someone talks about sync without bringing up framebuffers

Obviously there are framebuffers involved. I get that, but maybe not everyone here does. Why not share a bit?
