Pieter3dnow


https://videocardz.com/80956/amd-announces-radeon-pro-vega-ii-duo-a-dual-vega-20-graphics-card

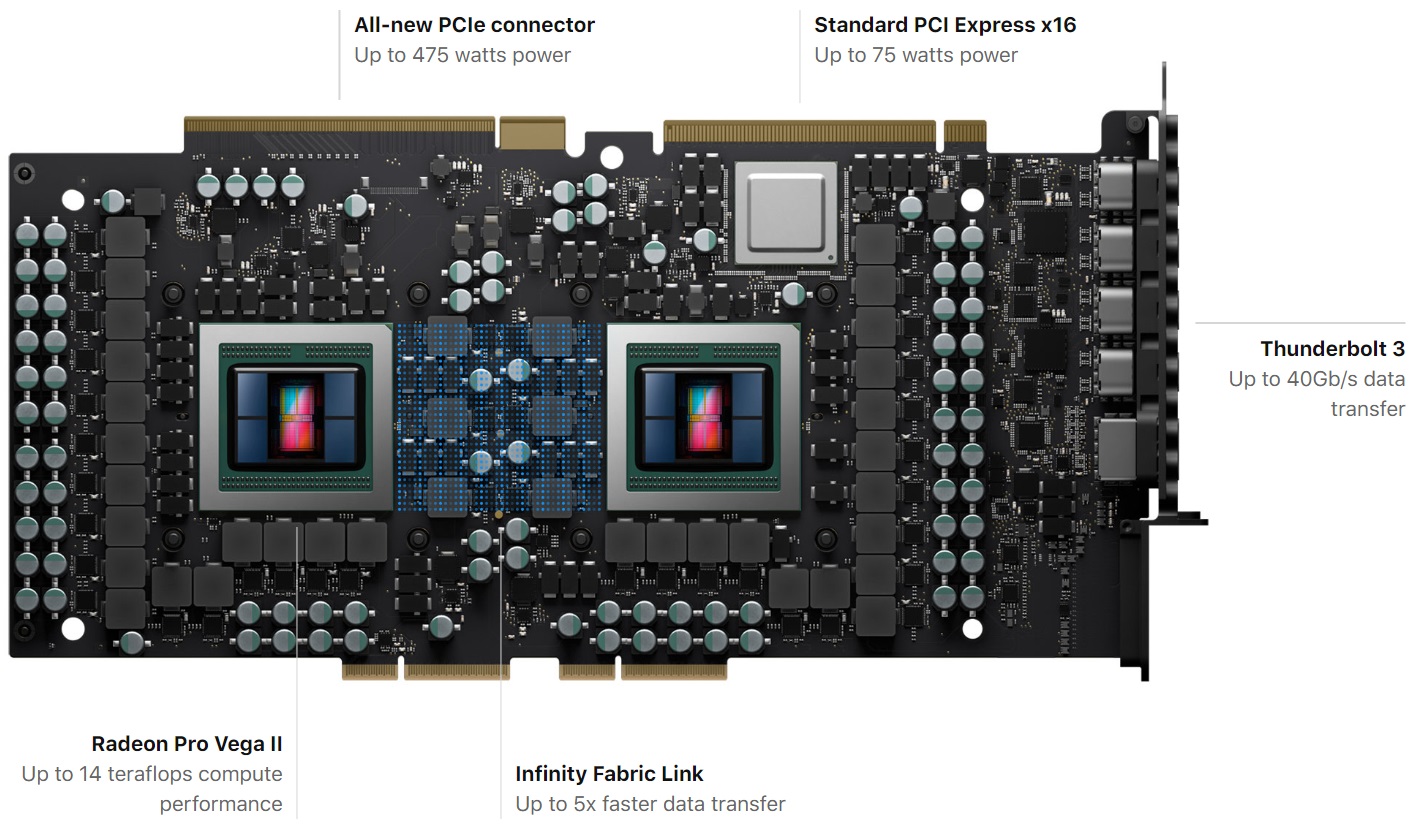

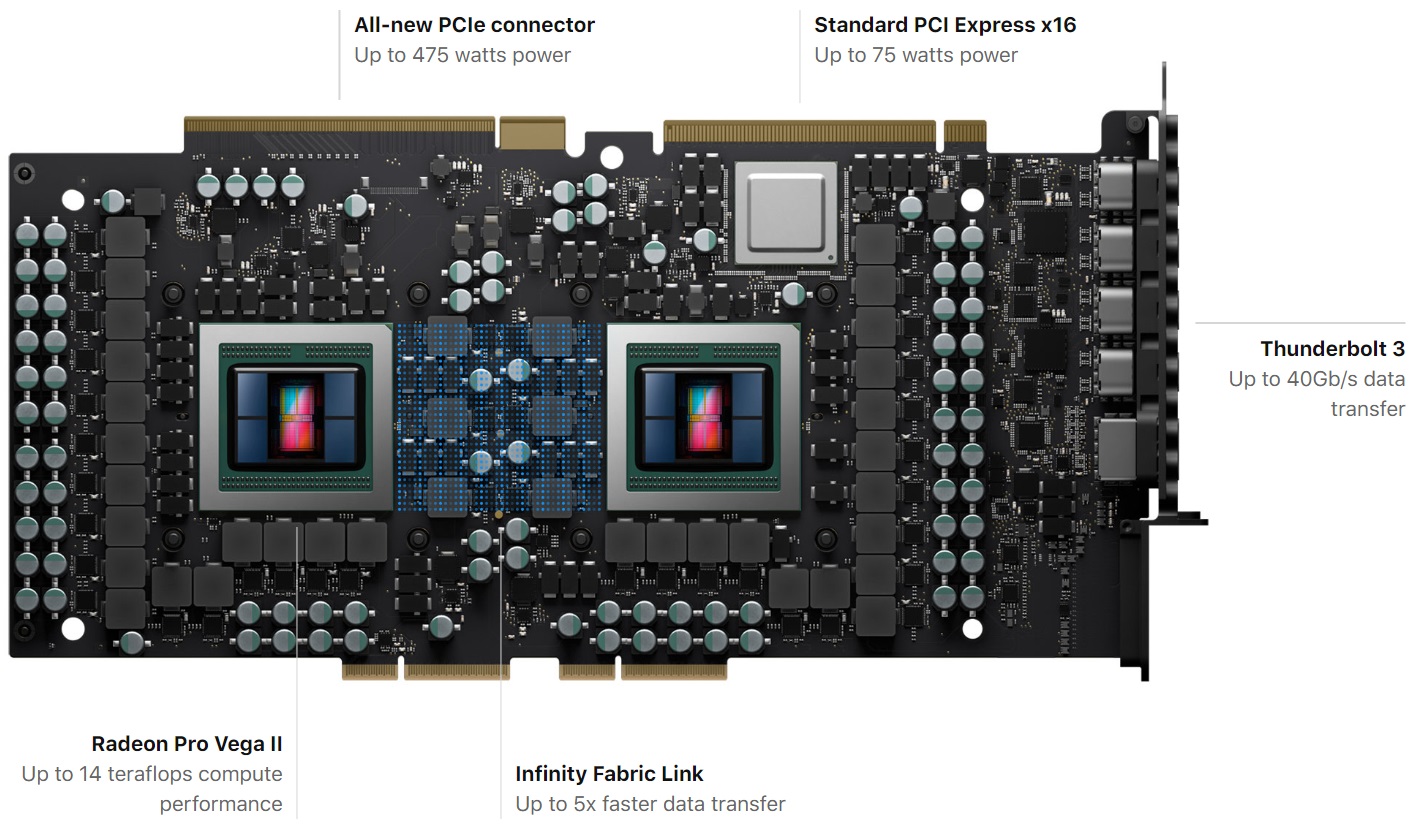

AMD and Apple developed a new PCI-Express connector that supports up to 475 W of power. Alongside the new PCI-e connector, the Radeon Pro Vega II Duo features an Infinity Fabric Link capable of carrying 84 GB/s of data between its two GPUs. More importantly, the Radeon Pro Vega II Duo features an onboard Thunderbolt 3 connector.
