I'm again considering returning this monitor and going 4K for sharper text, which is my primary concern; gaming is secondary. I could run games at 1080p to avoid loading the GPU too heavily.

I think it should be stressed that the choice between 4K 60 Hz and 1440p 144 Hz depends largely on the type of games you play. FPS and other shooters benefit greatly from higher refresh rates and a more "connected" reticle, but RPG and RTS fans will probably appreciate the extra detail of a 4K panel far more than a barely perceptible gain in smoothness.

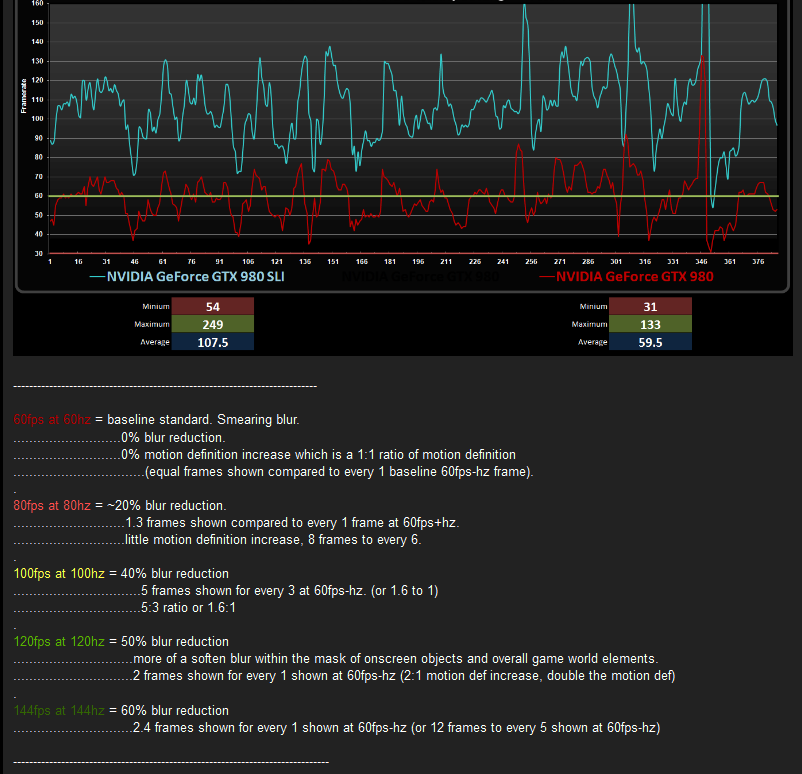

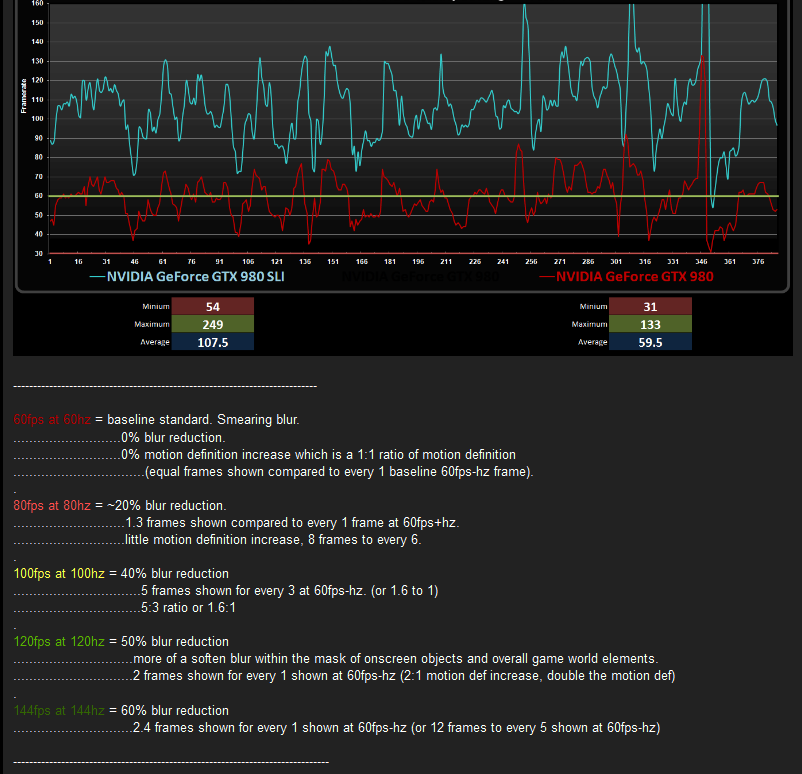

I play both, but I'm happy enough with the bump from 1080p to 1440p. My system rarely uses the extra refresh rate fully anyway, but 100+ fps is nice on the eyes.
