Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

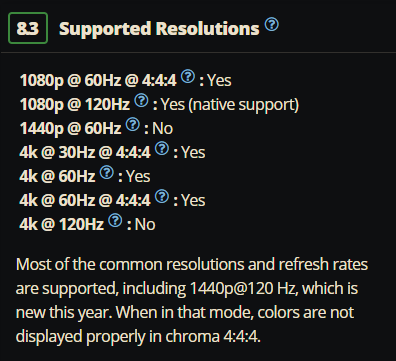

I think you have been misinformed. The main selling point - the 4k120 video mode.

Downscaling to 1080p for full chroma content or 1440p for reduced chroma content is a severe limitation alleviated by HDMI 2.1.

If you wanted to buy something _RIGHT_NOW_, sure, but otherwise it's LG/Alienware all the way. Hopefully the 2019 Samsung flagship comes in with 4k120 + BFI as well.

It does full chroma at 4K and 1440p; there is no need to downscale to 1080p.

1440p mode needs to use RGB Full, not YCbCr. That is the only limitation, and tbh it doesn't matter because full RGB is better.

Colours on this TV are simply fantastic no matter what you use it for.

4K/120Hz can't be pushed with current gfx cards.

By the time gfx cards can do it, they will have HDMI 2.1 and the new series of TVs will be out.
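For a rough sense of why 4K/120Hz is tied to HDMI 2.1, here is a back-of-the-envelope sketch. It assumes 8-bit RGB (full chroma) and counts active pixels only, so blanking intervals are ignored and real link requirements are somewhat higher. The link figures are the usual payload rates after encoding overhead (HDMI 2.0: 18 Gbit/s with 8b/10b; HDMI 2.1: 48 Gbit/s with 16b/18b). The function and mode names are just for illustration.

```python
# Approximate payload rates after line-encoding overhead (Gbit/s).
HDMI_2_0_DATA_GBPS = 18 * 8 / 10    # 14.4
HDMI_2_1_DATA_GBPS = 48 * 16 / 18   # about 42.7


def active_rate_gbps(width, height, hz, bits_per_component=8):
    """Data rate of the visible pixels only, for RGB / 4:4:4 video.

    Assumption: blanking is ignored, so this is a lower bound on what
    the link actually has to carry.
    """
    bits_per_pixel = 3 * bits_per_component
    return width * height * hz * bits_per_pixel / 1e9


# Hypothetical labels for the modes discussed in this thread.
modes = {
    "1080p120": (1920, 1080, 120),
    "1440p120": (2560, 1440, 120),
    "4K60": (3840, 2160, 60),
    "4K120": (3840, 2160, 120),
}

for name, (w, h, hz) in modes.items():
    rate = active_rate_gbps(w, h, hz)
    verdict = "within" if rate <= HDMI_2_0_DATA_GBPS else "over"
    print(f"{name}: {rate:.2f} Gbit/s, {verdict} the HDMI 2.0 payload budget")
```

Even on this optimistic count, full-chroma 4K/120Hz lands well above what HDMI 2.0 can carry, while 1440p/120Hz and 4K/60Hz stay under it.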

If you want a blazingly good TV/monitor now, the Q9 is a great choice.
