pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015
- Messages: 3,096

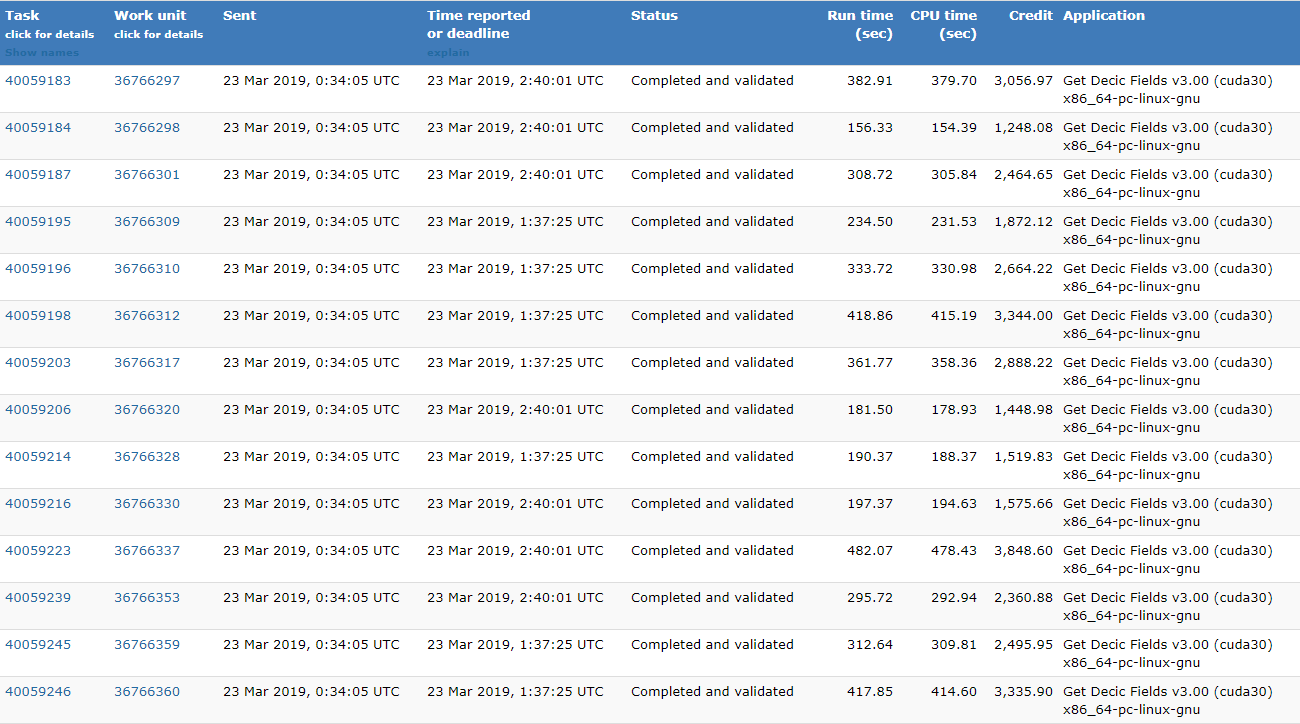

After all these years, we finally have our first GPU app. It's only a beta version for 64-bit Linux with Nvidia GPUs. Support for other platforms and GPUs will be coming soon.

If you'd like to help test this app, you will need to check the "run test applications" box on the project preferences page. I generated a special batch of work for this app from some older WUs for which I already have known-good results (truth). This will help find any remaining bugs.

A few potential issues:

1. This was built with the CUDA SDK version 10.1, so it requires a relatively new Nvidia driver version and only supports compute capability 3.0 and up. If this affects too many users out there, I will need to rebuild with an older SDK.

2. I was not able to build a fully static executable, but I did statically link the libraries most likely to be a problem (i.e. pari, gmp, std c++).
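For anyone unsure whether their card and driver meet the first point, here is a rough Python sketch of the check. It is not part of the NumberFields app or BOINC; the function names are made up for illustration. The compute capability floor of 3.0 comes from the post, while the 418.39 driver floor is an assumption taken from Nvidia's CUDA 10.1 release notes for Linux, so double-check it against your own setup. The driver version is shown in the `nvidia-smi` header, and the compute capability can be looked up in Nvidia's published GPU list.

```python
# Hypothetical helper for illustration only; not project or BOINC code.
MIN_COMPUTE_CAPABILITY = (3, 0)  # stated in the announcement
MIN_LINUX_DRIVER = (418, 39)     # assumption: Linux minimum for CUDA 10.1


def parse_version(text):
    """Return the first two components of a dotted version as a tuple of ints.

    '7.5' -> (7, 5), '460.32.03' -> (460, 32), '3' -> (3, 0)
    """
    parts = [int(p) for p in text.strip().split(".")]
    parts += [0] * (2 - len(parts))  # pad short versions such as '3'
    return tuple(parts[:2])


def can_run_beta(compute_capability, driver_version):
    """True if both the GPU and the driver meet the assumed minimums."""
    cc_ok = parse_version(compute_capability) >= MIN_COMPUTE_CAPABILITY
    driver_ok = parse_version(driver_version) >= MIN_LINUX_DRIVER
    return cc_ok and driver_ok


if __name__ == "__main__":
    # Example values typed in by hand, not read from the hardware.
    print(can_run_beta("7.5", "460.32.03"))
    print(can_run_beta("2.1", "390.157"))
```

The values are passed in as strings so they can be copied straight from `nvidia-smi` or a spec sheet. Only the first two version components are compared, which is enough for both thresholds.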

Please report any problems. I am still relatively new to the whole GPU app process, so I am sure there will be issues of some kind.

Also, feel free to leave comments regarding what platform, GPU, etc. I should concentrate on next. I was thinking I would attack Linux OpenCL (i.e. ATI/AMD) next, as that should be a quick port of what I did with Nvidia. I think the Windows port will take much longer, since I normally use mingw to cross-compile but I don't think that's compatible with the Nvidia compiler.

https://numberfields.asu.edu/NumberFields/forum_thread.php?id=362
