5150Joker

Supreme [H]ardness

- Joined

- Aug 1, 2005

- Messages

- 4,568

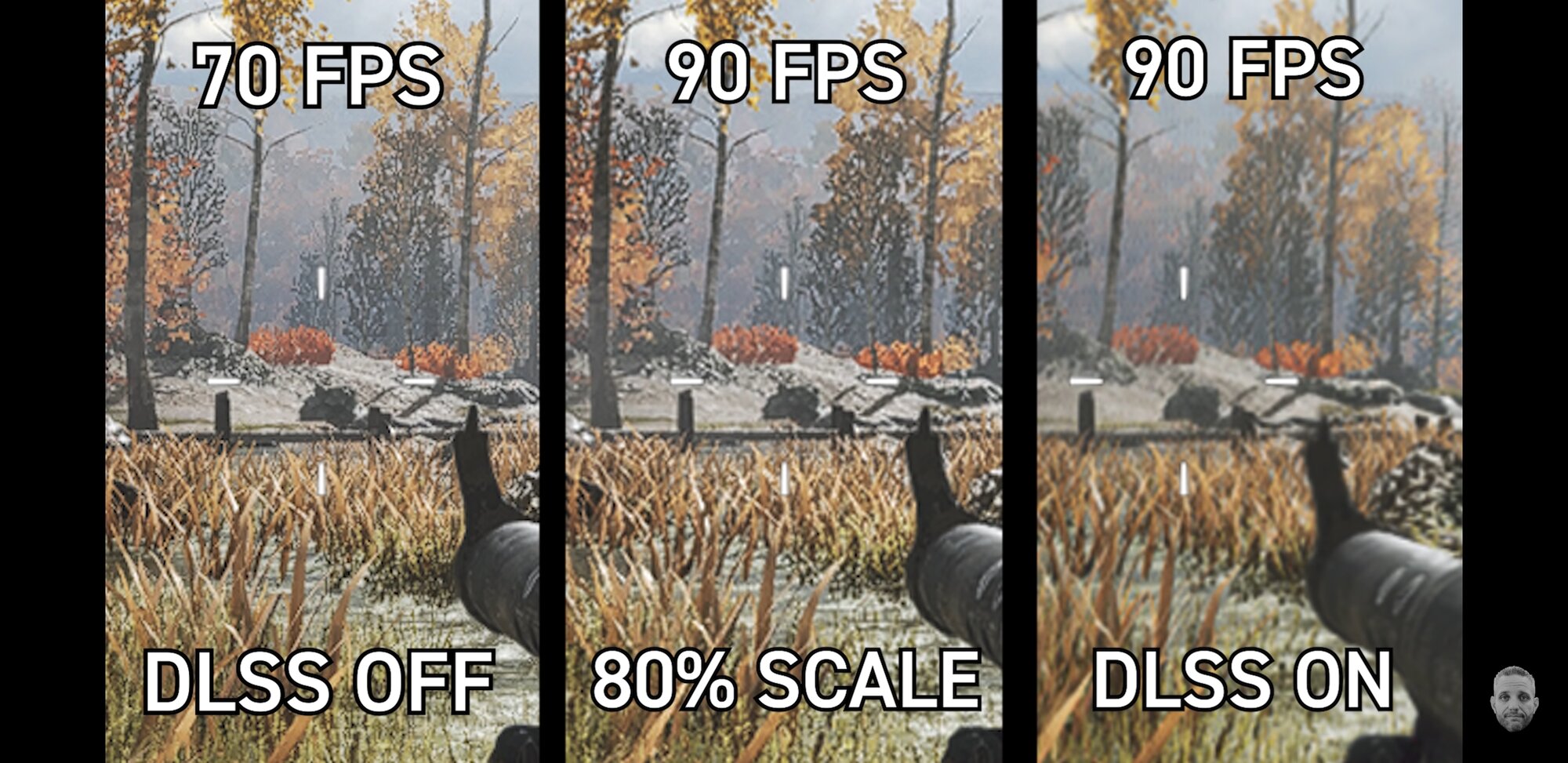

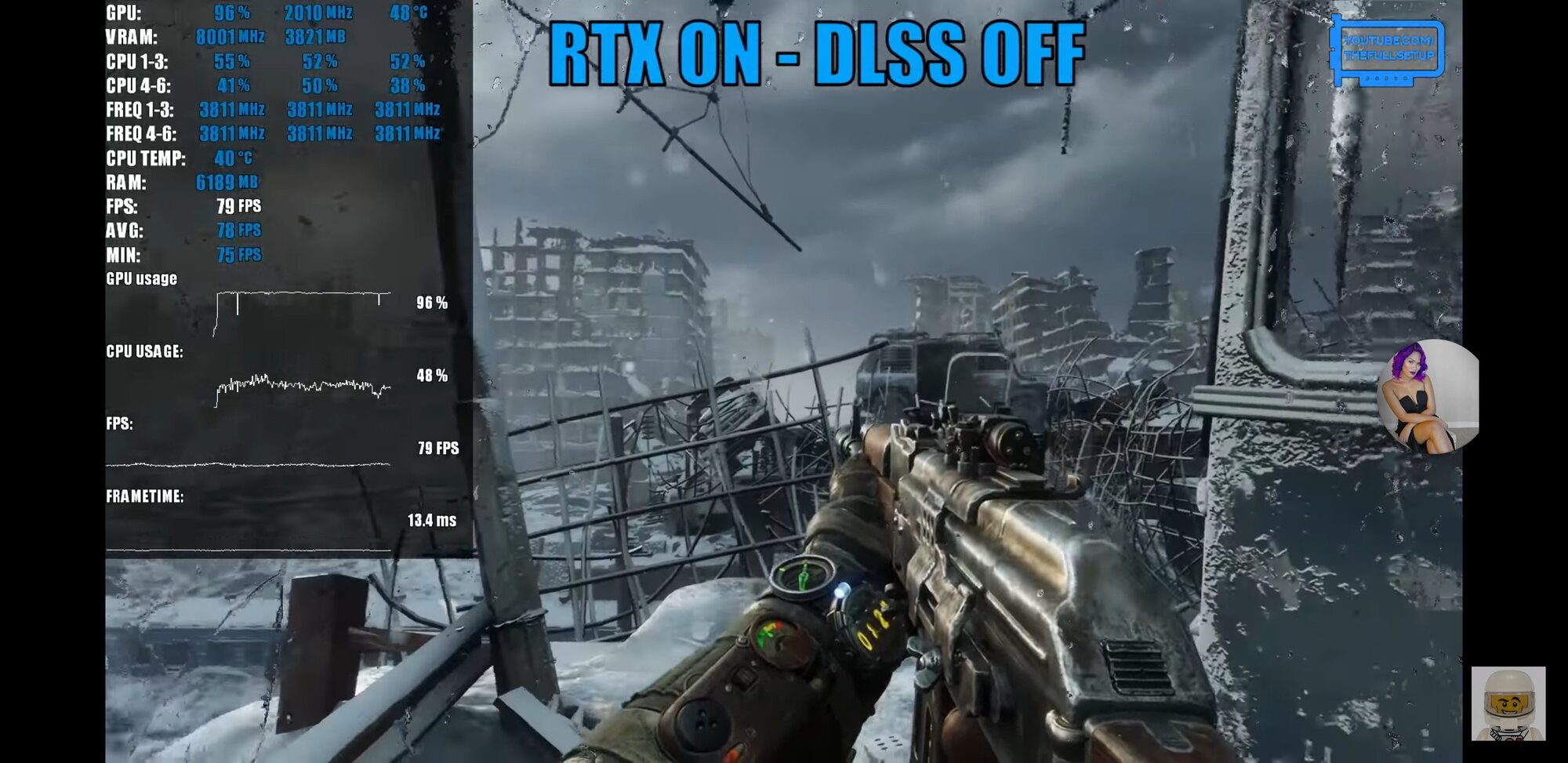

At least you can use RTX in a locked 30 or 60 fps mode in games like Metro and take advantage of it, but DLSS is completely useless, unfortunately. Whether it gets better over time, who knows, but I have a feeling NVIDIA is going to quietly drop DLSS in its next architecture and it will be a forgotten feature. Hopefully the piss-poor Turing sales spur NVIDIA to build another GPU like Pascal that is 100% for gaming, not some cut-down pro rendering card that pads their margins by treating their customers as suckers. Anyone who is even remotely unbiased will recognize that Turing is a Turd and DLSS is hot garbage.

I sat this generation out because Turing didn't offer anything that interested me and these reviews just continue reinforcing my decision. NVIDIA better hurry up and step it up before Intel gets their act together and comes after them in a big way.
