elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,296

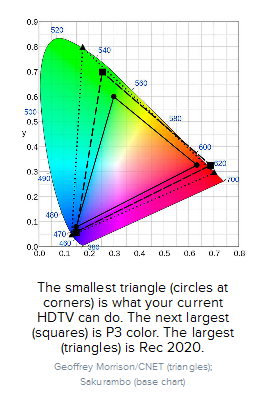

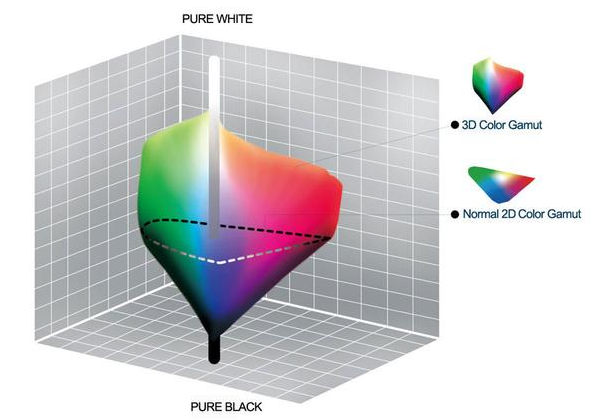

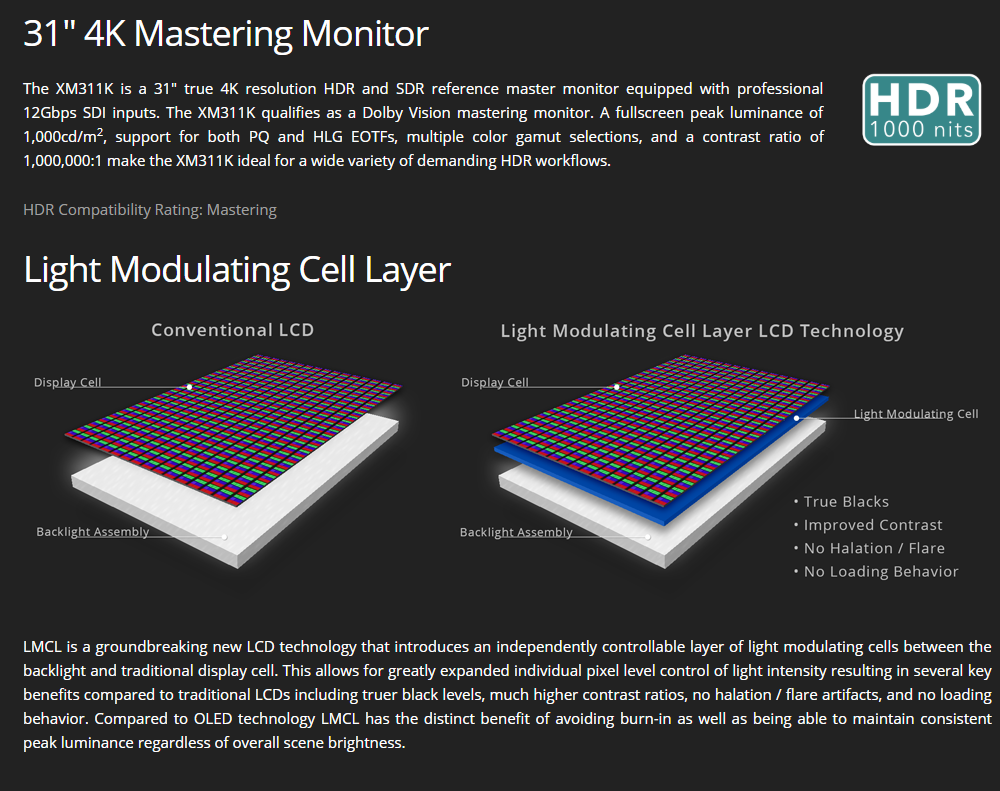

Per-pixel FALD would be very welcome, especially at the color volumes HDR content is mastered for, with luminance peaks of 1,000, 4,000, and 10,000 nits in movies and games. Even mini LED would be welcome.

On a 3840 x 2160 panel (about 8.3 million pixels):

4 pixels per light would be over 2 million backlights,

10 pixels per light would be over 800,000 backlights.

A 1 million backlight FALD would be about 8 pixels per light, and a more modest

100,000 backlight FALD would be about 83 pixels per light.

The Samsung Q9FN already does a good job, with a little dim offset, at 17,280 pixels per light (3840 x 2160 divided by a 480-zone FALD), so those much higher numbers would be a huge gain.
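If anyone wants to check the math or try other zone counts, here is a quick sketch in Python. It assumes a 3840 x 2160 panel and evenly sized zones (real zone layouts may not divide that cleanly), and the function names are just ones I made up for illustration.

```python
# Rough zone math for a 4K panel. Assumes every backlight zone covers
# the same number of pixels, which real panels may not do exactly.
PANEL_WIDTH = 3840
PANEL_HEIGHT = 2160
TOTAL_PIXELS = PANEL_WIDTH * PANEL_HEIGHT  # 8,294,400


def zones_for(pixels_per_light):
    """Number of backlights needed for a given pixels-per-light target."""
    return TOTAL_PIXELS // pixels_per_light


def pixels_per_zone(zone_count):
    """Average number of pixels each backlight has to cover."""
    return TOTAL_PIXELS / zone_count


if __name__ == "__main__":
    for ppl in (4, 10):
        print(f"{ppl} pixels per light -> {zones_for(ppl):,} backlights")
    for zones in (1_000_000, 100_000, 480):
        print(f"{zones:,} backlights -> {pixels_per_zone(zones):,.1f} pixels per light")
```

The 480 entry is the Q9FN case from above; swap in any other zone count to see how coarse the dimming grid is.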

https://www.techhive.com/article/3239350/smart-tv/will-hdr-kill-your-oled-tv.html

"manufactured by LG) don’t actually use true blue, red, and green OLED subpixels the way OLED smartphone displays do. Instead, they use white (usually, a combination of blue and yellow) subpixels with red, green, and blue filters on top. This has many advantages when it comes to large panel manufacturing and wear leveling, but filters also block light and reduce brightness.

To compensate, a fourth, white sub-pixel is added to every pixel to increase brightness. But when you add white to any color, it gets lighter as well as brighter, which de-emphasizes the desired color."
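To put a rough number on the "lighter as well as brighter" point, here is a toy Python sketch. It assumes a simple additive model where the white subpixel adds the same amount of light to R, G, and B, and it uses an HSV-style saturation measure. That is my simplification for illustration, not LG's actual drive scheme, and the function names are made up.

```python
# Toy model: adding white light raises every channel equally, so the
# color gets brighter but its saturation drops.
# Values are linear light in arbitrary units (an assumption).


def saturation(rgb):
    """HSV-style saturation: (max - min) / max, or 0 for black."""
    brightest = max(rgb)
    if brightest == 0:
        return 0.0
    return (brightest - min(rgb)) / brightest


def add_white(rgb, white):
    """Add the same amount of white light to each channel."""
    return tuple(channel + white for channel in rgb)


if __name__ == "__main__":
    pure_red = (1.0, 0.0, 0.0)
    boosted = add_white(pure_red, 0.5)
    print("red only:    ", pure_red, "saturation", round(saturation(pure_red), 3))
    print("red + white: ", boosted, "saturation", round(saturation(boosted), 3))
```

In this model a fully saturated red boosted with half as much white ends up at roughly two thirds saturation: more total light, less color purity.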

