_chesebert_

Weaksauce

- Joined: Nov 2, 2016
- Messages: 74

What is the rationale? Gaming only or mixed use?

Vega 64. I plan to sell my 1080 Ti and get a Radeon VII when it is released.


Not if you are limiting power usage... GPUs naturally clock down when not under load.

I do gaming on it... and that caused a reboot. It pulled too much power at peak. If I limit max power by 20%, it works perfectly.

If you're not loading it, then you don't need to worry about power usage (these days). What you're doing is putting in an artificial block that prevents power usage from going too high, regardless of the load.
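The point being argued here is that a power limit is a ceiling, not a constant draw: at light load the GPU clocks down on its own, and the cap only bites at peak. A minimal sketch of that arithmetic, assuming the percentage offset scales the stock board-power limit linearly (roughly how percentage power-limit sliders behave). The function names, the 300 W stock limit, and the load figures are hypothetical placeholders, not Vega 64 specs.

```python
def power_cap_w(stock_limit_w: float, offset_pct: float) -> float:
    """Board-power cap after a power-limit offset (e.g. -20 means 20% below stock)."""
    return stock_limit_w * (100 + offset_pct) / 100


def effective_draw_w(demand_w: float, cap_w: float) -> float:
    """The card draws what the load asks for, but never more than the cap."""
    return min(demand_w, cap_w)


# Hypothetical wattages for illustration only, not measured values.
cap = power_cap_w(300.0, -20)        # 240.0
idle = effective_draw_w(15.0, cap)   # 15.0: light loads are unaffected
peak = effective_draw_w(290.0, cap)  # 240.0: only the gaming peak is clipped
print(cap, idle, peak)
```

In other words, the limit costs nothing at idle; it only trims the peak that was tripping the reboot.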

I was referencing the 1080. Vega is the undisputed compute champ among the choices, but it suffers in some popular titles. I would pick Vega in general. However, it does suffer compared to Nvidia in some games I play: AC, FF XV, etc.


I do gaming on it... and that caused a reboot. It pulled too much power at peak. If I limit max power by 20%, it works perfectly.

It is a leftover mining card....

...instead of sending it back for a lower-powered card that's more appropriate for the system?

It is a leftover mining card....

And you're complaining about Afterburner?

You do realize a mining app is just an app that uses a GPU just like a game, right?

This is with level 3 textures. Playing with the settings, it will go all the way to needing a Vega 7 (Radeon VII) and its 16GB to max this game out in DX12 at 1080p.

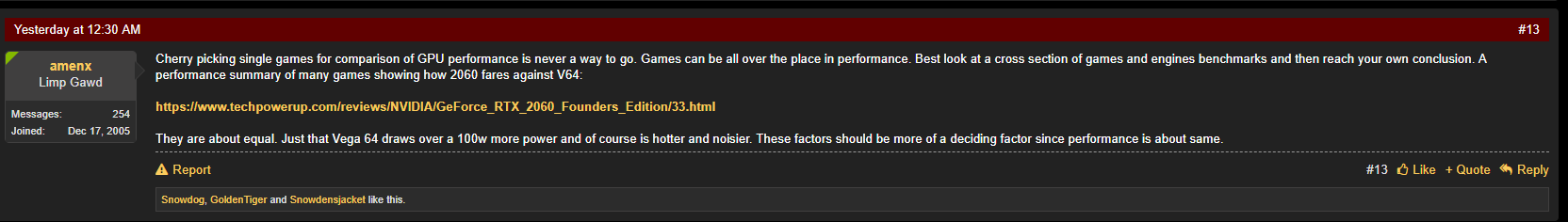

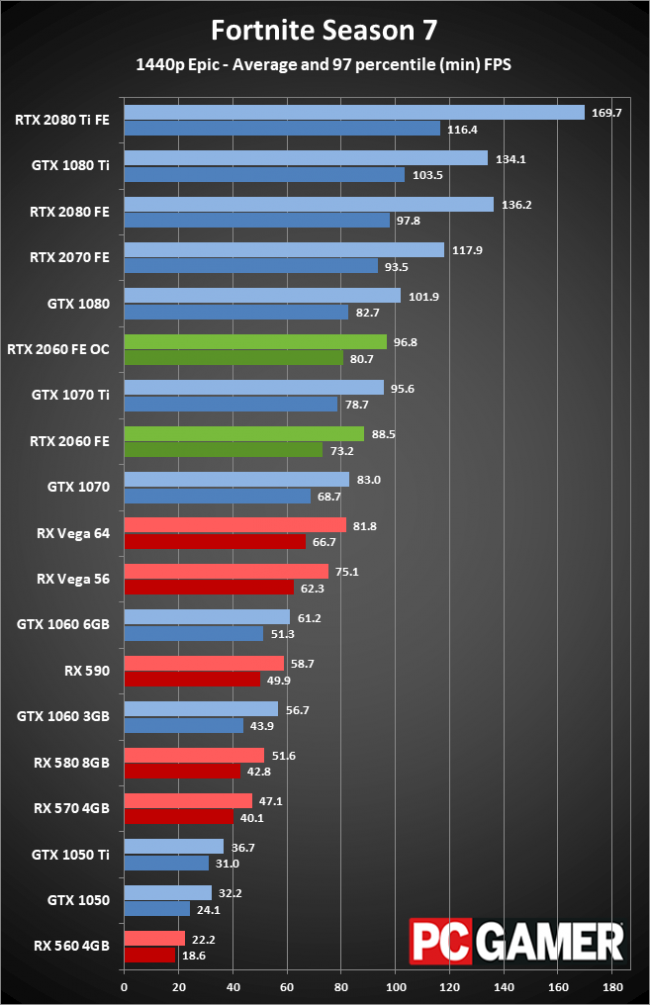

I looked at that review. The AMD cards were using Catalyst drivers, meaning old ones.

They left a lot of performance on the table by not updating their Vega benchmark set since the Adrenalin driver update came out.

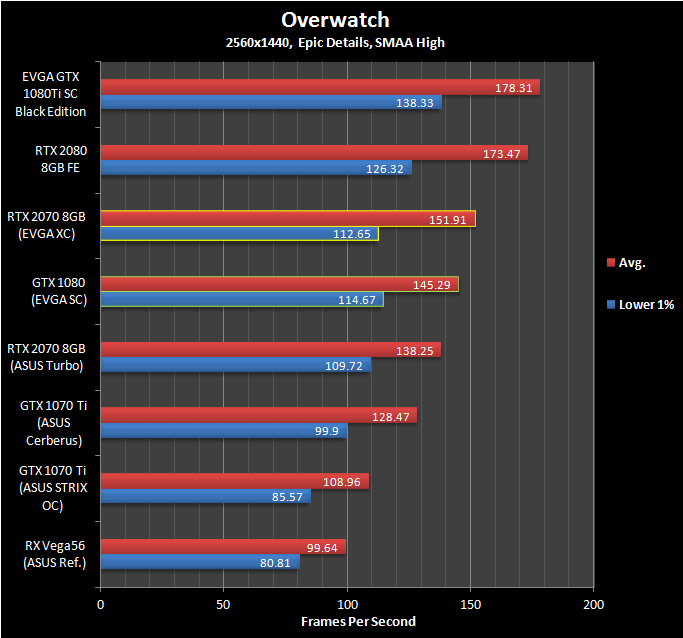

It’s not a fair comparison at all. I get not wanting to retest everything, but it’s a bit of a disservice to compare that way. When you look at newer benchmarks using the newest AMD drivers, the Vega 64 pretty much beats the 2060 on almost everything.

Why do you keep repeating stuff that can easily be verified to be false?

https://www.techspot.com/review/1781-geforce-rtx-2060-mega-benchmark/

"Our GPU test system consisted of a Core i9-9900K clocked at 5 GHz with 32GB of DDR4-3200 memory. We used AMD's Adrenalin 2019 Edition 19.1.1 drivers for the Radeon GPUs and Game Ready 417.35 WHQL for the GeForce GPUs."

If anything, the guys at TPU/HWUB are AMD fans. Not only that, but they recently did a video complaining about how NVidia shut them out of early 2060 coverage. These guys really don't like NVidia and are not getting along with them right now. They weren't going to leave AMD on old drivers, and clearly they didn't.

As much as people like to shit on NVidia, the RTX 2060 is actually a good card and a decent value in today's market.

Bad quote

Read post 24 in this thread.

If you can handle the noise and heat, the Vega 64 is the superior card. It has faster clocks that most Vega 56 cards can’t quite hit, and it has more compute units (64 vs. 56).

Hey, YOU, stop making stuff up.

The article we were talking about was not the one you just linked. Nice bait and switch there.

Oops. My mistake. Too many tech sites; I was just looking at the review on Techspot.

So I don't think drivers had that much effect.

Techspot tested across 30+ games and they used the latest Adrenalin drivers and reached essentially the same conclusion.

The 2060 performs nearly as well as the Vega 64.

And it tends to mainly be the crappy blower-design Vegas that go on sale, while there are many dual-fan 2060s at the base price.

I think the extra $$$ you pay for the Vega 64's extra 2GB of RAM over the 2060 is money well spent, blower fan or not. If you change video cards yearly, I suppose it doesn't matter. If you don't mind using lower-res textures in some cases, it doesn't matter either.

In any case, I'd pick up a used 1070 for $200-$225 on eBay before I'd even think of picking up a 2060, due to the RAM bonus alone.

I'd take a 1080 over a 2060 every day of the week, and twice on Sunday.

Is it a lot more for a non-blower? I would never consider an AMD blower... especially at Vega 64 wattages. The only way I see it making sense is if you live alone and wear a headset all the time.

IIRC OP went for a used 1080 in the same price bracket.

Lol, in what, two games?

It's slower than a 1080,

Many games load more VRAM than needed simply because the card has more VRAM. It's called caching. For a proper test, you need the SAME exact card in both 4GB and 8GB versions, and then you compare the performance. There are a few examples floating around the web, and yes, you will see minor differences, but then again, that's 4GB vs 8GB, and 6GB narrows the gap further.

I just so happen to also have an XFX RX 570 RS 4GB card if you would like me to load it up and see. The clocks are a little higher, but the VRAM is the question, not the clocks.

Agreed, and doesn't NVidia make slightly more efficient use of memory as well? Until I see some evidence, I don't see 6GB NV vs 8GB AMD as a big issue.
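The controlled test described above (same card, only the VRAM size differs) boils down to a per-game percentage comparison. A minimal sketch, assuming you already have average-FPS numbers for each variant; the function name and the game/FPS values are hypothetical, for illustration only.

```python
def vram_delta_pct(fps_small: dict, fps_large: dict) -> dict:
    """Per-game % FPS change of the larger-VRAM variant vs. the smaller one.

    Both dicts map game name -> average FPS and must come from the SAME GPU
    model at the same clocks and settings, so VRAM size is the only variable.
    """
    shared = fps_small.keys() & fps_large.keys()  # only compare games run on both
    return {
        game: 100.0 * (fps_large[game] - fps_small[game]) / fps_small[game]
        for game in sorted(shared)
    }


# Hypothetical results for illustration; not real benchmark data.
small = {"Game A": 60.0, "Game B": 80.0}
large = {"Game A": 63.0, "Game B": 80.0}
print(vram_delta_pct(small, large))  # {'Game A': 5.0, 'Game B': 0.0}
```

Matching clocks matters here: if one variant runs higher clocks, part of any difference comes from the clocks rather than the memory size.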

Pretty much.

It's slower than a 1080, and it still uses more power for gaming. I recognize Vega's raw compute capacity, but it should also be stated that it is not a decision point in and of itself. Given AMD's less advanced software platform, applications need to be evaluated on a case-by-case basis; in particular, anything that runs well in CUDA is worth taking a close look at.

So you like spending cash and getting no return...?

My God, I wouldn't want to be in the same room!

Building this machine for a friend:

https://hardforum.com/threads/1600-all-in-gaming-build-quick-gut-check.1976067/

His primary game right now is PubG, and he spends a lot of time in that title. He plays almost exclusively FPS titles.

- MSI 34" ultrawide 3440x1440 100Hz FreeSync monitor ($380) - www.microcenter.com/product/512508/optix-mag341cq-34-uw-qhd-100hz-dvi-hdmi-dp-freesync-curved-gaming-led-monitor#

- Ryzen 2700

- 16GB RAM

What video card would you recommend?
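For context on how demanding that monitor is, here is a back-of-the-envelope comparison of pixels rendered per frame against a standard 1080p display. This is a rough sketch, assuming GPU load scales with pixel count, which is only an approximation (CPU limits and per-game settings matter too); the helper name is hypothetical.

```python
def pixels_per_frame(width: int, height: int) -> int:
    """Number of pixels the GPU renders for one frame at this resolution."""
    return width * height


ultrawide = pixels_per_frame(3440, 1440)  # 4,953,600 pixels
full_hd = pixels_per_frame(1920, 1080)    # 2,073,600 pixels
ratio = ultrawide / full_hd

# To fill the monitor's 100 Hz, the GPU would need this many pixels per second.
print(ultrawide * 100)   # 495360000
print(round(ratio, 2))   # 2.39
```

So each ultrawide frame is roughly 2.4 times the work of a 1080p frame, before even trying to hit 100 FPS.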

You should just return everything and get this system for $1400

https://www.amazon.com/CYBERPOWERPC...07H7Q34X5/ref=cm_cr_arp_d_product_top?ie=UTF8


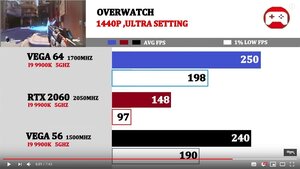

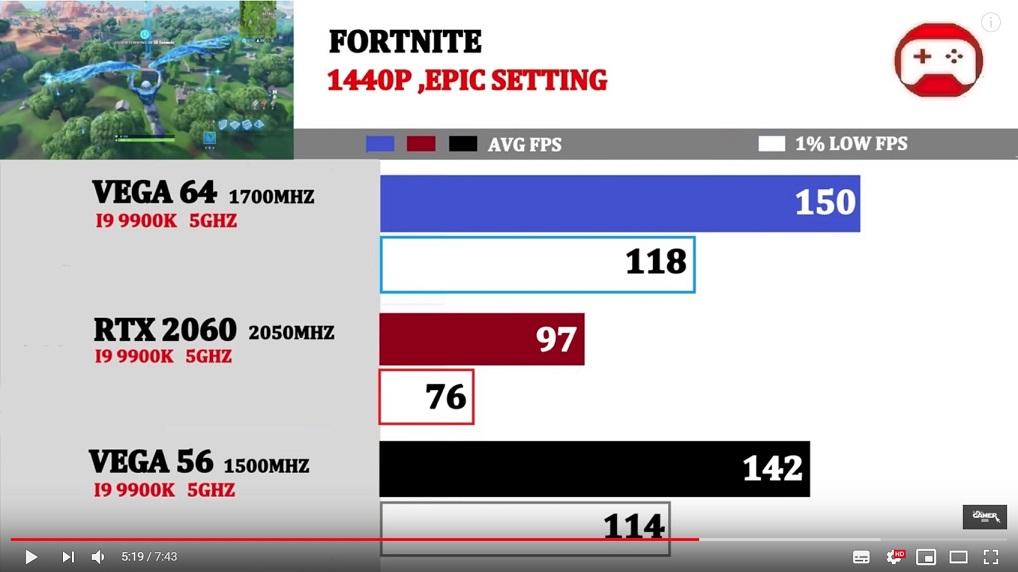

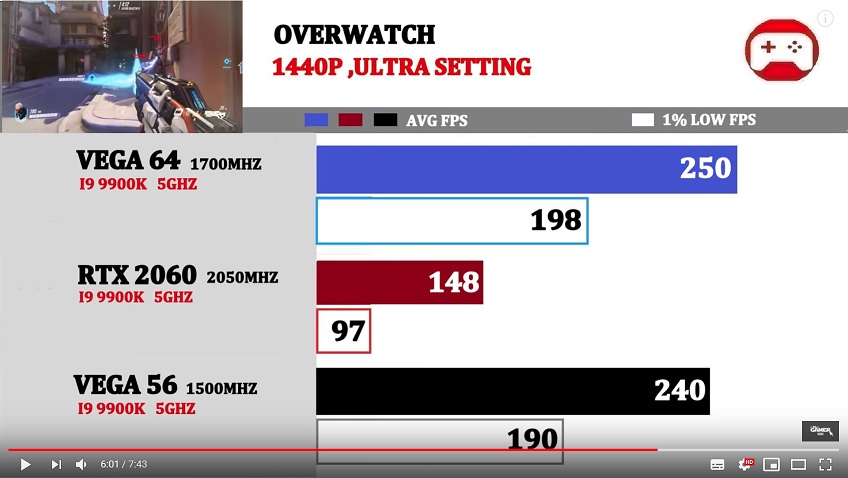

Here’s a video that actually uses the current Adrenalin drivers, and the Vega 64 steamrolls the 2060 in almost everything, including Assassin's Creed and PUBG (where older AMD Vega drivers lose hard to the Nvidia 2060). This video paints a very different picture. So much so that I deleted my previous video post, because it is pretty obvious they were using old, recycled benchmark numbers from previous tests with very old drivers (like TechPowerUp).

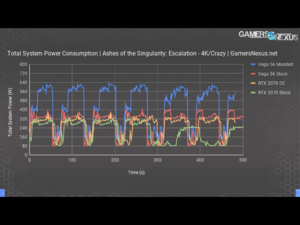

Pretty sure the 2060 will have some voltage limitations like the others, but it'd be quite funny to see. I'm going to see how much power someone managed to get a Vega to suck down too lol... God damn, they have bigger power circuits than the 2080 Ti lmao!

Something is really fishy about those results.

...

This review looks incredibly cooked.

I can’t run AC Origins and Odyssey at 100% GPU utilization at 1080p using an R7 1700X at 4GHz. Something is still not right with the AMD 2019 driver.

Yep. That's what happens when someone scours the internet for a result to fit their agenda, instead of sticking to reasonably reliable sources.