cageymaru

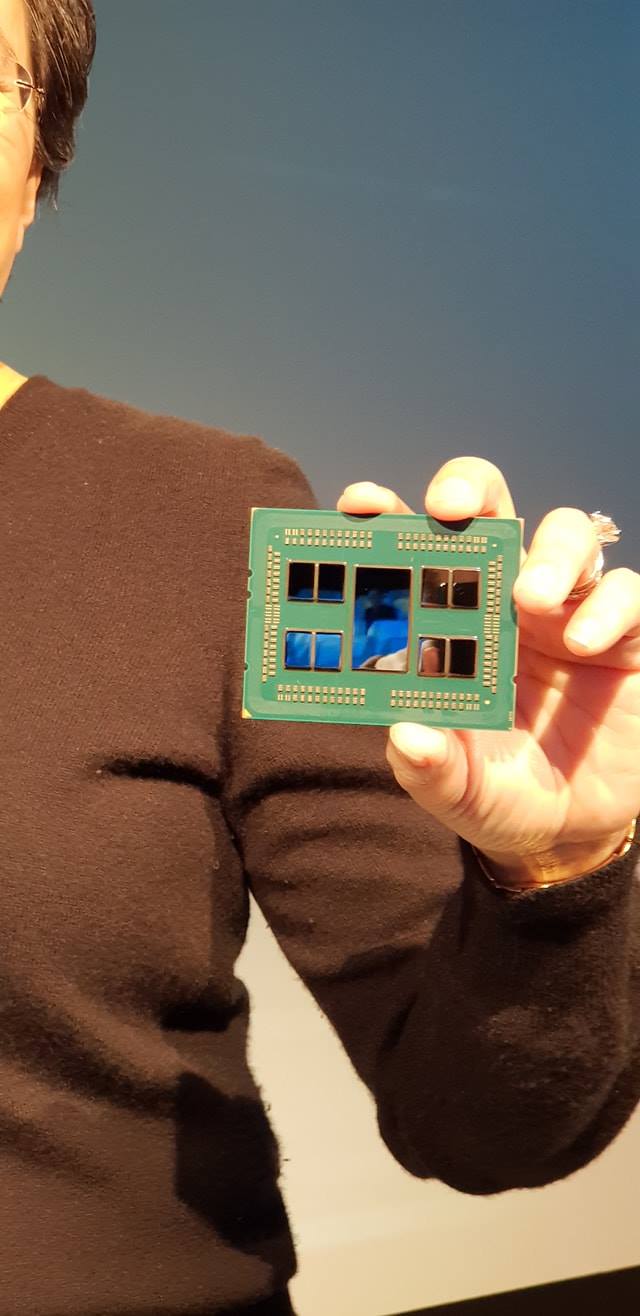

AMD has unveiled the world's first lineup of 7nm GPUs for the datacenter, which will use an all-new version of the ROCm open software platform for accelerated computing. "The AMD Radeon Instinct MI60 and MI50 accelerators feature flexible mixed-precision capabilities, powered by high-performance compute units that expand the types of workloads these accelerators can address, including a range of HPC and deep learning applications." They are designed for datacenter workloads such as rapidly training complex neural networks, delivering higher floating-point performance and greater efficiency.

The new "Vega 7nm" GPUs are also the world's first GPUs to support the PCIe 4.0 interconnect, which is twice as fast as other x86 CPU-to-GPU interconnect technologies. They also feature AMD Infinity Fabric Link GPU interconnect technology, which enables GPU-to-GPU communication that is six times faster than PCIe Gen 3. The AMD Radeon Instinct MI60 accelerator is also the world's fastest double-precision PCIe accelerator, with 7.4 TFLOPS of peak double-precision (FP64) performance.
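For anyone wondering where the "twice as fast" PCIe figure comes from, here is a rough back-of-the-envelope sketch. It is not from AMD's announcement. It assumes the published PCIe signaling rates (8 GT/s per lane for Gen 3, 16 GT/s for Gen 4), 128b/130b encoding, and an x16 link, and it ignores protocol overhead. The function and constant names are made up for illustration.

```python
# Approximate per-direction PCIe throughput from the published signaling rates.
# Assumption: both generations use 128b/130b line encoding and no other
# protocol overhead is counted, so real-world numbers will be lower.
ENCODING_EFFICIENCY = 128 / 130

# Gigatransfers per second, per lane (hypothetical lookup table for this sketch).
TRANSFER_RATE_GT_S = {"PCIe 3.0": 8.0, "PCIe 4.0": 16.0}


def pcie_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Return the theoretical one-direction bandwidth of a link in GB/s."""
    gigabits_per_s = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_s / 8  # convert bits to bytes


if __name__ == "__main__":
    for gen in TRANSFER_RATE_GT_S:
        print(f"{gen} x16: {pcie_bandwidth_gb_s(gen):.2f} GB/s per direction")
```

Under those assumptions an x16 link works out to roughly 15.75 GB/s per direction on Gen 3 and about 31.5 GB/s on Gen 4, which is where the 2x comes from.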

"Google believes that open source is good for everyone," said Rajat Monga, engineering director, TensorFlow, Google. "We've seen how helpful it can be to open source machine learning technology, and we're glad to see AMD embracing it. With the ROCm open software platform, TensorFlow users will benefit from GPU acceleration and a more robust open source machine learning ecosystem." ROCm software version 2.0 provides updated math libraries for the new DLOPS; support for 64-bit Linux operating systems including CentOS, RHEL and Ubuntu; optimizations of existing components; and support for the latest versions of the most popular deep learning frameworks, including TensorFlow 1.11, PyTorch (Caffe2) and others.
