cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,089

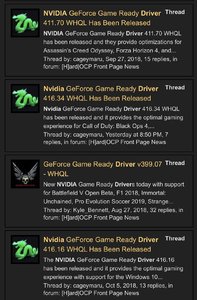

Nvidia GeForce Game Ready Driver 416.34 WHQL has been released and it provides the optimal gaming experience for Call of Duty: Black Ops 4, SOULCALIBUR VI, and GRIP.

Fixed issues in this release include:

- Games launch to a black screen when DSR is enabled. [2411501]
- Some games produce red/green/blue shimmering lines when played in full-screen mode with G-SYNC enabled. [2041443]
- [Windows Defender Application Guard][vGPU][Surround]: Surround cannot be enabled from the NVIDIA Control Panel when running Edge Browser with Application Guard over vGPU. [200444614]
- [PUBG]: Issue with shadows may occur in the game. [2414749]
- When HDR is enabled, games show green corruption. [2400448]

The release notes are located here.

Windows 10 issues:

- [Windows Defender Application Guard][vGPU][Surround]: Edge Browser with Application Guard cannot be opened when Surround is enabled. [200443580]
- [GeForce GTX 1060]: AV receiver switches to 2-channel stereo mode after 5 seconds of audio idle. [2204857]
- [GeForce GTX 1080Ti]: Random DPC watchdog violation error when using multiple GPUs on motherboards with PLX chips. [2079538]
- [SLI][HDR][Battlefield 1]: With HDR enabled, the display turns pink after changing the refresh rate from 144 Hz to 120 Hz using in-game settings. [200457196]
- [Firefox]: Cursor shows brief corruption when hovering on certain links in Firefox. [2107201]
- [Far Cry 5]: Flickering occurs in the game. [2400207]

