Hardware Unboxed Analyzes Intel's Commissioned Core i9-9900K Benchmarks

- Thread starter cageymaru

- Start date

Zion Halcyon

2[H]4U

- Joined

- Dec 28, 2007

- Messages

- 2,108

"AMD does the same thing."

I don't think so.

Check out the latest post on YouTube:

"It's even worse than we thought, Principled Technologies are running the 2700X as a quad-core."

One of the first things that I have noticed that shills and fanboys do, when their side is caught red-handed doing something they shouldn't, is to globalize it in order to legitimize it.

I have always found the "everyone else does it" arguments to be a fallacy and at best uneducated, and at worst disingenuous.

Game mode was introduced with and for Threadripper.

https://www.anandtech.com/show/1172...-game-mode-halving-cores-for-more-performance

For the launch of AMD’s Ryzen Threadripper processors, one of the features being advertised was Game Mode

It's not advertised for anything AM4 that I have seen. It is being used inappropriately to make the 2700X look bad.

I have a 2700 but no DDR4 yet, so I can’t check for myself, but what I’m curious about is whether the software gives any indication of what Game Mode will do when enabled, i.e., does it plainly say it halves the cores and is designed for CPUs with more than 8 cores?

If it isn’t plainly evident I could see the uninformed accidentally seeing the name and enabling it. It’s right in line with Autopilot in terms of being poorly named if that’s the case.

Pantalaimon

Limp Gawd

- Joined

- Aug 17, 2006

- Messages

- 200

I have a 2700 but no DDR4 yet, so I can’t check for myself, but what I’m curious about is whether the software gives any indication of what Game Mode will do when enabled, i.e., does it plainly say it halves the cores and is designed for CPUs with more than 8 cores?

If it isn’t plainly evident I could see the uninformed accidentally seeing the name and enabling it. It’s right in line with Autopilot in terms of being poorly named if that’s the case.

Assuming the user reads the user guide. It says there 'The Game Mode profile is pre-configured for optimal performance for most modern and legacy games with these fixed settings: 1. Legacy Compatibility Mode on, and 2. Memory Access Mode to Local. With Legacy Compatibility Mode on, core disabling control (3) is not allowed as the cores have been reduces by half of the processor's capacity for processors with more than 4 physical cores.'

TheHig

[H]ard|Gawd

- Joined

- Apr 9, 2016

- Messages

- 1,347

One of the first things that I have noticed that shills and fanboys do, when their side is caught red-handed doing something they shouldn't, is to globalize it in order to legitimize it.

I have always found the "everyone else does it" arguments to be a fallacy and at best uneducated, and at worst disingenuous.

Indeed. Let’s remember to call out the BS wherever it’s found and not get caught up in “but they do/did it too!” Big AMD guy myself but shenanigans are shenanigans and bad information is bad for us consumers. This review is terribly incompetent at best and possibly skewed at worst.

If Game mode is known to not work correctly on Ryzen, why even have the option there? It's really not hard to have your software look for descriptors and configure itself based on that.

Who says it's not working correctly?

It has its place and works well for some old games.

It's not appropriate to use this for benchmarking the latest and greatest games, or at least to be fair you need to try both ways.

This move by Intel was deliberate. It's not the stock, default setting by this software, and the software isn't even needed in the first place.

Intel deliberately found a slimy way to cut AMD off at the knees and they ran with it. They hoped nobody would notice, but they did.

I'm not 100% sold on deception as opposed to mere incompetence. Still, it deserves retraction, apology, and reissuance of test data.

Monkey34

Supreme [H]ardness

- Joined

- Apr 11, 2003

- Messages

- 5,140

Christ. These days if you build a 9900K system with a 2080Ti, you're basically building Hitler's PC.

Proof found:

Parja

[H]F Junkie

- Joined

- Oct 4, 2002

- Messages

- 12,670

interview with a PT

Poor guy. He didn't seem to understand half of what Steve was saying.

bigdogchris

Fully [H]

- Joined

- Feb 19, 2008

- Messages

- 18,707

https://www.techpowerup.com/248407/...d-technologies-retesting-amd-ryzen-processors

Well, it was an owner handling a PR disaster. He would be a coward if he asked someone else to do it. He was pretty clear that he wasn't doing the technical part. I give the guy credit for not wanting to be there but doing it anyway, because it would have turned out even worse had they not.

TheHig

[H]ard|Gawd

- Joined

- Apr 9, 2016

- Messages

- 1,347

Ok so they were hand picked and did what they were told. A savvy tech journalist wouldn’t have fallen for it.

Patsy confirmed. I almost feel bad for them.

Ok so they were hand picked and did what they were told. A savvy tech journalist wouldn’t have fallen for it.

Patsy confirmed. I almost feel bad for them.

Contract is what it is. If someone wanted to pay me to benchmark a Threadripper with a marshmallow for a heatsink to see how far it goes, well, let's call it s'more time!

dragonstongue

2[H]4U

- Joined

- Nov 18, 2008

- Messages

- 3,162

If mighty Intel was "truly" unbiased to show off their "amazing product" they would have used their $$$$$$$$ might to show off the best result possible (beyond exotic cooling) for themselves and AMD and not purposefully find ways to make themselves look great while systematically making AMD look "bad"

simple thing such as the cooler, use stock cooler for one but using a very great cooler for the other, why not use this great cooler for both?

64GB of memory is not "standard" gamer usage (not IMO), but they likely could have settled on a "happy medium" so that the Intel and AMD systems being tested were "hampered" by memory speed/timings as little as possible, so that the "chips" are the only thing that end up having to do the boxing, so to speak.

ahh well, hopefully enough people see through the deceptions attempted here (doubtful)

Space_Ranger

Gawd

- Joined

- Jul 13, 2007

- Messages

- 634

If mighty Intel was "truly" unbiased to show off their "amazing product" they would have used their $$$$$$$$ might to show off the best result possible (beyond exotic cooling) for themselves and AMD and not purposefully find ways to make themselves look great while systematically making AMD look "bad"

simple thing such as the cooler, use stock cooler for one but using a very great cooler for the other, why not use this great cooler for both?

64GB of memory is not "standard" gamer usage (not IMO), but they likely could have settled on a "happy medium" so that the Intel and AMD systems being tested were "hampered" by memory speed/timings as little as possible, so that the "chips" are the only thing that end up having to do the boxing, so to speak.

ahh well, hopefully enough people see through the deceptions attempted here (doubtful)

Anyone from [H] would know that deception is rampant here, but that's not the majority of the customer base. Average Joes will not know and will buy into the bullshit, hook, line and sinker.

In fairness, average Joe won't know the difference. Sad, and absolutely true.

TheMadHatterXxX

2[H]4U

- Joined

- Sep 7, 2004

- Messages

- 3,021

Contract is what it is. If someone wanted to pay me to benchmark a Threadripper with a marshmallow for a heatsink to see how far it goes, well, let's call it s'more time!

Probably, but how far does that go before you're outed as a shill and your reputation goes down in the dumps?

- Joined

- May 18, 1997

- Messages

- 55,634

I don't get the outrage. Everyone will tell you, "Don't trust paid for reviews and analysis!" Then we see a paid for review and we get, "OH MY GOD WE CAN'T TRUST THESE PAID FOR REVIEWS!" Who even takes time to pore over that shit? It is bad marketing to be sure, and seems to be done badly, but I honestly have to wonder if it was intentional. Kinda reminds me of JaysTwoCents thinking since he can install an AIO, he is now a hardware reviewer and analyst.

Particularly with what the industry calls "analysts," this stuff happens EVERY DAY. Say Qualcomm wants to put a specific message out. They go to Mr. Analyst and say, "We will pay you $20,000 to do some testing for us." At that point, Qualcomm gives the analyst the exact parameters that the test will cover. Qualcomm already knows the exact results, they just need to point to Mr. Analyst as being "independent." The thing that most likely happened here is that Intel hired idiots and did not give them the exact parameters of how to test.

On a side note, nvidia and AMD have also done "questionable" benchmarks

Cheating to make your product look faster is qualitatively different than kneecapping the competition to make it look slower, which is what[1] happened here.

[1] The way I phrased it implies it was done on purpose. I acknowledge it might have been ignorance.

ghostwich

2[H]4U

- Joined

- Sep 10, 2014

- Messages

- 2,237

https://en.wikipedia.org/wiki/Hanlon's_razor

However...

Intel's MG&A expenditure in 2018 is approximately $3.6B.

Inacurate

Gawd

- Joined

- Aug 25, 2004

- Messages

- 520

The biggest takeaway, aside from the obvious that PT is incompetent, is how modern CPUs continue a trend of relying heavily on other components in the system, and it's no longer simply a "raw power" numbers game.

- Joined

- May 18, 1997

- Messages

- 55,634

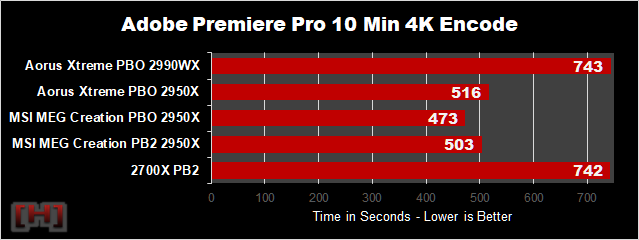

Two months and still trying to get AMD to tell me why 2990WX is broken in Premiere Pro and HandBrake. HandBrake is not that big a deal as it can be easily worked around, but PP is a HUGE negative to the 2990WX. PP was the main reason I wanted one in my personal box. It scores the same as a 2700X.

funkydmunky

2[H]4U

- Joined

- Aug 28, 2008

- Messages

- 3,871

Seems pretty clear. Oops, we did a little bad on the heat sink and RAM. But they clearly knew, and at Intel's direction, what Gaming mode was doing. They even admitted they had negative results with the 2700X, which was the target. It is Intel taking advantage of AMD's sloppiness.

AMD can't call it Gaming mode. It needed to be labeled TR Gaming and Ryzen legacy mode. Or more simply Optional Core Mode (OCM). This is AMD still allowing too many things to slip between their fingers. Attention to detail has been a downfall for a long time.

dgz

Supreme [H]ardness

- Joined

- Feb 15, 2010

- Messages

- 5,838

Eh, what are you talking about? This was the other way around, and it was one of the most dishonest things Intel has done. They used the fact that their compiler was the de facto standard to cripple programs for AMD CPUs. Instead of checking just the flags for availability of MMX, SSE, etc. instructions, the compiler also checked whether the CPU was Intel and did not enable any extra instruction set optimizations otherwise.

I thought it was obvious but oh well. Failing at my job, failing at sarcasm. Clearly not my week.

Haha, are you crazy? With the things I see posted on forums by fanboys of one company or the other, how can you possibly tell sarcasm if there is no other indication?

There is actually a thing called "alternative facts" now...

bigdogchris

Fully [H]

- Joined

- Feb 19, 2008

- Messages

- 18,707

https://www.techpowerup.com/248715/...re-over-intels-pt-benchmarks-for-9th-gen-core

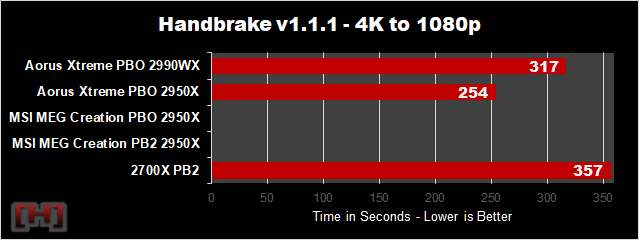

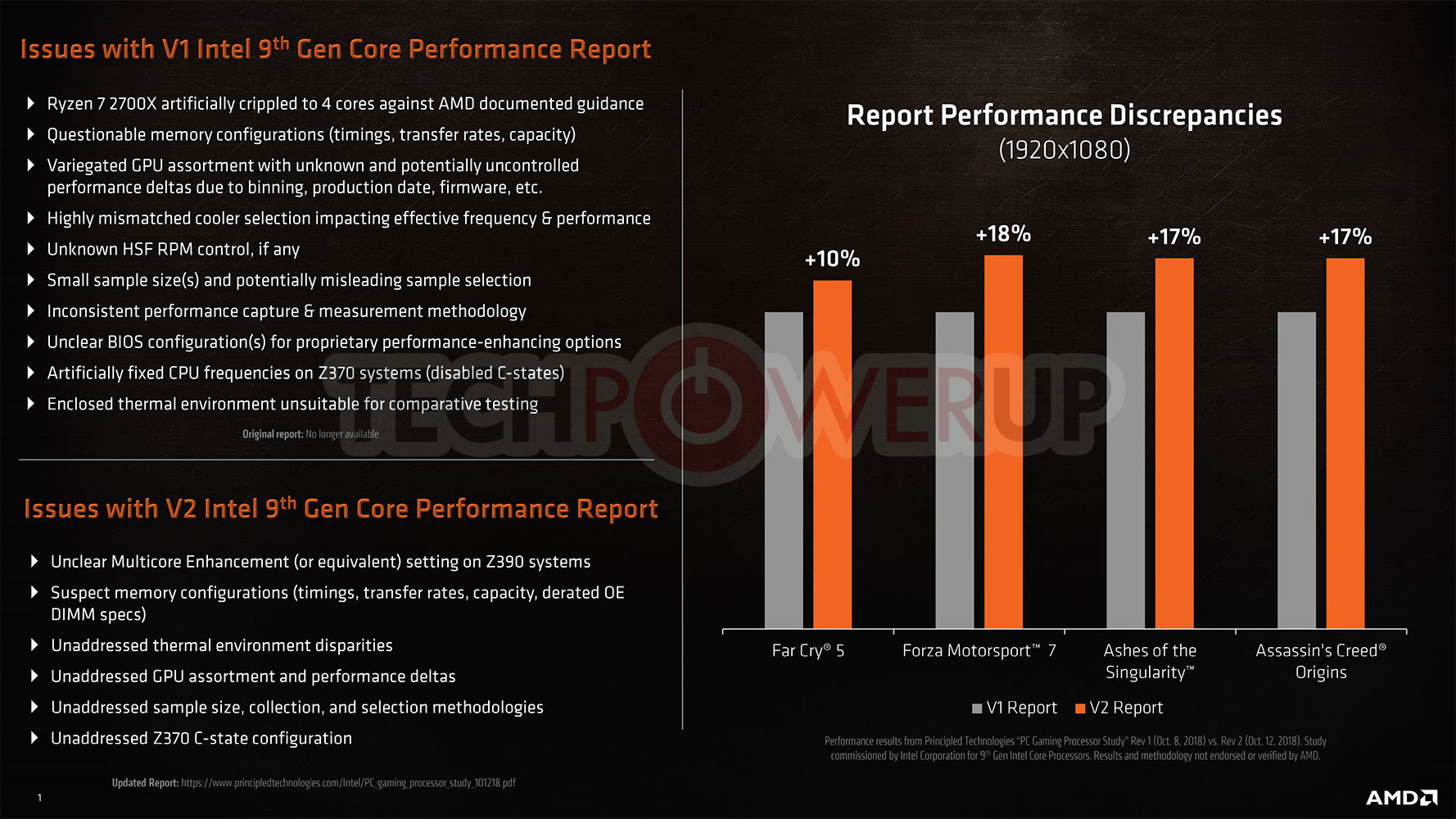

AMD gave its first major reaction to the Principled Technologies (PT) controversy...
