- Joined

- May 18, 1997

- Messages

- 55,601

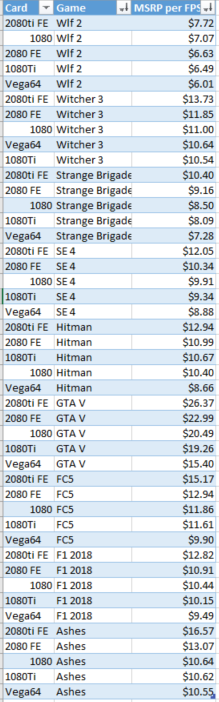

https://www.hardocp.com/news/2018/09/20/nvidia_dlss_analysis/

To be clear, I am saying it is stupid to buy a 1080Ti right now (though it was a great purchase 18 months ago). This is because of the potential performance improvement from DLSS if you run AA in any of the games you play.

The games slated for DLSS are here.

The question is when, not if. Buying a 1080Ti will get you a few months of fun and then regret when DLSS is supported in one of the games that you play.

Of course, this assumes DLSS works as advertised, which is why I say "buy 2080 and hope."
