Hello,

I'm looking for some information on the difference between the PCIe lanes on the PCH and the ones provided by the CPU, as I have concerns about potential I/O and bandwidth limitations with an upcoming build.

To give a practical example:

I'm planning to run no more than one GPU (a GTX 1080 for now), but I would like to reserve all 16 PCIe 3.0 lanes provided by the CPU for a future graphics card upgrade. My thinking is that I might run into a bottleneck with next-gen GPUs (the ones after the recently announced RTX 20 series) if I reserve only 8 lanes.

Adding to that, let's say I want to run:

one x4 PCIe Optane SSD (4 lanes), three x4 M.2 PCIe SSDs (12 lanes), and two SATA drives. No RAID planned.

I found a lot of seemingly conflicting information on the web, so I'd be happy if someone could clear up my misconceptions.

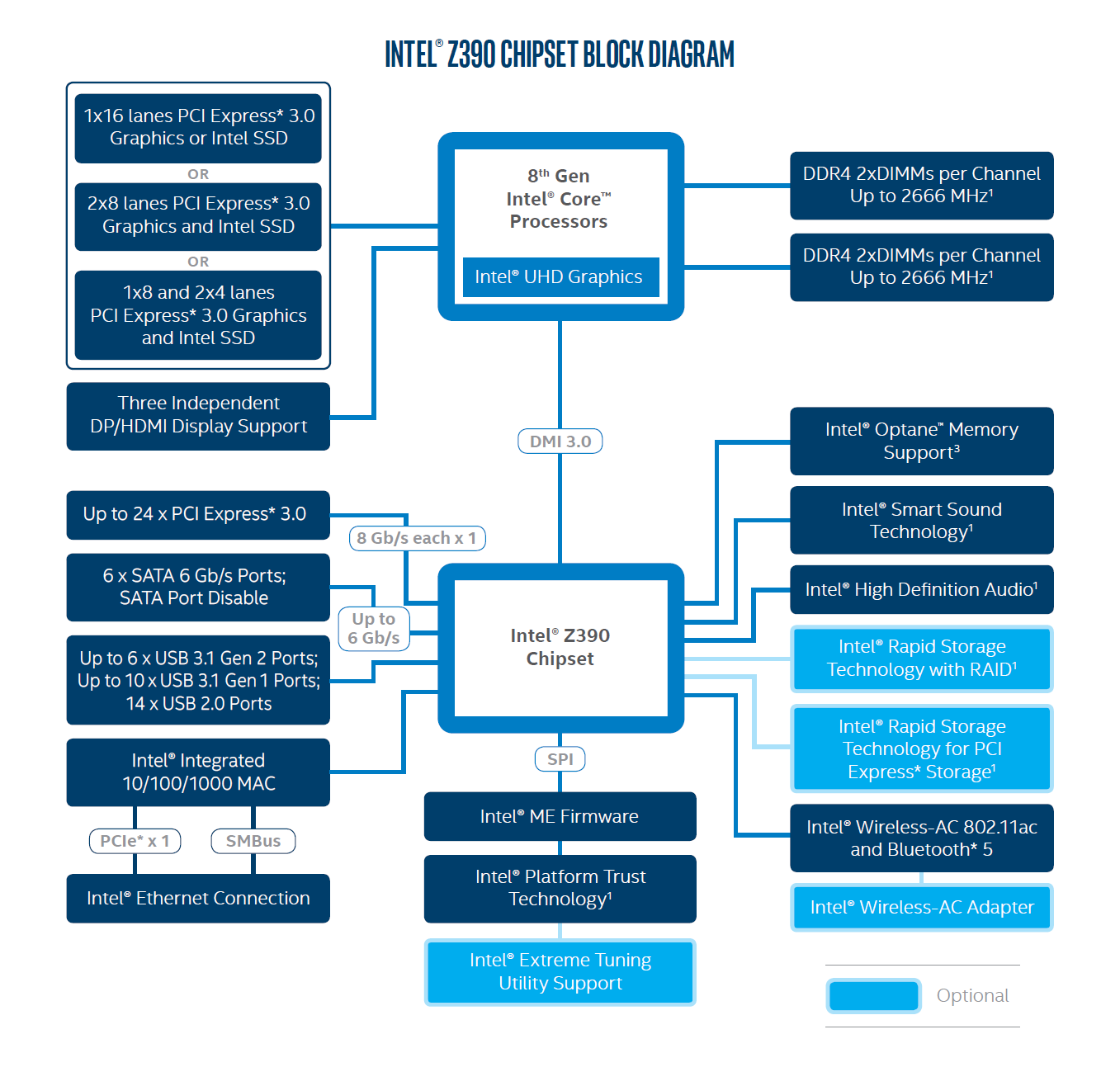

Let's take the upcoming Z390 for example: 16 PCIe 3.0 lanes provided by the CPU, 24 PCIe 3.0 lanes provided by the chipset.

Does this mean I have 40 PCIe 3.0 lanes available in total, all usable simultaneously at full bandwidth? Or do the additional chipset lanes ultimately have to go through the 16 lanes provided by the CPU, meaning that the total bandwidth is effectively limited to the equivalent of 16 lanes at any one time?

I know that the PCH can only assign its lanes in chunks of x4, but can all 24 of these lanes (or 6 * x4 lanes) be freely used for add-in cards, or are some of them already reserved for other things like RAID, USB, network, and so on? If it's the latter, how many of those 24 lanes can I effectively use without taking lanes away from other parts of the board?

I have read elsewhere that the connection from the PCH to the CPU is an x4 PCIe 3.0 DMI link on the X299 platform. I assume it's the same on the Z370 or the upcoming Z390 platform? How exactly does this DMI link work? Does this link take up 4 of the 16 CPU lanes? Do all 24 chipset lanes in our example share the same x4 link, creating a bottleneck?
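To put rough numbers on my worry, here is a quick back-of-the-envelope sketch in Python. It uses the theoretical PCIe 3.0 rate (8 GT/s per lane with 128b/130b encoding) and assumes, purely for illustration, that the PCH uplink behaves like a PCIe 3.0 x4 link as reported for X299. Whether that holds for Z390, and whether my storage devices would all hang off the PCH, is exactly what I'm asking, so treat the names and the `uplink_lanes` value as my assumptions.

```python
# Theoretical PCIe 3.0 throughput: 8 GT/s per lane, 128b/130b encoding.
GT_PER_S = 8.0
ENCODING = 128 / 130


def lane_gbytes_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe 3.0 link."""
    return lanes * GT_PER_S * ENCODING / 8  # divide by 8: bits -> bytes


# Planned PCIe storage devices from my example, with lanes per device.
planned = {"Optane SSD": 4, "M.2 SSD 1": 4, "M.2 SSD 2": 4, "M.2 SSD 3": 4}

storage_lanes = sum(planned.values())
uplink_lanes = 4  # ASSUMPTION: DMI is equivalent to PCIe 3.0 x4 (as reported for X299)

storage_bw = lane_gbytes_per_s(storage_lanes)
uplink_bw = lane_gbytes_per_s(uplink_lanes)

print(f"Storage lanes requested: {storage_lanes}")
print(f"Peak storage bandwidth:  {storage_bw:.2f} GB/s")
print(f"Assumed uplink bandwidth: {uplink_bw:.2f} GB/s")
print(f"Oversubscription ratio:  {storage_bw / uplink_bw:.1f}x")
```

If that assumption is right, the four SSDs together could in theory ask for about four times what the uplink can carry, though only when all of them are saturated at the same moment.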

