When was that disproven? I don't remember reading about it, but I could have easily just missed it.

I disproved it in my own testing, and others repeated the tests and posted their results on the forums to back me up. There is zero performance hit from HDR on NVIDIA as long as you don't use chroma subsampling. I think the hit with subsampling may be a driver bug.

On another note,

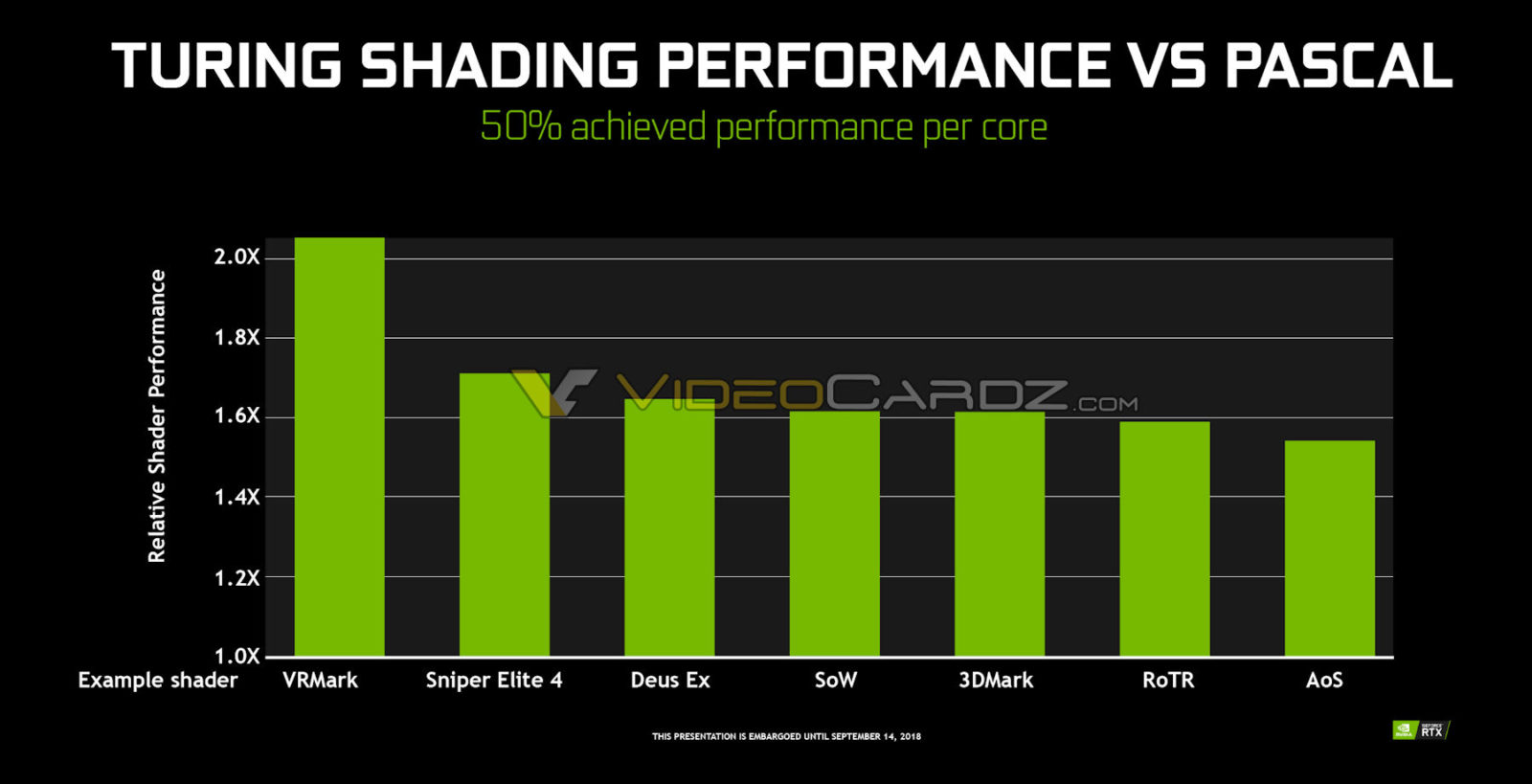

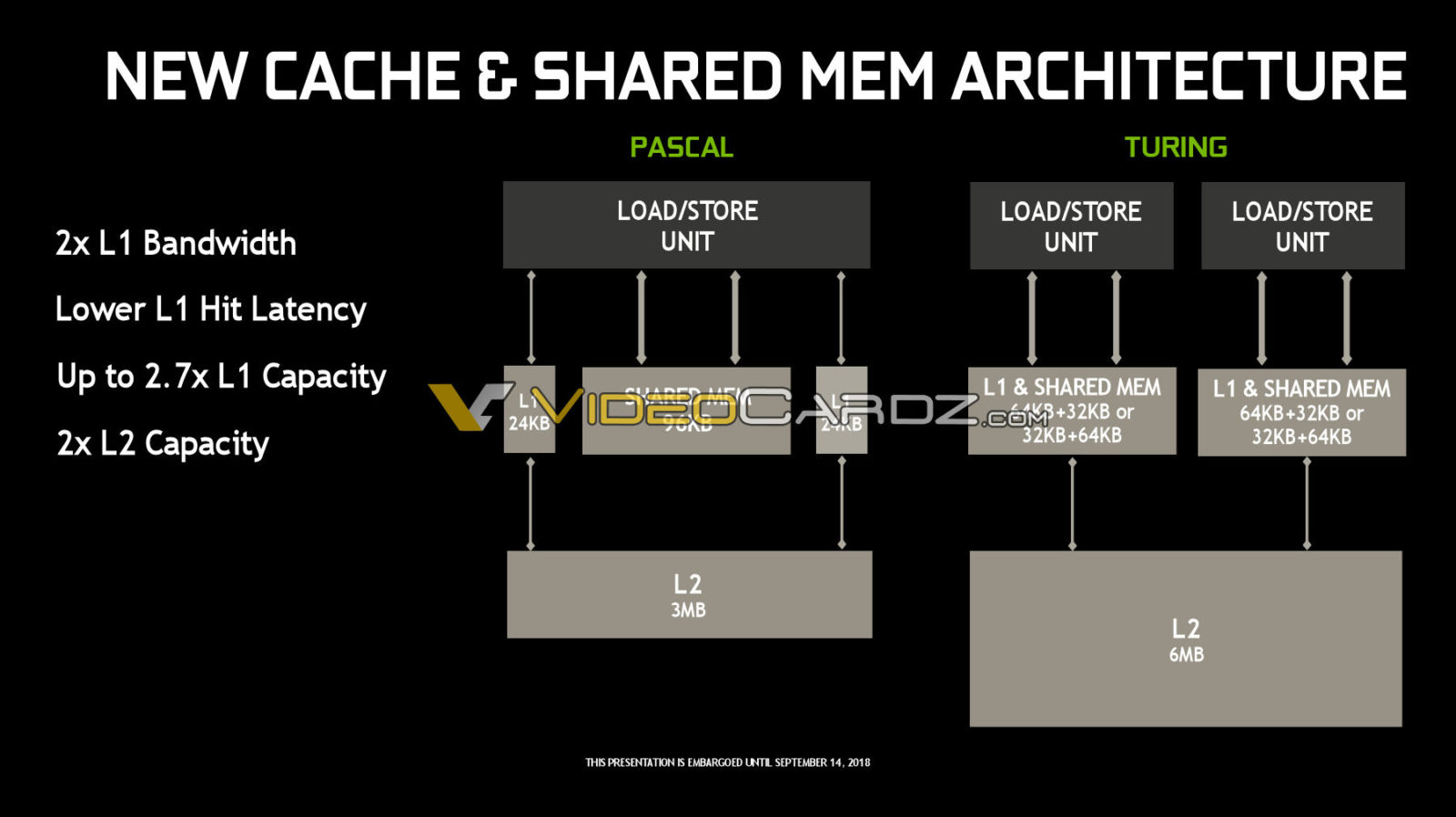

Another reason for speed increase:
