Hello All,

I have a question re: what SCP speeds I should be seeing when copying from VM to VM.

The box I'm using is quite beefy for what I'm supposed to be doing:

HW

Lenovo Server

2x Xeon Silver 4114 (10c/20t each CPU)

96GB RAM

2x 1 Gigabit Ethernet ports

4x Intel 4600 SSDs in RAID10 via a dedicated RAID card

Each one can do about 400 MB/s read and write, so with RAID10 I'm expecting a bit more than what I'm getting.
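Here is a rough back-of-the-envelope check of what the array could deliver. It assumes ideal striping and ignores RAID-controller, filesystem and caching effects. RAID10 on 4 drives stripes across 2 mirrored pairs, so sequential writes scale with the number of pairs. Sequential reads can, at best, be spread over all drives.

```python
# Back-of-the-envelope RAID10 throughput estimate.
# Assumptions (not measured): ideal striping, no controller/filesystem overhead,
# and reads that can be served from both disks of every mirror pair.

def raid10_estimate(num_disks: int, per_disk_mb_s: float) -> tuple[float, float]:
    """Return (best-case sequential read, sequential write) in MB/s."""
    if num_disks < 4 or num_disks % 2 != 0:
        raise ValueError("RAID10 needs an even number of disks, at least 4")
    mirror_pairs = num_disks // 2
    # Every write lands on both disks of a pair, so only the pairs add bandwidth.
    write = mirror_pairs * per_disk_mb_s
    # In the best case, reads are spread over every disk.
    read = num_disks * per_disk_mb_s
    return read, write

read, write = raid10_estimate(4, 400)
print(f"read ~{read:.0f} MB/s, write ~{write:.0f} MB/s")
```

Even the conservative write figure (about 800 MB/s) is well above the transfer rate described below. That supports the assumption that the SSD array itself shouldn't be the limit.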

ESXi Config

I'm an idiot/noob at this and didn't configure much in ESXi. I just set my management IP to static and added the 2nd gigabit Ethernet port as a NIC team.

Guest VMs

RHEL 5.8 64-bit, using the VMXNet3 Ethernet driver

RHEL 5.8 32-bit, using the VMXNet3 Ethernet driver

Each has plenty of resources allocated: at least 10 cores and over 16GB RAM per VM, with no overallocation. These two, plus a tiny Ubuntu VM used only for remote desktop and other minor things, are the only VMs on this host.

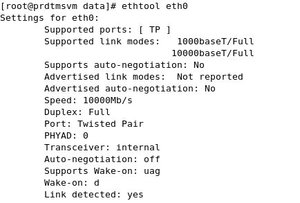

Both VMs see a 10 Gb Ethernet link (see attached picture).

**This may or may not be significant:** I initially set up both VMs with E1000 network hardware. I installed VMware Tools BEFORE switching the adapter to VMXNet3 in ESXi. No other changes were made to the guest OSes.

Edit: Not significant. I made 2 new VMs with VMXNet3 set as the initial hardware. They had no network until I installed VMware Tools, and the result was exactly the same.

Issue

I'm copying about 50 large 4GB files from one VM to the other via SCP, and I'm seeing transfer rates of 110-125 MB/s. I assumed this would be quite a bit faster, since I'm probably not bottlenecked by the Ethernet or the SSDs.

I've read that because transfers between the 2 VMs go through the vSwitch rather than the physical Ethernet ports, the copy should be much faster. Why is this not the case?
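For comparison, here is a quick conversion of the nominal link speeds from bits to bytes per second. This is a sketch that uses 1 MB = 10^6 bytes and ignores Ethernet/TCP/SSH framing overhead, which pushes usable throughput a little lower.

```python
# Convert a nominal link speed in Gbit/s to MB/s (1 MB = 10**6 bytes).
# Protocol overhead is ignored, so real-world throughput will be somewhat lower.

def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    bits_per_s = gbit_per_s * 1e9
    return bits_per_s / 8 / 1e6

for label, speed in [("1 GbE physical port", 1), ("10 Gb VMXNet3 link", 10)]:
    print(f"{label}: ~{gbit_to_mb_per_s(speed):.0f} MB/s")
```

The observed 110-125 MB/s lines up closely with the ~125 MB/s ceiling of a single 1 Gbit link. It sits far below what the 10 Gb virtual link would nominally allow.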

The exact command I'm using to transfer:

scp -r -c arcfour <files> <user>@<ip>:/directory

Thanks!
