I don't expect that using Windows Enterprise or Server will help. Windows may scale up to 256 cores on server workloads, but possibly not on HEDT/workstation workloads.

Windows 10 Pro 64-bit supports up to 256 cores per CPU. I hope that we can put this to rest now.

That being said, the scheduler might be absolute garbage in Windows 10 Pro and only improve as you move up to Enterprise (I'm speculating here).

Linus is at least surprised at how badly Windows performs in some of the benchmarks. Also, not all benchmarks where the 2990WX came out ahead are perfectly tuned for parallelism.

Linus doesn't seem convinced the problem is on Microsoft's side.

https://www.realworldtech.com/forum/?threadid=179265&curpostid=179281

https://www.realworldtech.com/forum/?threadid=179265&curpostid=179333

http://openbenchmarking.org/result/1808130-RA-CPUUSAGED10

Certainly there are some benchmarks that fit your description, but by no means all.

It seems to me that the 2990WX performs better in the Phoronix review because the suite uses many microbenchmarks and toy-like workloads that fit into cache and so avoid the latency/bandwidth penalties on the compute dies (the two dies with no directly attached memory).

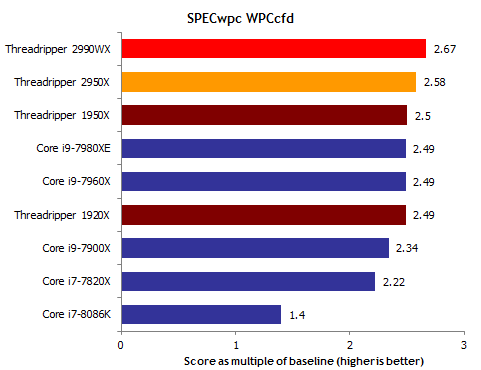

In particular, 7-Zip compression and Linux kernel compilation don't fit into cache. CFD depends heavily on memory bandwidth, and judging by some of the Windows results one might think the TR 2990WX has hit a wall here (source: https://techreport.com/review/33977/amd-ryzen-threadripper-2990wx-cpu-reviewed/7):

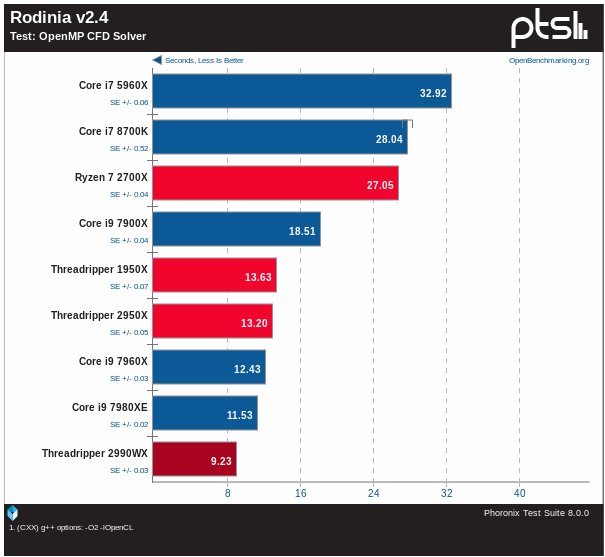

In reality, that could just as well be a peculiarity of Windows and/or the benchmark (source: https://www.phoronix.com/scan.php?page=article&item=amd-linux-2990wx&num=4).
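A rough way to check whether a workload's data "fits into cache" is a pointer-chasing test: walk a buffer in random order, one dependent load at a time, and watch the time per step jump once the buffer outgrows each cache level. Here's a minimal Python sketch of the idea (my own illustration, not something used by Phoronix or Tech Report; `make_cycle` and `chase_ns_per_step` are made-up names). Interpreter overhead inflates every number, so only the relative jump between sizes means anything; a C version would show the cache/DRAM latency steps much more sharply.

```python
import random
import time
from array import array


def make_cycle(n: int, seed: int = 0) -> array:
    # Sattolo's algorithm: shuffle the identity permutation so that following
    # i -> perm[i] forms ONE cycle through all n slots (no short loops that
    # would keep the walk inside a small, cache-resident subset).
    rng = random.Random(seed)
    perm = array("q", range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # 0 <= j < i; excluding j == i is what forces a single cycle
        perm[i], perm[j] = perm[j], perm[i]
    return perm


def chase_ns_per_step(n: int, steps: int = 1_000_000) -> float:
    # Random dependent loads over an n * 8-byte buffer: each load's address
    # comes from the previous load, so prefetching can't hide the latency.
    perm = make_cycle(n)
    idx = 0
    start = time.perf_counter()
    for _ in range(steps):
        idx = perm[idx]
    elapsed = time.perf_counter() - start
    return elapsed / steps * 1e9


if __name__ == "__main__":
    # Working sets from 32 KiB up to 64 MiB, i.e. several times the 8 MB L3
    # of a single Zen+ CCX. The largest size takes a few seconds to shuffle.
    for kib in (32, 256, 2048, 16384, 65536):
        n = kib * 1024 // 8  # 8 bytes per array("q") element
        print(f"{kib:>6} KiB: {chase_ns_per_step(n):6.1f} ns/step")
```

A workload whose hot data stays in the small-buffer regime won't care much which die it runs on; one that lives in the large-buffer regime is exactly where the compute dies' extra hop to memory should show up.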
