cageymaru

Fully [H]

- Joined: Apr 10, 2003
- Messages: 22,080

We have all wondered why our games stutter even when we maintain at least 60 fps. Alen Ladavac, Chief Technology Officer (CTO) at game developer Croteam, tackles this issue head-on in a way that a layman or tech enthusiast can appreciate. He discusses how older games were hand-drawn to make sure that a character moved an exact number of pixels per frame, and how games had different animations depending on whether they were released for 50 Hz PAL or 60 Hz NTSC regions.

Then he moves on to how the game can think that the GPU didn't make 60 fps because of other operations multitasking on a PC, so it creates frames designed for a slower frame rate when in reality everything was running smoothly. This causes the dreaded stuttering that we all experience in games. He goes on to state, "The real solution would be to measure not when the frame has started/ended rendering, but when the image was shown on the screen." None of the graphics APIs support this feature on all OS platforms, so Croteam is advocating for support to be added in the near future.

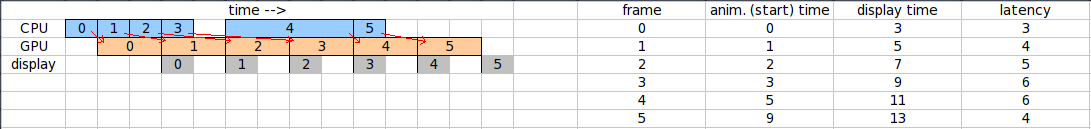

What happens here is that the game measures what it thinks is start of each frame, and those frame times sometimes oscillate due to various factors, especially on a busy multitasking system like a PC. So at some points, the game thinks it didn't make 60 fps, so it generates animation frames slated for a slower frame rate at some of the points in time. But due to the asynchronous nature of GPU operation, the GPU actually does make it in time for 60 fps on every single frame in this sequence.
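To put rough numbers on that, here is a small Python sketch of the effect. It is only an illustration, not Croteam's code: the timestamps, the jitter, and the 10 pixels per refresh speed are all made up, and `on_screen_steps` is a hypothetical helper. It assumes every frame really is displayed on time at 60 Hz and compares how far an object moves per frame when the animation is driven by the game's measured frame starts versus the times the images actually appeared.

```python
# Assumed for illustration: an object moving 600 px/s on a 60 Hz display,
# i.e. 10 pixels per refresh interval.
PIXELS_PER_REFRESH = 10.0


def on_screen_steps(timestamps):
    """Distance moved between consecutive frames when the animation
    advances by the delta between the given timestamps.

    Timestamps are expressed in refresh intervals (1 unit = 1/60 s).
    """
    return [
        (cur - prev) * PIXELS_PER_REFRESH
        for prev, cur in zip(timestamps, timestamps[1:])
    ]


# What the game measures as the start of each frame (invented jitter:
# the third frame is measured late, so the following delta looks short).
measured_starts = [0.0, 1.0, 2.5, 3.0, 4.0, 5.0]

# When each image actually appeared on screen: one per refresh, on time.
displayed_at = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

game_steps = on_screen_steps(measured_starts)
ideal_steps = on_screen_steps(displayed_at)

print(game_steps)   # [10.0, 15.0, 5.0, 10.0, 10.0]
print(ideal_steps)  # [10.0, 10.0, 10.0, 10.0, 10.0]
```

Both versions cover the same total distance, but the one driven by measured frame starts jumps 15 pixels and then only 5 on frames that were shown exactly one refresh apart. That uneven motion is the stutter, even though nothing missed 60 fps.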

