
Deleted member 133315

Guest

... confused ...

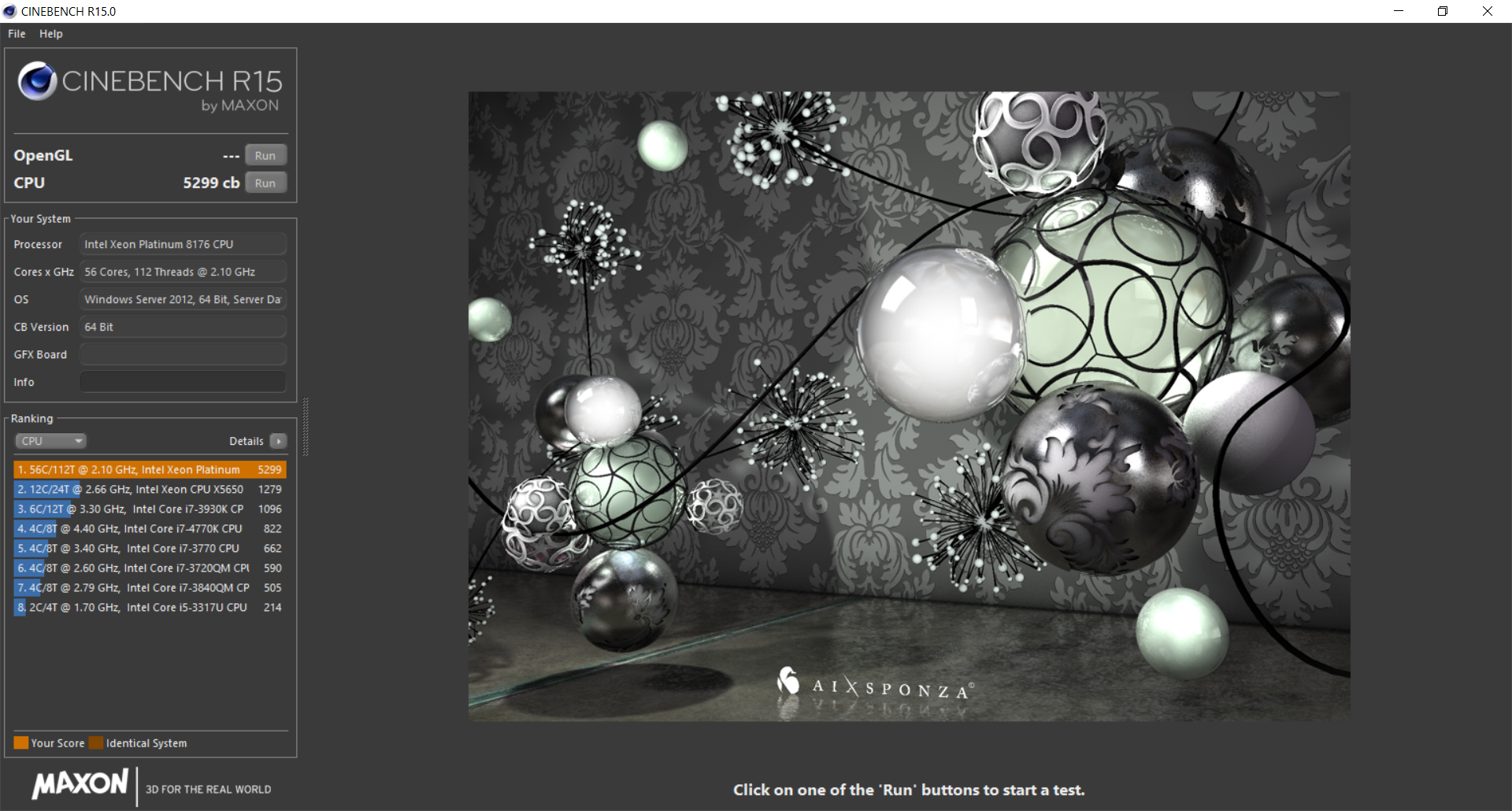

Of course it can play Crysis. Not at the moment, obviously; but slap in a video card (or three) and it'll be just fine.

It's a joke from about 10 years ago, when Crysis was released and brought every PC to its knees.

After that, every new CPU, video card, PC, etc. that got released was asked, "Can it play Crysis?"

The usual reply was: "Nothing can play Crysis."

And even seeing that cracking setup you have, I still say nothing can play Crysis.
