RandomNameAndNumber (n00b) · Joined: Apr 12, 2018 · Messages: 29

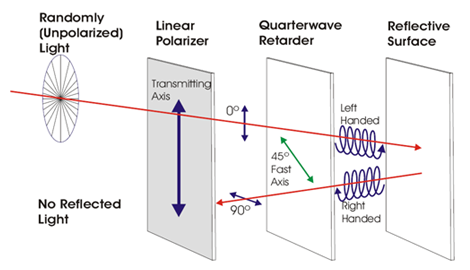

Do you have a direct link to this sort of mod? I like mods, and even if I don't entertain the idea of doing it, knowing is half the battle.

polarizer mod.

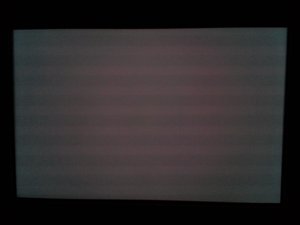

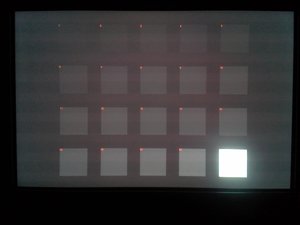

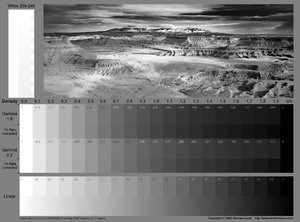

When I removed the glare screen, I marveled at how much light it blocked. I see what you are saying, and did set my brightness to 0. Contrast is 65. 8/8/12 on the RGB BIAS looks like a really nice color. Blacks are certainly more "black". I am in a well-lit room, so I don't expect to get a good impression until this evening during "real gaming hours". Is there a good comparison photo that would guide me better? I have a suite of larger "Test" images I have been running as a screen saver for the last 10 years. I never got into purity of color and blacks. Close counts, and I am as delicate as a sledgehammer. This monitor would probably look better WITH any sort of help in the black department.

black almost not visible

If you knew what my monitor looked like after I destroyed the plastic anti-glare, you would understand that this is an exponential improvement. That is a story for another post, however.
