Zarathustra[H] (Extremely [H]; joined Oct 29, 2000; 38,835 messages)

You know some of these self driving car accidents are pretty scary, but don't they still have a better track record per mile than human drivers?


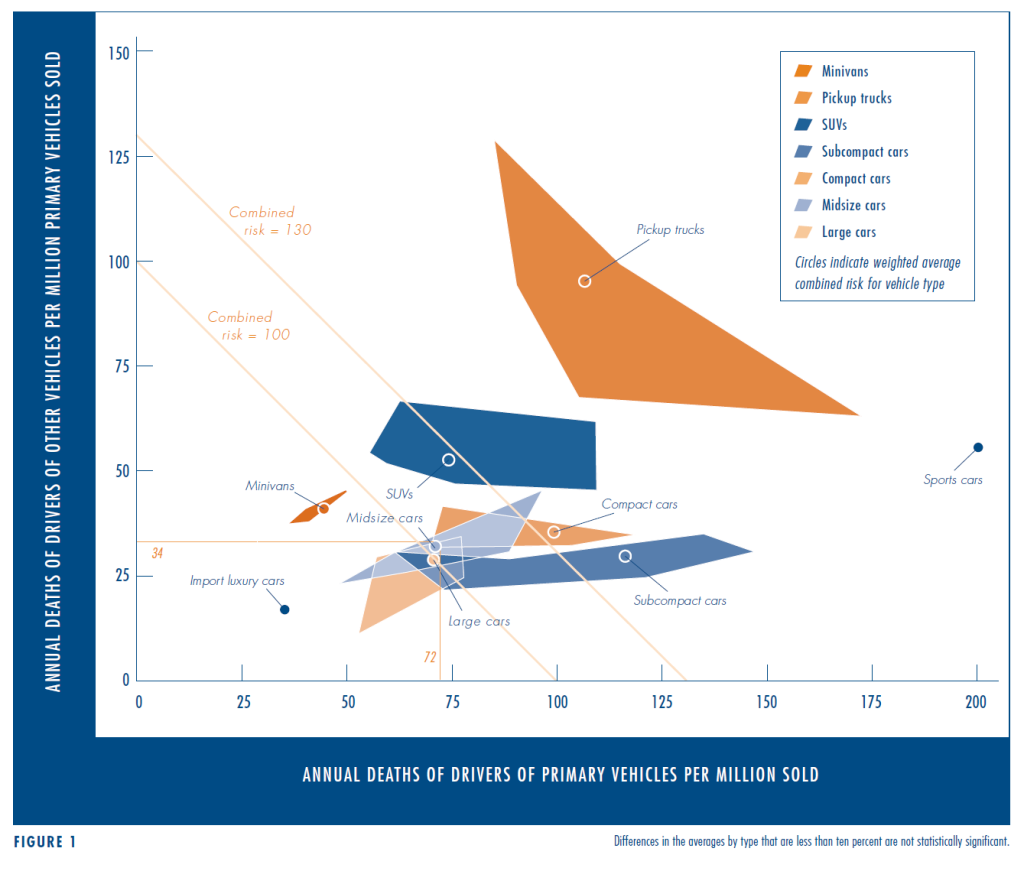

Per passenger mile, mass transit is much safer. And ultimately, looking at this chart, it's much safer to be a passenger in anything other than a passenger car or light truck; in those other vehicles, it's pedestrians or people in other vehicles who die, not the passenger(s) in the measured vehicle.

View attachment 64173

Keep your hands on the wheel and your eyes on the road, and treat Autopilot the way you would a passenger in the car who says "Oh shit, watch out!" when they see something you might have missed. It's a safety system you hopefully won't ever need, but it's an awesome tool that might save your skin in an unexpected situation.


But the point being made was we shouldn't accept deaths as part of the system, and mass transit was proposed as part of that answer. Surprise, there's death there too. Guess what, everyone, nothing is perfect. Human drivers kill people in huge numbers every year, but nobody says stop all driving.

People misusing advanced lane assist account for an incredibly small percentage of that, and given the number of lane assist systems on the road, I'd lay money that the death rate is lower than for unassisted drivers.

Ditto with AI "drivers"

BB

This data is a little old, but if still accurate it would seem that when it comes to cars and light trucks, the absolutely worst thing you can be in is a pickup truck, followed by an SUV.

It is of course detected by the sensors; the problem is that the neural net can't classify it properly. This is most likely an issue with the training set and will improve. Given the large number of sensor combinations, issues like this are bound to happen, but hopefully most of them can be resolved over time.

And that is why it is still classified as beta, and drivers have to accept the disclaimer that BlueFireIce posted.


I wonder how much of that is self-selection of the drivers of such vehicles versus the vehicle type itself. Purely anecdotal, but it seems that SUV and pickup drivers often drive faster and more aggressively than even the average sports coupe driver, in vehicles with a much longer stopping distance and a higher center of gravity. Our monkey brain equates being taller than someone with power, and that seems to translate to sitting up higher in those types of vehicles.


Yup... it might look just like when humans fuck things up because they don't know how to drive in the snow!

Can't wait to see self driving in heavy snow, freezing rain, or heavy fog, or any of those at night for more entertainment.


The last report doesn't take into consideration the number of accidents that the human safety drivers prevented. Self-driving cars have also not been significantly tested in severe weather.

Hmmm, the last report doesn't jibe with your conclusions/assertion.

Seems like they're close at this point.

BB

But but but neural nets! /S

No, even Waymo for 2017 reported 63 disengagements for serious issues over 352K miles of testing.

https://www.dmv.ca.gov/portal/wcm/c...5-a72a-97f6f24b23cc/Waymofull.pdf?MOD=AJPERES

For 2016, Waymo reported that without human driver intervention, the cars would have hit something 9 times in 635K miles of testing.

https://spectrum.ieee.org/cars-that...ving/the-2578-problems-with-self-driving-cars

American drivers (often driving in more challenging environments) have an accident rate of 4.2/million miles.

https://vtnews.vt.edu/articles/2016/01/010816-vtti-researchgoogle.html
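Normalizing the three figures above to the same unit makes the comparison easier to see. This is a minimal sketch using only the numbers quoted in the post; keep in mind that a disengagement is not a crash, and Waymo's "would have hit something" events are not the same measure as the human crash rate from the VTTI article, so the results are not strictly apples to apples. The function name is illustrative.

```python
def per_million_miles(events: int, miles: float) -> float:
    """Convert an event count over a given mileage into events per million miles."""
    return events / miles * 1_000_000


# Figures as quoted in the post above (not independently verified here).
rates = {
    "Waymo 2017 disengagements": per_million_miles(63, 352_000),
    "Waymo 2016 would-have-hit events": per_million_miles(9, 635_000),
    "US human drivers (crashes)": 4.2,  # already expressed per million miles
}

for label, rate in rates.items():
    print(f"{label}: {rate:.1f} per million miles")
```

Put on the same scale, the 2016 would-have-hit figure works out to roughly 14 per million miles, which is the number to hold up against the 4.2 human rate, with the caveats above.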

If a product is used in a way it was not intended, but the product can essentially act on its own, who is liable for the mistakes that result?

Beta: gaming/OS/IoT/refrigerator/smartphone/flatscreen/thermometer... (you get the point) software doesn't put groups of people's lives in jeopardy if a subsystem isn't ready for mass consumption. Beta is supposed to mean a version ready for release, coming after alpha, which usually never sees the light of day.

When a system depends on software for its critical functions, maybe beta isn't enough.

Really bad point... but: when the military rolls out a new computerized machine, it only kills the wrong group of people because of a targeting issue. When a car is seen as being able to drive itself, citizens' lives are at risk. Citizens are now on a battlefield as test dummies for *soon to be improved* software.

I am looking forward to autonomous vehicles; I just expect them to be developed responsibly, not with a *let's try this and see if it will kinda work* attitude and published numbers that seem to rationalize the problem.

Only X deaths after Y million miles. Accidents happen, but these products *cause* the accident. There's a difference.

I really feel that the problem stems from how the feature was marketed, so the solution is to control how it is sold. That may not prevent every instance, but it will at least make the manufacturer seem more responsible.

And those aren't America. They are likely the size of a small state. Please stop trying to make us into somewhere else. We like it here.

What percentage of drivers are familiar with nothing but the Hollywood version of commercial airplane autopilot? 90%?

It's there to reduce fatigue and make small adjustments to keep on course, the same thing aircraft autopilot is for. Keep in mind that aircraft autopilot has far less to deal with since the plane is in the air, and, despite what people (including yourself) seem to think, it does not fly the plane without any input, control, or attention from a pilot. What it does is let pilots keep a better eye on other things, from the aircraft's many systems to the weather. So the name is fitting. When you use the system (in a Tesla), it warns you about what the system is and is not for, and that you need to stay in control at all times. I believe they still require you to sign an agreement when you get a car with this feature stating that you understand it. But since some people are stupid enough to ignore all that, Tesla also added hand-placement detection plus audible and visual warnings for when the driver has chosen to ignore the paperwork they read and signed and the warning prompt they have to click when engaging the system.

View attachment 64037

You'd think this would not have to be stated. But I bet the number is closer to 97%.


The 'hold steering wheel' message and accompanying flashing border appear within the first 10 seconds of hands-free driving. After 15 seconds you get a loud warning sound, and at around 30 seconds the warning sound plays continuously until, if your hands are still off the wheel, the vehicle begins to decelerate to a stop at about 40 seconds.

So basically they give you about 45 seconds of wanking off behind the wheel before it deactivates, and you can't re-engage it until a couple of hours later.
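The escalation described above can be sketched as a simple threshold lookup on how long the hands have been off the wheel. The thresholds are the approximate figures from this post ("within the first 10 seconds" is simplified to a 10-second threshold); they are not Tesla's actual firmware values, and the `Alert` names and `alert_for` function are hypothetical. The lockout after deactivation is not modeled.

```python
from enum import Enum


class Alert(Enum):
    NONE = "no alert"
    VISUAL = "'hold steering wheel' message + flashing border"
    CHIME = "loud warning sound"
    CONTINUOUS = "warning sound plays continuously"
    DECELERATE = "vehicle slows to a stop"


# Approximate hands-free thresholds in seconds, taken from the post above.
# Illustrative only: not Tesla's real values. Ordered most severe first.
ESCALATION = [
    (40.0, Alert.DECELERATE),
    (30.0, Alert.CONTINUOUS),
    (15.0, Alert.CHIME),
    (10.0, Alert.VISUAL),
]


def alert_for(hands_free_s: float) -> Alert:
    """Return the most severe alert whose threshold has been reached."""
    for threshold, alert in ESCALATION:
        if hands_free_s >= threshold:
            return alert
    return Alert.NONE


for t in (5, 12, 20, 35, 42):
    print(t, alert_for(t).value)
```

Checking the most severe stage first keeps the lookup a single pass and makes each stage supersede the earlier ones, matching the "warning escalates until the car stops" behavior the post describes.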

https://www.wired.com/story/tesla-autopilot-why-crash-radar/

I thought that these Tesla cars have radars, just like my almost 9-year-old Prius.

If it does, then it can keep a safe distance behind a car. Why is it that it doesn't really have any problems with driving into a concrete wall, then?

I remember a video where a Prius slammed on the brakes for aluminum foil hanging in front of it. That's how a fella tested this new system after buying the car.

If it doesn't have one, then I really don't understand how the hell autopilot is better than cruise control with a radar. I think it is far easier to keep steering a car than to keep playing with the pedals.

Either way it sucks, and as others have pointed out, the faintly flashing white lights are nowhere near enough to alert a distracted driver.

My Prius can scare the bejesus out of me (and anyone else in the car) when it beeps and tells me to 'BRAKE ! ! !' when I intend to get around a slowly turning bus in an intersection.

Jeez, so at a typical highway speed of 80 mph, the car covers almost 120 feet every second, and it keeps going for about 40 seconds before it even starts to slow down if something goes wrong?

That seems kind of bad.
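At 80 mph a car covers about 117 feet per second, which is where the "almost 120 feet" figure comes from. This sketch checks that arithmetic and multiplies it out over the roughly 40 to 45 seconds of the escalation timeline described earlier in the thread, assuming constant speed and no braking; the helper names are illustrative.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_fps(mph: float) -> float:
    """Convert miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def distance_ft(mph: float, seconds: float) -> float:
    """Distance covered at constant speed, with no braking."""
    return mph_to_fps(mph) * seconds


print(round(mph_to_fps(80), 1))    # 117.3 (feet per second)
print(round(distance_ft(80, 40)))  # 4693 (feet before deceleration starts)
print(round(distance_ft(80, 45)))  # 5280 (feet: one full mile)
```

In other words, 45 seconds at 80 mph is exactly one mile of travel.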


You all do realize this is SkyNet shit, right? It's just the beginning...

There's plenty of money and political capital to be made for being lenient towards tech

I shouldn't be surprised by the amount of callousness in society, but I still am when I'm reminded of it. It makes me get behind the FDA when they take an inordinate amount of time to OK a new drug that's already being used overseas.

One of my ongoing realizations about the ability of "big money" to make things happen is how they place new drugs in the hands of doctors who will prescribe them to a needy public. Then there are all of the warnings that a lot of the new drugs come with.

A 60-second commercial about a new drug comes with 45 seconds of the narrator listing all the other things you have to be aware you may fall victim to.

Sometimes it's followed by another commercial, with lawyers telling you that they will represent you in a class action over last year's go-to drug.

The edge face of barriers is quite small, and the systems have trouble tracking them at a distance. They are far more suited to tracking large objects (cars) moving at approximately the same speed as the radar.
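One way to see why objects moving at roughly the car's own speed are easier to handle: a Doppler radar measures range rate, and a target's ground speed is the ego speed plus that range rate. A stationary barrier closes at exactly the car's own speed, just like overhead signs or bridges, so a tracker tuned to avoid phantom braking may discount such returns. The filter below is a simplified, hypothetical illustration of that idea; the `RadarReturn` type, function names, and the 1 m/s threshold are invented for the example and are not any manufacturer's actual logic.

```python
from dataclasses import dataclass


@dataclass
class RadarReturn:
    range_m: float          # distance to the target (unused by this filter)
    range_rate_mps: float   # rate of change of range; negative means closing


def ground_speed(ret: RadarReturn, ego_speed_mps: float) -> float:
    """Target speed along the road: ego speed plus measured range rate."""
    return ego_speed_mps + ret.range_rate_mps


def is_tracked(ret: RadarReturn, ego_speed_mps: float,
               stationary_threshold_mps: float = 1.0) -> bool:
    """Hypothetical filter: keep only returns that are moving over the ground."""
    return abs(ground_speed(ret, ego_speed_mps)) > stationary_threshold_mps


ego = 30.0  # m/s, roughly 67 mph
lead_car = RadarReturn(range_m=60.0, range_rate_mps=-2.0)  # moving at 28 m/s
barrier = RadarReturn(range_m=60.0, range_rate_mps=-30.0)  # closing at ego speed
print(is_tracked(lead_car, ego))  # True
print(is_tracked(barrier, ego))   # False
```

A filter this blunt would never brake for a wall, which is the trade-off being argued about here: fewer false alarms for foil and overpasses at the cost of ignoring genuinely stationary obstacles.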

I would probably have drawn that conclusion if it smashed into a single barrier from the longitudinal axis.

But this video and the pictures of the lethal crash both show barriers in front, each of them 2-3 feet wide.

If it has no problem tracking a person, I'm pretty sure the system is capable of tracking objects/obstacles this wide at a sufficient distance that, even if it identified them late, it could still slow the vehicle down enough to make the incident non-lethal.

How do you tell the difference between a deflection in the pavement which you will safely pass over without an issue and a wall that's 200ft out?
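As a rough check on the argument that even late detection could slow the car to a survivable speed, constant-deceleration kinematics give the speed left after braking over a distance d as v = sqrt(v0^2 - 2ad). This sketch uses the 200 ft from the question and assumes a hypothetical 0.7 g of braking with zero detection or reaction delay, so real-world results would be worse; the function names are illustrative.

```python
import math

G_FTPS2 = 32.174  # standard gravity in ft/s^2
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_fps(mph: float) -> float:
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def fps_to_mph(fps: float) -> float:
    return fps * SECONDS_PER_HOUR / FEET_PER_MILE


def stopping_distance_ft(v0_mph: float, decel_g: float = 0.7) -> float:
    """Distance to stop from v0 at constant deceleration: v0^2 / (2a)."""
    v0 = mph_to_fps(v0_mph)
    return v0 ** 2 / (2 * decel_g * G_FTPS2)


def speed_after_braking(v0_mph: float, distance_ft: float,
                        decel_g: float = 0.7) -> float:
    """Speed (mph) left after braking over distance_ft; 0 if the car stops first."""
    v0 = mph_to_fps(v0_mph)
    v_sq = v0 ** 2 - 2 * decel_g * G_FTPS2 * distance_ft
    return fps_to_mph(math.sqrt(v_sq)) if v_sq > 0 else 0.0


for mph in (50, 65, 80):
    print(f"{mph} mph: stops in {stopping_distance_ft(mph):.0f} ft; "
          f"{speed_after_braking(mph, 200):.0f} mph left at a wall 200 ft out")
```

Even in the 80 mph case, braking over 200 ft sheds a large share of the speed, and since crash energy scales with the square of speed, the reduction in impact energy is larger still.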

According to an article from Electrek, a Tesla owner in Indiana decided to recreate last month's fatal crash, in which the car's Autopilot drove straight into a K-rail. The video below shows a Model S with the latest Autopilot hardware mistakenly treating a right-side lane as a left-side lane and pointing the vehicle squarely at a barrier.

How is it that the radar system in neither of these cars could see a solid, immobile concrete barrier? Fortunately, the Indiana driver in the video managed to stop just in time.