M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,035

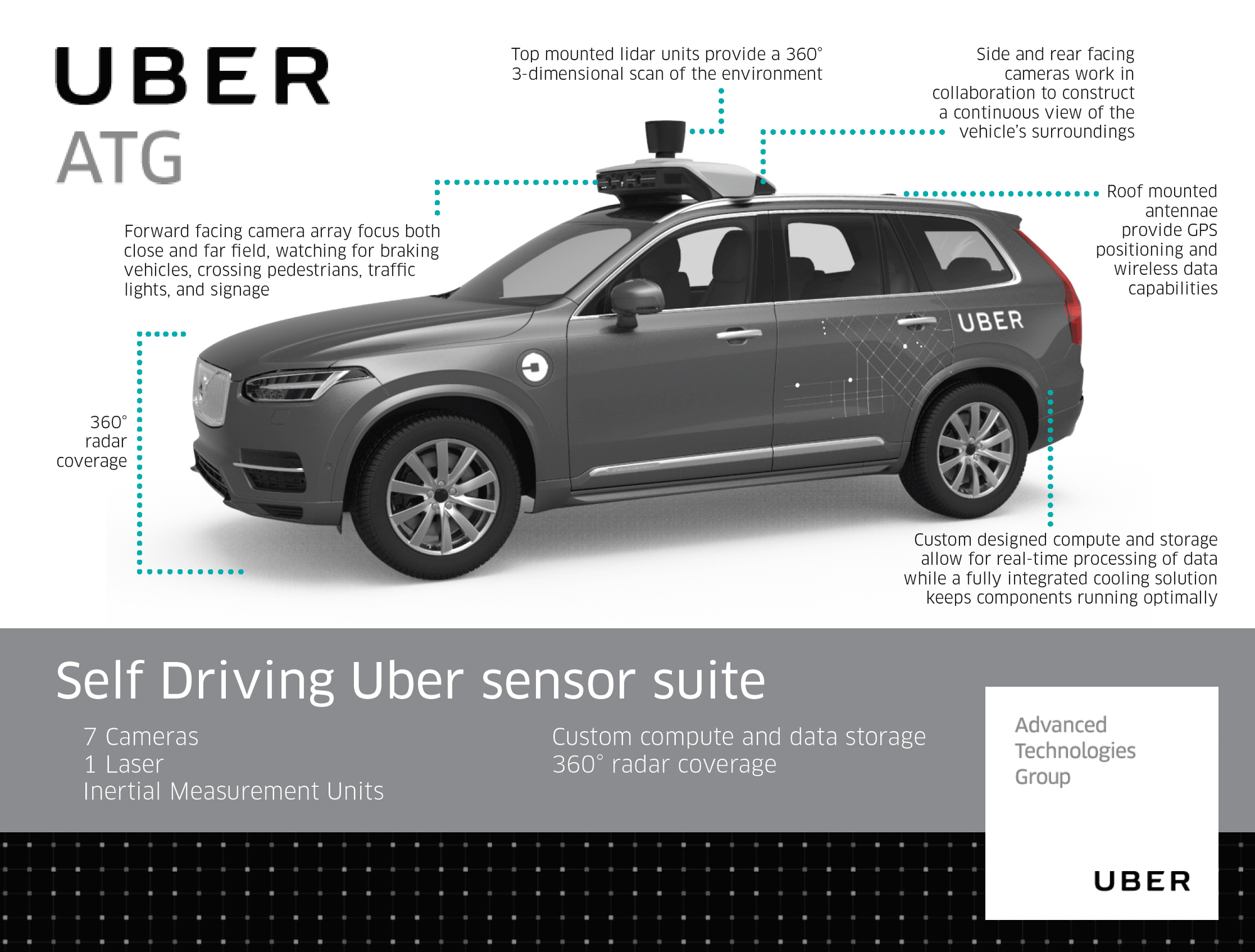

Are you just throwing terms out there? FOV has nothing to do with a target being soft-bodied either. If it were a FOV issue, you wouldn't see a solid target either. If I remember correctly, Uber uses a Velodyne lidar with a 360° FOV, which has a very high pulse repetition rate but relatively slow rotation. How do reflections work again when using lasers with a narrow FOV?
