Lunas

[H]F Junkie

- Joined: Jul 22, 2001
- Messages: 10,048

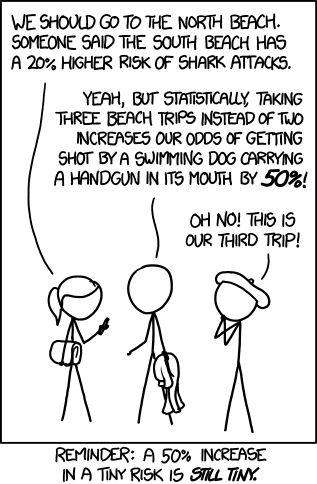

I was looking for information on RAID 0 on a laptop for my two FireCudas, and all I got was "eww, RAID 0, if you really want to risk your data like that" and "gross, if you want to have your array fail on you..."

Everywhere I went, it was "RAID 0 is the devil."

I remember that before SSDs, RAID 0 was hot shit: if you weren't running it you were missing out, and people were touting 4-disk arrays of 15k RPM WD Blacks...

So, to get on with this:

My new laptop has two internal 2.5" bays and two M.2 slots, one of which supports NVMe.

It came with one 512 GB WD Blue M.2 SATA SSD.

I put two 2 TB FireCuda SSHDs inside.

Right now the FireCudas are set up as a spanned volume in Windows 10.

I can turn on Intel RST and put the two FireCudas in RAID 0. Should I? It requires me to reinstall Windows, and Seagate discourages RAID with FireCuda. Too bad Seagate is the only game in town for 2.5" spinning disks larger than 1 TB.
