Supercharged_Z06

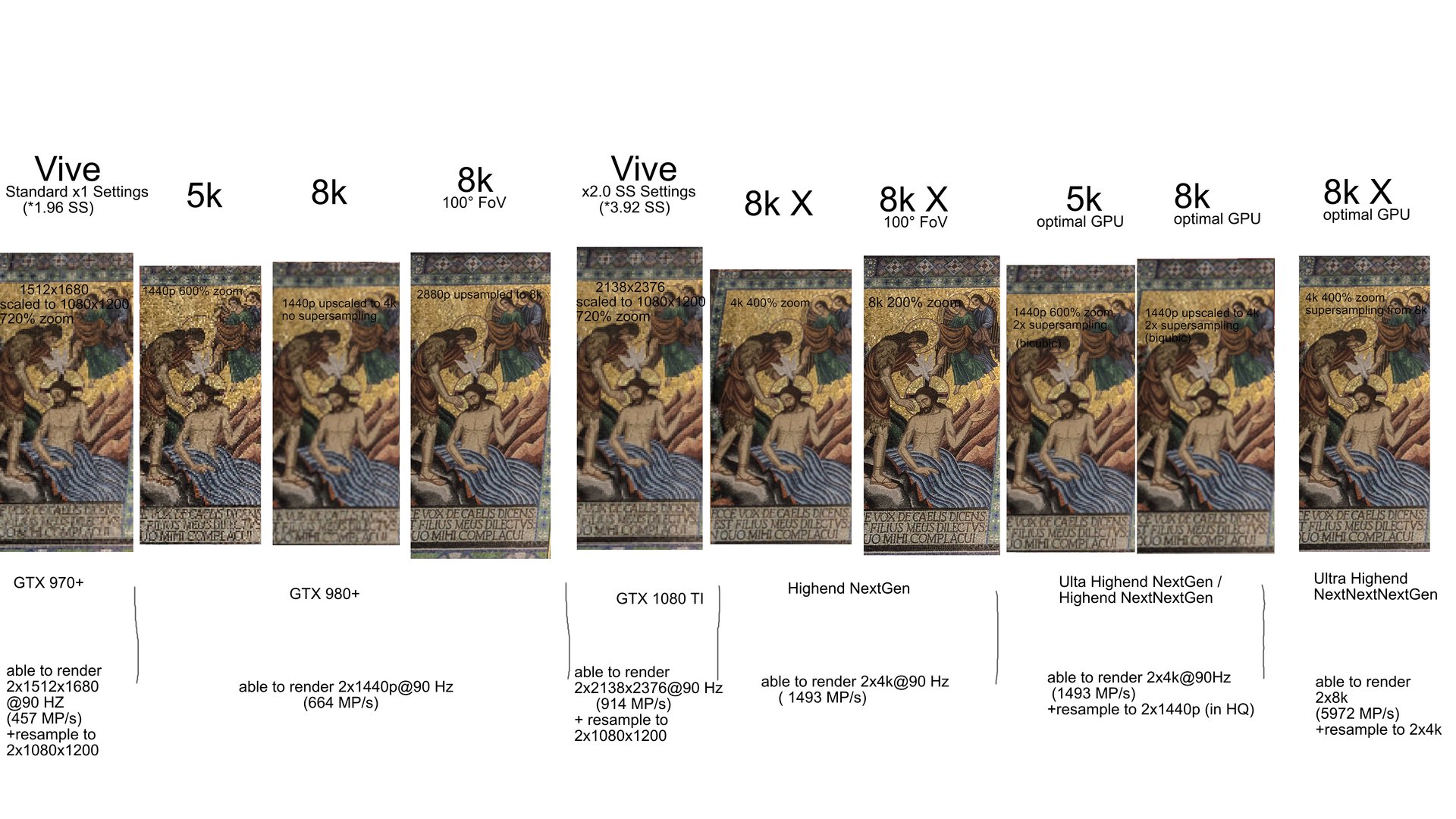

Found an interesting visual comparison of the graphics quality we can look forward to with the Pimax 8K X, and the GPU rendering power it's going to require. Not my work (it's from the Pimax forums), but interesting nonetheless. Note that the numbers take into account the extra rendering power that supersampling needs for the really good stuff:
