So I came from a 5960X to a 1950X Threadripper.

I have a Samsung 960 Pro. On my old Intel system I could easily transfer my test files (40 GB) at 2.0 GB/s.

Copying the same files on my new Threadripper install, I'm at 1.46 GB/s.

I have the same Samsung NVMe driver installed.

Am I missing something, or is NVMe performance just slower on AMD/Threadripper?

I have an ASUS Zenith Extreme motherboard, with the newest BIOS from the ASUS website installed.

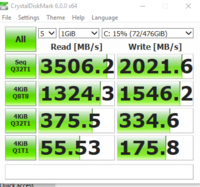

Here is my CrystalDiskMark score.

Is it possible it's because I was running a 125 MHz bus speed instead of 100 on the Intel system?
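
As a rough sanity check on the bus speed idea, here is a minimal sketch using only the numbers above. It assumes throughput would scale linearly with bus clock, which is purely a hypothetical model and may not reflect how the PCIe clock was actually derived on the Intel board. The `scaled_speed` helper is made up for illustration.

```python
# Figures from the post; linear scaling with bus clock is an ASSUMPTION,
# not a measured or documented behavior of either platform.
intel_gbps = 2.0      # copy speed on the 5960X system (GB/s)
amd_gbps = 1.46       # copy speed on the 1950X system (GB/s)
intel_bclk_mhz = 125  # bus speed used on the Intel system
stock_bclk_mhz = 100  # stock bus speed


def scaled_speed(speed_gbps, from_mhz, to_mhz):
    """Scale a throughput figure linearly with bus clock (hypothetical model)."""
    return speed_gbps * to_mhz / from_mhz


observed_ratio = intel_gbps / amd_gbps
clock_ratio = intel_bclk_mhz / stock_bclk_mhz
amd_at_125 = scaled_speed(amd_gbps, stock_bclk_mhz, intel_bclk_mhz)

print(f"observed speed ratio (Intel / AMD): {observed_ratio:.2f}")
print(f"bus clock ratio (125 / 100):        {clock_ratio:.2f}")
print(f"1.46 GB/s scaled to 125 MHz:        {amd_at_125:.3f} GB/s")
```

Under that assumption the gap between the two systems is larger than the clock difference alone would give, so bus speed by itself would not fully account for it.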
